Difference between revisions of "Binding/Pinning"


Revision as of 10:44, 5 April 2018

Basics

Threads are "pinned" by setting certain OpenMP environment variables, which you can do with this command (note that there are no spaces around the =):

$ export <env_variable_name>=<value>
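A minimal sketch in bash showing the correct form. The value `close` is just an example; any valid value works the same way.

```shell
#!/usr/bin/env bash
# Correct form: no spaces around "=".
# Writing "export OMP_PROC_BIND = close" instead would make bash treat "="
# and "close" as separate names: "=" is rejected as not a valid identifier,
# and OMP_PROC_BIND does not receive the intended value.
export OMP_PROC_BIND=close

# The variable is now in the environment of every program started from this
# shell, e.g. an OpenMP application.
printenv OMP_PROC_BIND
```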

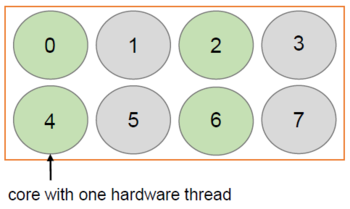

Pinning threads is an advanced way to control how your system distributes the threads across the available cores, with the purpose of improving the performance of your application or avoiding costly memory accesses by keeping the threads close to each other. The terms "thread pinning" and "thread affinity" are used interchangeably.

How to Pin Threads

OMP_PLACES specifies the places on the machine where the threads may run. On its own, however, this variable does not fully determine thread pinning, because the runtime still does not know in what pattern to assign the threads to the given places. Therefore, you also need to set OMP_PROC_BIND.

OMP_PROC_BIND specifies the binding policy, i.e. the rule by which the threads are distributed over the places.
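A minimal sketch of a pinned run in bash. The thread count and the values `cores` and `close` are example choices, and `./my_app` is a hypothetical name standing for your own OpenMP program. `OMP_DISPLAY_ENV` is a standard OpenMP (4.0 and later) variable that makes the runtime print its settings at startup, which is a quick way to check that your values were picked up.

```shell
#!/usr/bin/env bash
export OMP_NUM_THREADS=4     # number of threads (example value)
export OMP_PLACES=cores      # where threads may run: one place per core
export OMP_PROC_BIND=close   # how threads are assigned to those places
export OMP_DISPLAY_ENV=true  # runtime prints its OpenMP settings at startup

# ./my_app is a placeholder for your OpenMP program (hypothetical name).
if [ -x ./my_app ]; then
  ./my_app
fi
```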

To get a schematic overview of your machine's hardware, e.g. to find out how many hardware threads there are, run:

$ lstopo
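lstopo is part of the hwloc package. If it is not installed, a rough count can be obtained from standard tools. A minimal sketch, assuming Linux or macOS: `getconf _NPROCESSORS_ONLN` is a widely supported extension (not strict POSIX), and `lscpu` exists only on Linux, so it is called only if present.

```shell
#!/usr/bin/env bash
# Number of hardware threads (logical CPUs) currently online.
nthreads=$(getconf _NPROCESSORS_ONLN)
echo "hardware threads: $nthreads"

# On Linux, lscpu also reports threads per core, cores per socket and sockets.
if command -v lscpu >/dev/null 2>&1; then
  lscpu | grep -E '^(Thread|Core|Socket)'
fi
```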

OMP_PLACES

This variable can hold two kinds of values: a name specifying (hardware) places, or a list that marks places.

| Abstract name | Meaning |
|---|---|
| threads | a place is a single hardware thread, so the hardware threads (hyperthreads) of one core count as separate places |
| cores | a place is a single core, including all of its hardware threads |
| sockets | a place is a single socket, including all of its cores |
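A minimal sketch of setting an abstract name in bash. The OpenMP specification also allows a number in parentheses after the abstract name to limit the list to that many places; which cores are then chosen is implementation defined. The parentheses must be quoted, because they are special characters in the shell.

```shell
#!/usr/bin/env bash
# Alternative 1: each place is one core (with all of its hardware threads).
export OMP_PLACES=cores
printenv OMP_PLACES

# Alternative 2 (replaces the value above): a place list of 4 cores.
export OMP_PLACES="cores(4)"
printenv OMP_PLACES
```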

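To define specific places by an interval, OMP_PLACES can be set to <lowerbound>:<length>:<stride>, where <lowerbound> is the first place, <length> is the number of places, and <stride> is the offset (in hardware thread ids) between two consecutive places.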

All three values are non-negative integers, and the hardware threads they describe must exist on your system. The value of <lowerbound> is a place, written as a list of hardware threads in braces, e.g. {0,1}. It can also be written as an interval {<starting_point>:<length>}, which denotes a place holding <length> consecutive hardware threads beginning at <starting_point>: {0:1} is the single hardware thread {0}, and {0:2} is the place {0,1}.

| Example hardware | OMP_PLACES | Places |
|---|---|---|
| 24 cores with one hardware thread each, starting at core 0 and using every 2nd core | {0}:12:2 or {0:1}:12:2 | {0, 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22} |
| 12 cores with two hardware threads each, starting at the first two hardware threads on the first core ({0,1}) and using every 2nd core (a stride of 4 hardware threads) | {0,1}:6:4 or {0:2}:6:4 | {{0,1}, {4,5}, {8,9}, {12,13}, {16,17}, {20,21}} |

The second row assumes that the two hardware threads of core k are numbered 2k and 2k+1; check the actual numbering on your machine with lstopo.
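To check an interval by hand, it can be expanded into the explicit place list. The function below, `expand_places`, is a hypothetical bash helper, not part of any OpenMP tool. It assumes the OpenMP semantics in which the second number is the count of places and the third is the offset in hardware thread ids between consecutive places.

```shell
#!/usr/bin/env bash
# expand_places START WIDTH COUNT STRIDE
# Expands the interval notation {START:WIDTH}:COUNT:STRIDE into an explicit
# place list (hypothetical helper for illustration only).
expand_places() {
  local start=$1 width=$2 count=$3 stride=$4
  local out="" place p t
  for (( p = 0; p < count; p++ )); do
    place=""
    for (( t = 0; t < width; t++ )); do
      # hardware thread t of place p, comma-separated within the place
      place+="${place:+,}$(( start + p * stride + t ))"
    done
    out+="${out:+,}{$place}"
  done
  printf '%s\n' "$out"
}

expand_places 0 1 12 2   # {0:1}:12:2
expand_places 0 2 6 4    # {0:2}:6:4
```

The largest id printed must be smaller than the number of hardware threads on the machine; otherwise the interval exceeds your system's bounds.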

You can also list the places explicitly, separated by commas. Say there are 8 cores available with one hardware thread each, and you would like to run your application on the first four cores, one place per core. You could then define:

$ export OMP_PLACES="{0},{1},{2},{3}"

Note that {0,1,2,3} would instead define a single place containing all four hardware threads.
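A minimal bash sketch of an explicit place list. Quoting the value keeps the shell from interpreting the braces and commas, and the value must not contain spaces unless it is quoted.

```shell
#!/usr/bin/env bash
# Four places with one hardware thread each (cores 0-3 on a machine with one
# hardware thread per core).
export OMP_PLACES="{0},{1},{2},{3}"

# By contrast, "{0,1,2,3}" would be ONE place with four hardware threads:
# a thread bound to it could still move among cores 0-3.
printenv OMP_PLACES
```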

OMP_PROC_BIND

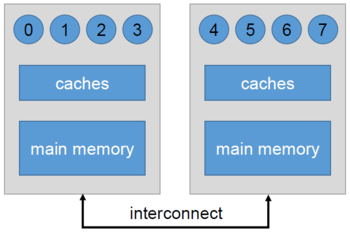

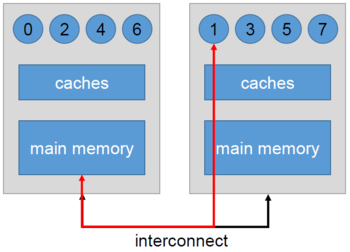

Once OMP_PLACES is set, OMP_PROC_BIND defines how the threads are assigned to those places. This is especially useful on NUMA systems, where a thread running far from its data has to access remote memory, which can slow your application down significantly. If OMP_PROC_BIND is not set, whether threads are bound at all is implementation defined; when they are not bound, the operating system is free to place and migrate them across NUMA nodes and cores.
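On a NUMA machine it is usually worth measuring more than one policy, e.g. close (threads packed onto neighbouring places) against spread (threads distributed over the whole machine). A minimal bash sketch that sets the variables for a single command only, so the shell's own environment stays unchanged; `env` is used here as a stand-in for your application and simply prints the OpenMP variables it receives.

```shell
#!/usr/bin/env bash
# Variables written in front of a command apply to that command only.
for bind in close spread; do
  echo "== OMP_PROC_BIND=$bind"
  OMP_PLACES=cores OMP_PROC_BIND=$bind env | grep '^OMP_'
done
```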

| Value | Function |
|---|---|
| true | the threads are bound to places and should not be moved |
| false | the threads are not bound and can be moved |
| master | all threads of the team are placed on the same place as the master thread |
| close | the threads are placed on places close to the master's place, in order: e.g. with OMP_PLACES=threads, if the master occupies hardware thread 0, worker 1 is placed on hardware thread 1, worker 2 on hardware thread 2, and so on |
| spread | the threads are spread as evenly as possible across the available places, maximizing the distance between neighbouring threads |
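The policies in the table can be illustrated with a simplified model. The bash function `predict_binding` below is hypothetical and covers only the simple case: T threads on P places with T at most P, P divisible by T, and the master on place 0. Under those assumptions, the OpenMP rules reduce to: close puts thread i on place i, and spread splits the places into T equal subpartitions and puts thread i on the first place of subpartition i. Real runtimes follow the full rules in the OpenMP specification.

```shell
#!/usr/bin/env bash
# predict_binding POLICY NTHREADS NPLACES  (hypothetical, simplified model)
predict_binding() {
  local policy=$1 nthreads=$2 nplaces=$3 i place
  if (( nthreads < 1 || nthreads > nplaces || nplaces % nthreads != 0 )); then
    echo "sketch requires 1 <= nthreads <= nplaces and nplaces % nthreads == 0" >&2
    return 1
  fi
  for (( i = 0; i < nthreads; i++ )); do
    case $policy in
      master) place=0 ;;                               # all on the master's place
      close)  place=$i ;;                              # consecutive places
      spread) place=$(( i * (nplaces / nthreads) )) ;; # first place of subpartition i
      *) echo "unknown policy: $policy" >&2; return 1 ;;
    esac
    echo "thread $i -> place $place"
  done
}

predict_binding spread 4 8
```

With 4 threads on 8 places, spread leaves one unused place between neighbouring threads, whereas close would use places 0 to 3.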