NUMA

NUMA (short for non-uniform memory access) is a memory architecture that is popular in HPC. A typical cluster consists of hundreds of nodes, and each individual node is a NUMA system.

General

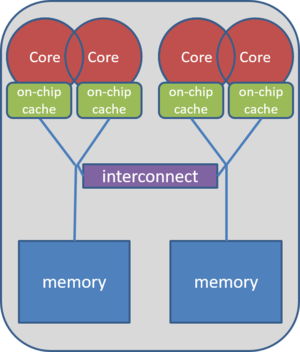

Desktop computers normally consist of one motherboard, one CPU die (with several cores) and one main memory. A NUMA system, in contrast, consists of one motherboard with several sockets, each of which holds one CPU die. Each socket is directly attached to its own part of the main memory, which is called local to that socket. A NUMA system is still a shared memory system, which means that every core on every socket can access every part of the main memory. However, accessing a non-local part of the main memory takes longer because the access has to go through a special interconnect between the sockets. The access time therefore depends on where the data resides (hence the name NUMA).
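To see how sockets and memory parts appear on a real machine, the following minimal sketch lists the NUMA nodes that Linux exposes under `/sys/devices/system/node`. It assumes a Linux system with sysfs and contiguous node numbers starting at 0; the function names `count_numa_nodes` and `print_numa_cpus` are illustrative. Note that a NUMA node as reported by the operating system does not always correspond to exactly one socket.

```c
#include <stdio.h>
#include <unistd.h>

/* Counts NUMA nodes by probing the sysfs entries node0, node1, ...
   Assumption: nodes are numbered contiguously from 0.
   Returns 0 if sysfs exposes no NUMA information (e.g. not Linux). */
int count_numa_nodes(void)
{
    char path[64];
    int n = 0;
    for (;;) {
        snprintf(path, sizeof path, "/sys/devices/system/node/node%d", n);
        if (access(path, F_OK) != 0)
            break;              /* first missing entry ends the search */
        n++;
    }
    return n;
}

/* Prints the list of CPU cores that are local to each NUMA node. */
void print_numa_cpus(void)
{
    int nodes = count_numa_nodes();
    for (int i = 0; i < nodes; i++) {
        char path[80], line[256];
        snprintf(path, sizeof path,
                 "/sys/devices/system/node/node%d/cpulist", i);
        FILE *f = fopen(path, "r");
        if (f == NULL)
            continue;           /* skip nodes without a readable cpulist */
        if (fgets(line, sizeof line, f) != NULL)
            printf("node %d: CPUs %s", i, line);
        fclose(f);
    }
}
```

Calling `print_numa_cpus()` from `main` prints one line per node; on a machine without NUMA information it prints nothing.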

Advantages

NUMA has replaced the older SMP (symmetric multiprocessing, sometimes also called UMA) in most HPC clusters for several reasons:

- NUMA systems can contain more CPU cores.

- NUMA systems can have a larger main memory.

- NUMA systems can achieve a higher aggregate memory bandwidth.

Without NUMA, all cores share a single main memory, and only a limited number of cores and memory modules can be placed close to it. In NUMA systems, the main memory is split into several parts, each attached to its own socket. This allows more cores and more main memory overall. The bandwidth advantage is an inherent property of the design: as long as all cores access only their local main memory, every socket can access its own part of the main memory simultaneously, each at its full local bandwidth.
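As a rough, idealized illustration of the bandwidth argument (the symbols $S$ and $B_{\text{local}}$ and the numbers below are assumptions for illustration, not measurements): with $S$ sockets that each reach a local memory bandwidth $B_{\text{local}}$, and all cores accessing only local memory, the aggregate peak bandwidth is

$$B_{\text{total}} = S \cdot B_{\text{local}}$$

For a hypothetical node with $S = 2$ and $B_{\text{local}} = 100\,\text{GB/s}$, this gives $B_{\text{total}} = 200\,\text{GB/s}$. Any traffic that has to cross the interconnect lowers the bandwidth that is actually achieved.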

Pitfalls

Due to the more complex design of NUMA, there are two pitfalls for a programmer who is unaware of the NUMA architecture. Both may result in code that does not scale well, and both are caused by extensive use of the interconnect, which can become a huge bottleneck.

The first problem is thread migration. In general, the operating system is allowed to move threads (or processes) between cores if it detects that the cores have different workloads. This can improve load balancing, but each migration has a cost, and on NUMA systems it is usually unwanted: if a thread that works on data in its socket's local main memory gets moved to a different socket, it is separated from its data. From then on, the migrated thread has to use the slow interconnect for every access to that data. The solution to this problem is pinning, which binds each thread to a fixed core or set of cores.
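With OpenMP, pinning is commonly requested through the standard environment variables `OMP_PROC_BIND` and `OMP_PLACES` (for example `OMP_PROC_BIND=close` and `OMP_PLACES=cores`). The following minimal sketch only checks what the runtime reports; it assumes a compiler and runtime that support OpenMP 4.5, and the function names `threads_are_bound` and `print_thread_places` are illustrative. Compiled without OpenMP, it falls back to a single unbound thread.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Returns 1 if the OpenMP runtime reports an active binding policy,
   0 otherwise (also 0 when compiled without OpenMP support). */
int threads_are_bound(void)
{
#ifdef _OPENMP
    return omp_get_proc_bind() != omp_proc_bind_false;
#else
    return 0;
#endif
}

/* Prints the place (as defined by OMP_PLACES) each thread runs in.
   omp_get_place_num() returns -1 for a thread that is not bound. */
void print_thread_places(void)
{
#ifdef _OPENMP
    #pragma omp parallel
    {
        #pragma omp critical
        printf("thread %d runs in place %d of %d\n",
               omp_get_thread_num(),
               omp_get_place_num(),
               omp_get_num_places());
    }
#else
    printf("compiled without OpenMP: one thread, no binding\n");
#endif
}
```

If `threads_are_bound()` returns 0, the operating system is still free to migrate the threads between sockets.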

The second problem is data placement. If the programmer allocates and initializes memory without regard to the NUMA architecture, for example in a single thread, the data may end up in the memory of one socket, and all threads located on the other sockets have to use the interconnect. The solution builds on the so-called first-touch policy: since the operating system is aware of the NUMA architecture, it places each memory page in the memory local to the thread that first "touches" (accesses) it.

The following example shows a simple NUMA-aware array addition:

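    // First touch: each thread initializes the chunk it will work on later.
    #pragma omp parallel for schedule(static)
    for (int i = 0; i < N; i++)
    {
        a[i] = 0.0;
        b[i] = i;
    }

    // Same static schedule, so each thread gets the indices it touched first.
    #pragma omp parallel for schedule(static)
    for (int i = 0; i < N; i++)
    {
        a[i] = a[i] + b[i];
    }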

Assuming thread affinity (pinning) is set up correctly and both loops distribute their iterations across the threads in the same way, the first loop places the arrays a and b in the different parts of the main memory such that each participating thread is local to its own chunk of data. The addition in the second loop then only needs to access local data.
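For completeness, here is a self-contained sketch of the same idea as two functions. It assumes that the arrays come from `malloc` and have not been written to before the parallel initialization, so that the first touch really happens inside the parallel loop; the function names `init_first_touch` and `add_arrays` are illustrative. Initializing the arrays serially (for example with `memset` in the main thread) would instead place the touched pages near that single thread.

```c
#include <stdlib.h>

/* First touch: every thread writes the part of a and b that it will
   process later, so the pages are placed in that thread's local memory. */
void init_first_touch(double *a, double *b, long n)
{
    #pragma omp parallel for schedule(static)
    for (long i = 0; i < n; i++) {
        a[i] = 0.0;
        b[i] = (double)i;
    }
}

/* Uses the same static schedule as the initialization, so each thread
   works on the indices it touched first. */
void add_arrays(double *a, const double *b, long n)
{
    #pragma omp parallel for schedule(static)
    for (long i = 0; i < n; i++)
        a[i] = a[i] + b[i];
}
```

A caller allocates both arrays with `malloc(n * sizeof(double))`, calls `init_first_touch` and then `add_arrays`. With GCC, the code can be built with `-fopenmp`; without that flag the pragmas are ignored and the loops run serially with the same result.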

Further Reading

https://software.intel.com/en-us/articles/optimizing-applications-for-numa