Difference between revisions of "Parallel Programming"

Revision as of 10:49, 29 March 2018

To solve a problem faster, the work is executed in parallel, as mentioned in Getting Started. To achieve this, one usually uses either a Shared Memory or a Distributed Memory programming model.

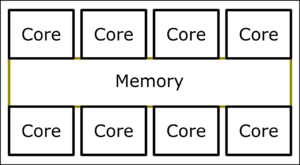

Shared Memory programming works like communication via a pin board. There is one shared memory (the pin board in the analogy) where everybody can see what everybody else is doing, how far they have gotten, and which results they have produced (for example, "the bathroom is already clean"). As in the physical world, there are logistical limits on how many people can use the memory (pin board) efficiently and on how big it can be.

In the computer this translates to multiple cores having equal access to the same memory, as depicted. The advantage is that there is generally very little communication overhead: every core can read from and write to every memory location, so the communication is implicit. Furthermore, parallelising an existing sequential (= not parallel) program is commonly straightforward, provided the underlying problem allows parallelisation. As can be seen in the picture, it is not practical to attach more and more cores to the same memory, so this paradigm is limited by how many cores fit into one computer (a few hundred is a good estimate).

This paradigm is implemented by e.g. OpenMP (Open Multi-Processing).
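The shared-memory idea can be illustrated with a minimal sketch using threads from Python's standard library. This is not OpenMP; the function name `shared_memory_sum` and the way the work is split are assumptions made for illustration. All threads see the same `data` and `partial` lists, so no messages are exchanged. Note that CPython's global interpreter lock prevents a real speed-up for this kind of CPU-bound work, so the sketch shows the programming model only.

```python
import threading


def shared_memory_sum(data, n_threads=4):
    """Sum `data` with several threads that all share the same memory."""
    # Shared "pin board": every thread can see and write to this list.
    partial = [0] * n_threads

    def work(tid):
        # Each thread reads its share of the shared input and writes the
        # result into its own slot, so no two threads write the same slot.
        partial[tid] = sum(data[tid::n_threads])

    threads = [threading.Thread(target=work, args=(tid,))
               for tid in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # wait until every thread has posted its result

    # The main thread simply reads the results; nothing was sent explicitly.
    return sum(partial)


if __name__ == "__main__":
    print(shared_memory_sum(list(range(1000))))
```

Giving each thread its own slot in `partial` avoids two threads updating the same location at once, which would otherwise require a lock.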

Distributed Memory

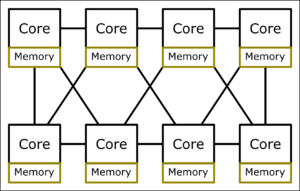

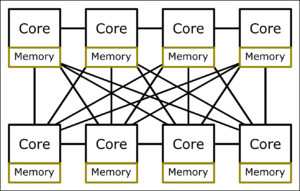

Distributed Memory is similar to the way multiple humans work on problems together: every process 'works' on its own and can communicate with the others by sending messages (talking and listening).

In a computer or a cluster of computers, every core works on its own and has a way (e.g. the Message Passing Interface (MPI)) to communicate with the other cores. This messaging can happen between multiple cores within a CPU, use a high-speed network between the computers (nodes) of a supercomputer, or theoretically even happen over the internet. Sending and receiving messages explicitly is often harder for the developer to implement and sometimes even requires a major rewrite or restructuring of existing code. However, it has the advantage that it can be scaled to more computers (nodes), since every process has its own memory and can communicate with the other processes over MPI. The limiting factor is the speed and characteristics of the physical network connecting the nodes.
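The explicit send and receive steps can be sketched with separate processes from Python's standard library. This is not MPI; the names `worker` and `distributed_sum` are hypothetical, and the sketch assumes a POSIX system where the "fork" start method is available. Each worker process has its own private memory and learns about its share of the data only through a message.

```python
import multiprocessing as mp


def worker(conn):
    """Runs in a separate process with its own private memory."""
    chunk = conn.recv()      # explicit receive: wait for work from the parent
    conn.send(sum(chunk))    # explicit send: return the partial result
    conn.close()


def distributed_sum(data, n_workers=4):
    """Sum `data` by sending one share to each worker process."""
    # Assumption: the "fork" start method exists (Linux, macOS).
    ctx = mp.get_context("fork")
    links = []
    for rank in range(n_workers):
        parent_end, child_end = ctx.Pipe()
        proc = ctx.Process(target=worker, args=(child_end,))
        proc.start()
        child_end.close()  # the parent keeps only its own end of the pipe
        parent_end.send(data[rank::n_workers])  # message: this worker's share
        links.append((proc, parent_end))

    total = 0
    for proc, parent_end in links:
        total += parent_end.recv()  # message: the worker's partial sum
        proc.join()
        parent_end.close()
    return total


if __name__ == "__main__":
    print(distributed_sum(list(range(1000))))
```

Compared with a shared-memory version, the data has to be split and shipped explicitly, which is the extra restructuring effort described above.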

The communication pattern is depicted with a sparse and a dense network. In a sparse network, messages sometimes have to be forwarded by several cores before they reach their destination. The more connections there are, the less forwarding is needed, which reduces average latency and overhead and increases throughput and scalability.
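The effect of the number of connections on forwarding can be made concrete by counting hops. The following sketch assumes two example topologies that are not taken from the figures: a ring as the sparse network and a fully connected graph as the dense one. The helper names `average_hops`, `ring` and `fully_connected` are hypothetical.

```python
from collections import deque


def average_hops(neighbours):
    """Mean shortest-path length (in hops) over all ordered node pairs."""
    total, pairs = 0, 0
    for src in neighbours:
        # Breadth-first search gives the minimum hop count to each node.
        dist = {src: 0}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            for nxt in neighbours[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        total += sum(dist.values())
        pairs += len(dist) - 1  # every reachable node except src itself
    return total / pairs


def ring(n):
    """Sparse example: each node is linked to its two direct neighbours."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def fully_connected(n):
    """Dense example: each node is linked to every other node."""
    return {i: [j for j in range(n) if j != i] for i in range(n)}


if __name__ == "__main__":
    print("ring:", average_hops(ring(8)))
    print("fully connected:", average_hops(fully_connected(8)))
```

In the fully connected case every message arrives in a single hop, while in the ring most messages pass through intermediate nodes, so the average hop count grows with the number of nodes.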

This way the communication can be designed carefully to exploit the architecture to the fullest extent. While it has some non-negligible overhead, it can theoretically scale to very large systems, limited only by the network connecting the nodes.