Difference between revisions of "Parallel Programming"

| (23 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

| − | In order to solve a problem faster, work can | + | [[Category:HPC-User]] |

| + | In order to solve a problem faster, or to compute larger data sets as otherwise possible, work can often be dissected in pieces and executed in parallel, as mentioned in [[Getting_Started#Parallel_Programming_or_.22How-To-Use-More-Than-One-Core.22|Getting Started]]. | ||

| + | |||

| + | '''There are very many kinds of parallelization.''' | ||

| + | |||

| + | In the ideal case doubling the number of execution units the runtime is cut in half. There are several reasons why the ideal case usually is not met, a few of them are: | ||

| + | * Overhead because of synchronization and communication. | ||

| + | * Bottlenecks in the parallel computer design, e.g. memory or network bandwidth limitations. | ||

| + | * Load imbalances; one processor has more work than the others causing them to wait. | ||

| + | * Serial parts in the program which may not be parallelized at all [[Amdahl's_Law|Amdahl's Law]]. | ||

| + | |||

| + | All of the parallelization approaches can be coarsely classified to be of [[#Distributed_Memory|Distributed Memory]] (DM) or [[#Shared_Memory|Shared Memory]] (SM) class. The Distributed Memory paradigms owns the ultimate feature to work beyond frontiers of a physical node / operating system instance, opening the possibility to utilize much more of (potentially cheaper) hardware. However the used network is crucial for the DM performance and scalability. Note that a Distributed Memory approach typically can be used also on a Shared Memory system. Through unremittingly development some approaches previously known to be DM get features related to SM, and on the other side attemps are made to make SM approaches be runnable over DM clusters, making a clean dichotomy complicated up to impossible in many cases. | ||

| + | |||

| + | In the context of HPC those well-known approaches can be itemised (the list is not final!): | ||

| + | * [[#Distributed_Memory| Distributed Memory]] | ||

| + | ** [[MPI|Message Passing Interface]] (MPI) | ||

| + | ** [https://en.wikipedia.org/wiki/Partitioned_global_address_space PGAS] languages ([https://software.intel.com/content/www/us/en/develop/articles/intel-c-compiler-for-linux-building-upc-to-utilize-the-intel-c-compiler.html UPC], [https://software.intel.com/content/www/us/en/develop/articles/distributed-memory-coarray-fortran-with-the-intel-fortran-compiler-for-linux-essential.html Coarray Fortran]) | ||

| + | ** accelerators and other devices, like [[Building_LLVM/Clang_with_OpenMP_Offloading_to_NVIDIA_GPUs|GPGPU]] - latest [[OpenMP|OpenMP]] standards introduce offloading | ||

| + | * [[#Shared_Memory|Shared Memory]] | ||

| + | ** [[OpenMP|OpenMP]] (not to be mixed with [https://www.open-mpi.org/ Open MPI] wich is an MPI implementation) | ||

| + | ** The Pthreads library | ||

| + | ** Auto-parallelization (compiler-introduced threading) | ||

| + | ** [[Computer_architecture_for_software_developers|Vectorisation]], pipelinig et alii | ||

| + | |||

| + | |||

| + | Following is a short description of the basic concept of Shared Memory Systems and Distributed Memory Systems. Information on how to start/run/use an exisiting parallel code can be found in the [[OpenMP]] or [[MPI]] article. | ||

| + | |||

| + | |||

| + | __TOC__ | ||

| Line 5: | Line 33: | ||

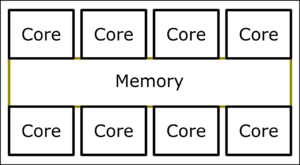

[[File:Shared_Memory.png|thumb|300px|Schematic of shared memory]] | [[File:Shared_Memory.png|thumb|300px|Schematic of shared memory]] | ||

| − | Shared Memory programming works like the communication of multiple people, who are cleaning a house, via a pin board. There is one shared memory (pin-board in the analogy) where everybody can see what everybody is doing and how far they have gotten or which results (the bathroom is already clean) they got. Similar to the physical world, there are logistical limits on many parallel units (people) can use the memory (pin board) efficiently and how big it can be. | + | Shared Memory programming works like the communication of multiple people, who are cleaning a house, via a pin board. There is one shared memory (pin-board in the analogy) where everybody can see what everybody is doing and how far they have gotten or which results (the bathroom is already clean) they got. Similar to the physical world, there are logistical limits on how many parallel units (people) can use the memory (pin board) efficiently and how big it can be. |

| − | In the computer this translates to multiple cores having joint access to the same shared memory as depicted. This has the advantage, that there is generally very little communication overhead, since every core can write to every memory location and the communication is therefore implicit. Futhermore parallelising an existing sequential (= not parallel) program is commonly straight forward and very easy to | + | In the computer this translates to multiple cores having joint access to the same shared memory as depicted. This has the advantage, that there is generally very little communication overhead, since every core can write to every memory location and the communication is therefore implicit. (However due to [[NUMA]] access may not be equally fast from any core to any memory location.) Futhermore parallelising an existing sequential (= not parallel) program is commonly straight forward and very easy to start, if the underlying problem allows parallelisation at all. As can be seen in the picture, it is not practical to attach more and more cores to the same memory, because it can only serve a limited number of cores with data efficiently at the same time. Therefore this paradigm is limited by how many cores can fit into one computer (a few hundred is a good estimate). |

| − | |||

| − | |||

| + | For parallelizing applications, which plan on running on Shared Memory systems, the explicit distribution of work over the processors by compiler directives [[OpenMP|Open Memory Programming (OpenMP)]] is commonly used in the HPC community. Autoparallelization (automatic distribution of loop iterations over several processors) by the compiler is worth a try for modest codes - it is just a compiler parameter which may give you (or may not) some speedup 'for free'. | ||

== Distributed Memory == | == Distributed Memory == | ||

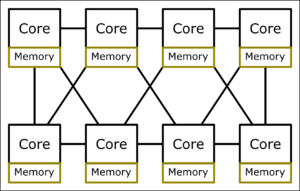

| − | [[File:Distributed_Memory_sparse.png|thumb|300px|Schematic of distributed memory with sparse network]] | + | [[File:Distributed_Memory_sparse.png|thumb|300px|Schematic of a distributed memory system with a sparse network]] |

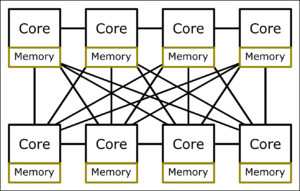

| − | [[File:Distributed_Memory_dense.png|thumb|300px|Schematic of distributed memory with dense network]] | + | [[File:Distributed_Memory_dense.png|thumb|300px|Schematic of a distributed memory system with a dense network]] |

Distributed Memory is similar to the way how multiple humans interact while solving problems: every process (person) 'works' on it's own and can communicate with the others by sending messages (talking and listening). | Distributed Memory is similar to the way how multiple humans interact while solving problems: every process (person) 'works' on it's own and can communicate with the others by sending messages (talking and listening). | ||

| − | In a computer or a cluster of computers every core works on it's own and has a way (e.g. the [[MPI|Message Passing Interface (MPI)]]) to communicate with the other cores. This messaging can happen within a CPU between multiple cores, utilize a high speed network between the computers (nodes) of a supercomputer, or theoretically even happen over the internet. This sending and receiving of messages is often harder to implement for the developer and sometimes even requires a major rewrite/restructure of existing code. However, it has the advantage, that it can be scaled to more computers (nodes), since every process has it's own memory and can communicate over [[MPI]] with the other processes. The limiting factor here is the speed and characteristics of the physical network, connecting the different nodes. | + | In a computer or a cluster of computers every core works on it's own and has a way (e.g. the [[MPI|Message Passing Interface (MPI)]]) to communicate with the other cores. This messaging can happen within a CPU between multiple cores, utilize a high speed network between the computers (nodes) of a supercomputer, or theoretically even happen over the internet. This sending and receiving of messages is often harder to implement for the developer and sometimes even requires a major rewrite/restructure of existing code or even modifications on algorithms. However, it has the advantage, that it can be scaled to more computers (nodes), since every process has it's own memory and can communicate over [[MPI]] with the other processes. The limiting factor here is the speed and characteristics of the physical network, connecting the different nodes. |

| + | |||

| + | The communication pattern is depicted with a sparse and a dense network. In a sparse network, messages have to be forwarded by sometimes multiple cores to reach their destination. The more connections there are, the lower this amount of forwarding gets, which reduces average latency and overhead and increases throughput and scalability. | ||

| − | + | Since every communication is explicitly coded, this communication pattern can be designed carefully to exploit the architecture and the available nodes to their fullest extend. It follows, that in theory the application can scale as high as the underlying problem allows, being only limited by the network connecting the nodes and the overhead for sending/receiving messages. | |

| − | + | For large applications the '''hybrid parallelization''' approach, a combination of coarse-grained parallelism with MPI and underlying fine-grained parallelism with OpenMP, might be attractive, in order to use as many processors efficiently as possible. | |

== Should I use Distributed Memory or Shared Memory? == | == Should I use Distributed Memory or Shared Memory? == | ||

| − | This really depends on the problem at hand. If the problem is parallelizable, the required computing power is a good indicator. When a few to a hundred cores should suffice, [[OpenMP]] is (for existing codes) commonly the easiest alternative. However, if thousands or even millions of cores are required, there is not really a way around [[MPI]]. To give a better overview, different pros and cons are listed in the table below: | + | This really depends on the problem at hand. If the problem is parallelizable, the required computing power is a good indicator. When a few to a hundred cores should suffice, [[OpenMP]] is (for existing codes) commonly the easiest alternative. In many cases only a few lines of OpenMP codes are needed, whereas MPI is a lot more tedious and often require a whole redesign of the program or either used algorithm. However, if thousands or even millions of cores are required, or if the data set does not fit into the memory of a biggest single node available, there is not really a way around [[MPI]]. A combination of MPI and OpenMP may be advantageous, especially for applications with more than one level of parallelism. To give a better overview, different pros and cons are listed in the table below: |

| − | {| class="wikitable" style=" | + | {| class="wikitable" style="" |

!colspan="2" | Shared Memory ([[OpenMP]]) || colspan="2"| Distributed Memory ([[MPI]]) | !colspan="2" | Shared Memory ([[OpenMP]]) || colspan="2"| Distributed Memory ([[MPI]]) | ||

|- | |- | ||

! Pros || Cons || Pros || Cons | ! Pros || Cons || Pros || Cons | ||

|- | |- | ||

| − | | Easy to | + | | Easy to start || scales only to '''1''' node || scales across multiple nodes || harder to implement |

|- | |- | ||

| shared variables || inherent data races || no inherent data races || no shared variables | | shared variables || inherent data races || no inherent data races || no shared variables | ||

|- | |- | ||

| − | | low overhead || || rowspan="2"| each MPI process can utilize OpenMP, | + | | low-overhead apps ''possible'' || typically bad performace of ''first attempt'' || rowspan="2"| each MPI process can utilize OpenMP, |

resulting in a hybrid application | resulting in a hybrid application | ||

| some overhead | | some overhead | ||

|- | |- | ||

| − | | | + | | || || needs a library, complicated start-up |

|} | |} | ||

| Line 47: | Line 76: | ||

== References == | == References == | ||

| − | + | Difference between SM und DM in a concrete example in [[Media:OpenMP_and_MPI_for_Dummies-C.pdf | C (PDF)]] and [[Media:OpenMP_and_MPI_for_Dummies-Fortran.pdf | Fortran (PDF)]] | |

Latest revision as of 16:23, 6 April 2022

In order to solve a problem faster, or to compute larger data sets as otherwise possible, work can often be dissected in pieces and executed in parallel, as mentioned in Getting Started.

There are very many kinds of parallelization.

In the ideal case doubling the number of execution units the runtime is cut in half. There are several reasons why the ideal case usually is not met, a few of them are:

- Overhead because of synchronization and communication.

- Bottlenecks in the parallel computer design, e.g. memory or network bandwidth limitations.

- Load imbalances; one processor has more work than the others causing them to wait.

- Serial parts in the program which may not be parallelized at all Amdahl's Law.

All of the parallelization approaches can be coarsely classified to be of Distributed Memory (DM) or Shared Memory (SM) class. The Distributed Memory paradigms owns the ultimate feature to work beyond frontiers of a physical node / operating system instance, opening the possibility to utilize much more of (potentially cheaper) hardware. However the used network is crucial for the DM performance and scalability. Note that a Distributed Memory approach typically can be used also on a Shared Memory system. Through unremittingly development some approaches previously known to be DM get features related to SM, and on the other side attemps are made to make SM approaches be runnable over DM clusters, making a clean dichotomy complicated up to impossible in many cases.

In the context of HPC those well-known approaches can be itemised (the list is not final!):

- Distributed Memory

- Message Passing Interface (MPI)

- PGAS languages (UPC, Coarray Fortran)

- accelerators and other devices, like GPGPU - latest OpenMP standards introduce offloading

- Shared Memory

- OpenMP (not to be mixed with Open MPI wich is an MPI implementation)

- The Pthreads library

- Auto-parallelization (compiler-introduced threading)

- Vectorisation, pipelinig et alii

Following is a short description of the basic concept of Shared Memory Systems and Distributed Memory Systems. Information on how to start/run/use an exisiting parallel code can be found in the OpenMP or MPI article.

Shared Memory programming works like the communication of multiple people, who are cleaning a house, via a pin board. There is one shared memory (pin-board in the analogy) where everybody can see what everybody is doing and how far they have gotten or which results (the bathroom is already clean) they got. Similar to the physical world, there are logistical limits on how many parallel units (people) can use the memory (pin board) efficiently and how big it can be.

In the computer this translates to multiple cores having joint access to the same shared memory as depicted. This has the advantage, that there is generally very little communication overhead, since every core can write to every memory location and the communication is therefore implicit. (However due to NUMA access may not be equally fast from any core to any memory location.) Futhermore parallelising an existing sequential (= not parallel) program is commonly straight forward and very easy to start, if the underlying problem allows parallelisation at all. As can be seen in the picture, it is not practical to attach more and more cores to the same memory, because it can only serve a limited number of cores with data efficiently at the same time. Therefore this paradigm is limited by how many cores can fit into one computer (a few hundred is a good estimate).

For parallelizing applications, which plan on running on Shared Memory systems, the explicit distribution of work over the processors by compiler directives Open Memory Programming (OpenMP) is commonly used in the HPC community. Autoparallelization (automatic distribution of loop iterations over several processors) by the compiler is worth a try for modest codes - it is just a compiler parameter which may give you (or may not) some speedup 'for free'.

Distributed Memory

Distributed Memory is similar to the way how multiple humans interact while solving problems: every process (person) 'works' on it's own and can communicate with the others by sending messages (talking and listening).

In a computer or a cluster of computers every core works on it's own and has a way (e.g. the Message Passing Interface (MPI)) to communicate with the other cores. This messaging can happen within a CPU between multiple cores, utilize a high speed network between the computers (nodes) of a supercomputer, or theoretically even happen over the internet. This sending and receiving of messages is often harder to implement for the developer and sometimes even requires a major rewrite/restructure of existing code or even modifications on algorithms. However, it has the advantage, that it can be scaled to more computers (nodes), since every process has it's own memory and can communicate over MPI with the other processes. The limiting factor here is the speed and characteristics of the physical network, connecting the different nodes.

The communication pattern is depicted with a sparse and a dense network. In a sparse network, messages have to be forwarded by sometimes multiple cores to reach their destination. The more connections there are, the lower this amount of forwarding gets, which reduces average latency and overhead and increases throughput and scalability.

Since every communication is explicitly coded, this communication pattern can be designed carefully to exploit the architecture and the available nodes to their fullest extend. It follows, that in theory the application can scale as high as the underlying problem allows, being only limited by the network connecting the nodes and the overhead for sending/receiving messages.

For large applications the hybrid parallelization approach, a combination of coarse-grained parallelism with MPI and underlying fine-grained parallelism with OpenMP, might be attractive, in order to use as many processors efficiently as possible.

This really depends on the problem at hand. If the problem is parallelizable, the required computing power is a good indicator. When a few to a hundred cores should suffice, OpenMP is (for existing codes) commonly the easiest alternative. In many cases only a few lines of OpenMP codes are needed, whereas MPI is a lot more tedious and often require a whole redesign of the program or either used algorithm. However, if thousands or even millions of cores are required, or if the data set does not fit into the memory of a biggest single node available, there is not really a way around MPI. A combination of MPI and OpenMP may be advantageous, especially for applications with more than one level of parallelism. To give a better overview, different pros and cons are listed in the table below:

| Shared Memory (OpenMP) | Distributed Memory (MPI) | ||

|---|---|---|---|

| Pros | Cons | Pros | Cons |

| Easy to start | scales only to 1 node | scales across multiple nodes | harder to implement |

| shared variables | inherent data races | no inherent data races | no shared variables |

| low-overhead apps possible | typically bad performace of first attempt | each MPI process can utilize OpenMP,

resulting in a hybrid application |

some overhead |

| needs a library, complicated start-up | |||

So in sum it really depends and if you are unsure, have a chat with your local HPC division, check out the example in the References or head over to the Support.

References

Difference between SM und DM in a concrete example in C (PDF) and Fortran (PDF)