Resource planning

Resource planning is an essential part of developing and running parallel programs.

General

When running a program that uses OpenMP or MPI, one has to consider which resources are needed and how many of them, especially when submitting a job. As resources are limited, reserving (and thereby blocking) two nodes while running on only one of them needlessly delays other jobs.

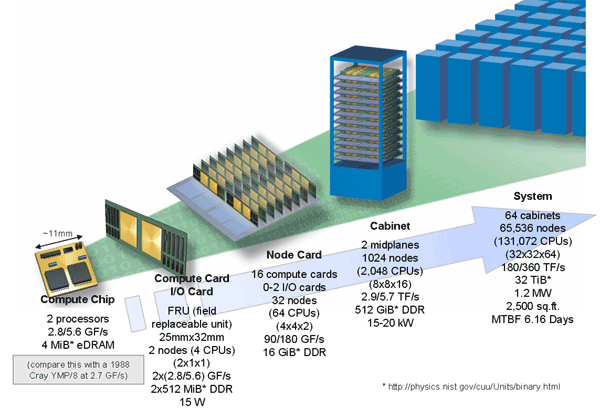

A basic understanding of the package hierarchy of supercomputers is needed. As an example the following picture shows the package hierarchy of the IBM Blue Gene.

Resources

The following resources typically need to be considered. Specific programs may require additional resources.

Number of cores

When deciding on the number of cores to use, the first step is to look at the hardware and architecture that the program will run on (see the site-specific documentation), especially the number of cores per node. Most jobs are run exclusively, meaning the entire node is blocked regardless of how many cores are actually used. One should therefore try to utilize the entire node. For jobs requiring more than one node, one should also aim to distribute the work evenly across the nodes. Finally, one should consider the parallel efficiency of the program, i.e. how many cores it can utilize efficiently.
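
The considerations above can be turned into a quick back-of-the-envelope check. The following is a minimal sketch, not a scheduler tool: the function names are hypothetical, the figure of 48 cores per node is an assumed example value (the real value comes from the site-specific documentation), and parallel efficiency is taken in the common sense of speedup divided by the number of cores.

```python
def nodes_needed(total_cores: int, cores_per_node: int) -> int:
    """Smallest number of nodes that provides at least total_cores cores."""
    return (total_cores + cores_per_node - 1) // cores_per_node


def idle_cores(total_cores: int, cores_per_node: int) -> int:
    """Cores that are blocked but unused when nodes are allocated exclusively."""
    return nodes_needed(total_cores, cores_per_node) * cores_per_node - total_cores


def parallel_efficiency(t_serial: float, t_parallel: float, cores: int) -> float:
    """Speedup (t_serial / t_parallel) divided by the core count; 1.0 is ideal."""
    return (t_serial / t_parallel) / cores


# Assumed example system with 48 cores per node.
print(nodes_needed(50, 48))                  # 2
print(idle_cores(50, 48))                    # 46
print(parallel_efficiency(100.0, 12.5, 16))  # 0.5
```

In this example, asking for 50 cores blocks a second node of which 46 cores stay idle, so reducing the request to 48 cores or increasing it to 96 would use the allocation far better.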

Memory

The amount of memory needed may vary with the number of cores, depending on whether the program is run with strong or weak scaling. With strong scaling, the input size remains constant as the number of cores increases, so the same work is done in a shorter runtime. With weak scaling, the input size is scaled up with the number of cores, so more work is done in the same runtime. Furthermore, large private variables must be taken into account, e.g. 4 OpenMP threads that each require a private instance of a large array need 4 times the memory for that array. Again, it is important to check the hardware limitations, e.g. the maximum memory per node.
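
As a rough illustration of how these effects add up, the sketch below estimates the memory footprint under simplifying assumptions: the input dominates memory use, weak scaling grows the input linearly with the core count, and each thread holds exactly one private copy of the array. All function names and the example sizes are hypothetical.

```python
def input_memory_gib(base_gib: float, cores: int, scaling: str) -> float:
    """Memory for the input; base_gib is the footprint of the single-core problem."""
    if scaling == "strong":
        return base_gib          # input size stays constant
    if scaling == "weak":
        return base_gib * cores  # input size grows with the core count
    raise ValueError("scaling must be 'strong' or 'weak'")


def private_memory_gib(array_gib: float, threads: int) -> float:
    """Memory for one private copy of an array per OpenMP thread."""
    return array_gib * threads


def fits_on_node(required_gib: float, node_memory_gib: float) -> bool:
    """Check the estimate against the memory available on one node."""
    return required_gib <= node_memory_gib


# 4 threads, each with a private 2 GiB array, plus a 10 GiB shared input.
required = input_memory_gib(10.0, 4, "strong") + private_memory_gib(2.0, 4)
print(required)                      # 18.0
print(fits_on_node(required, 16.0))  # False
```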

Depending on the system and its defaults one may have to alter the stack size manually.
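
For OpenMP programs, the stack size of the threads created by the runtime can be set through the `OMP_STACKSIZE` environment variable, which accepts a size with a unit suffix such as `M`. The sketch below derives such a value from the size of the private data per thread. It assumes that private data is placed on the thread stack and adds an arbitrarily chosen margin of 8 MiB for everything else; the function name and the margin are hypothetical, and the stack limit of the initial thread is usually controlled separately (e.g. via the shell's `ulimit -s`).

```python
def omp_stacksize_value(private_bytes_per_thread: int,
                        margin_bytes: int = 8 * 1024**2) -> str:
    """Return a value for OMP_STACKSIZE in mebibytes, rounded up."""
    mib = 1024**2
    needed = private_bytes_per_thread + margin_bytes
    return f"{(needed + mib - 1) // mib}M"


# Private array of 1000 x 1000 doubles (8 bytes each) per thread.
value = omp_stacksize_value(1000 * 1000 * 8)
print(f"export OMP_STACKSIZE={value}")  # export OMP_STACKSIZE=16M
```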

Special Hardware

If specific hardware is needed, e.g. a GPU, one again has to consider the hardware and architecture of the system to be used (see the site-specific documentation). Please note that certain permissions may be necessary.

Runtime

The estimated runtime usually has no impact on how economically resources are used, as a job that finishes earlier than estimated does not delay the next one. However, depending on the scheduler's strategy, it may strongly influence the waiting time before the job is granted its resources. Longer jobs are typically given a lower priority or are executed only during hours of low workload (e.g. nights, weekends). One should therefore try to estimate the runtime fairly accurately to shorten the wait, without risking termination before completion.
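
One simple way to balance a short wait against the risk of termination is to add a modest safety margin to a measured or extrapolated runtime. The following sketch does this and formats the result as `HH:MM:SS`, a notation many batch systems accept; the function name, the 20 % default margin, and the output format are assumptions and should be adapted to the scheduler actually in use.

```python
def walltime_request(estimated_seconds: int, margin_percent: int = 20) -> str:
    """Estimated runtime plus a safety margin, rounded up, as HH:MM:SS."""
    # Integer arithmetic avoids floating-point rounding surprises.
    total = (estimated_seconds * (100 + margin_percent) + 99) // 100
    hours, rest = divmod(total, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"


print(walltime_request(3 * 3600))  # 03:36:00
print(walltime_request(5400))      # 01:48:00
```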