Computer architecture for software developers


Revision as of 11:58, 3 July 2019

Introduction

Modern computers are still built following the "stored program computing" design. The idea for stored program computers originated during the Second World War, and the first working systems were built in the late forties. Several earlier innovations enabled this machine: the idea of a universal Turing machine (1936), Claude E. Shannon's master's thesis on using arrangements of relays to solve Boolean algebra problems (1937), and Konrad Zuse's anticipation, in two patent applications, that machine instructions could be stored in the same storage used for data (1936).

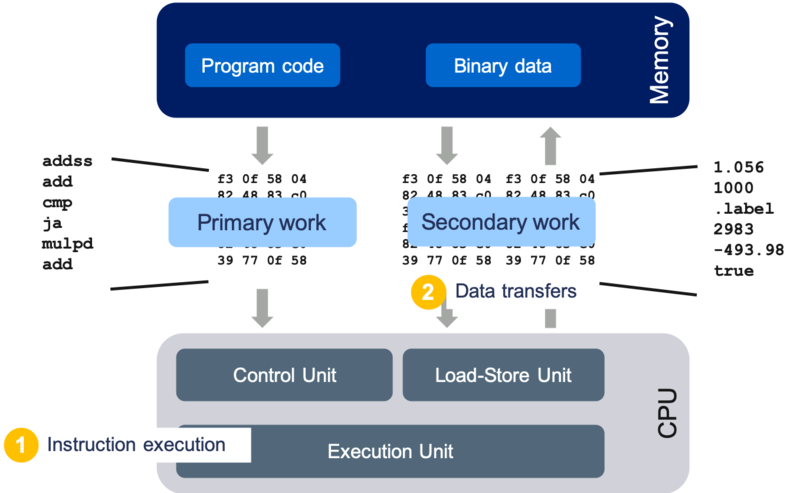

A stored program computer is controlled by instructions. The set of instructions a computer supports is called its instruction set architecture (ISA). Instructions are elementary operations such as adding two numbers, loading data from memory, or jumping to another location in the program code. Instructions are just integer numbers and are stored in a unified memory that also holds the actual data used by a program. A program consists of a sequential stream of instructions loaded from memory that control the hardware. The primary resource (or work) of a computer is therefore instruction execution. Some of those instructions load data from or store data to memory. Transferring data from and to memory is therefore a secondary resource of a computer, triggered by instruction execution. The reason this design was so successful lies in its generic applicability to any problem and the possibility of switching very quickly between different applications. Everything in a computer is built of switches, and as a consequence all numbers are represented in binary format. Because switches only know two states, the operation of a computer is strictly clocked, running at a certain fixed frequency. This generic nature of a programmable machine, together with extreme miniaturisation of the hardware, enabled the application of computers in all aspects of our lives.
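The description above can be made concrete with a toy machine. The following is only a minimal sketch: the five opcodes, the encoding `opcode * 100 + address`, and the single accumulator register are invented for illustration and do not correspond to any real ISA. It shows that instructions are plain integers, that they live in the same memory as the data, and that some instructions trigger data transfers.

```python
# Toy stored-program machine: instructions and data share one memory.
# The opcodes and the encoding (opcode * 100 + address) are hypothetical.
LOAD, ADD, STORE, JUMP, HALT = 1, 2, 3, 4, 5


def run(memory):
    """Execute the program starting at address 0; return the instruction count."""
    pc = 0        # program counter: address of the next instruction
    acc = 0       # single accumulator register
    executed = 0
    while True:
        instruction = memory[pc]                 # fetch: just an integer
        opcode, addr = divmod(instruction, 100)  # decode
        pc += 1
        executed += 1
        if opcode == LOAD:
            acc = memory[addr]       # data transfer from memory
        elif opcode == ADD:
            acc += memory[addr]
        elif opcode == STORE:
            memory[addr] = acc       # data transfer to memory
        elif opcode == JUMP:
            pc = addr
        elif opcode == HALT:
            return executed
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


memory = [
    LOAD * 100 + 5,   # address 0: acc = memory[5]
    ADD * 100 + 6,    # address 1: acc += memory[6]
    STORE * 100 + 7,  # address 2: memory[7] = acc
    HALT * 100,       # address 3: stop
    0,                # address 4: unused
    2,                # address 5: data
    3,                # address 6: data
    0,                # address 7: result
]
count = run(memory)
print(memory[7], count)  # prints "5 4"
```

Loading a different list of integers into `memory` runs a different program on the same "hardware", which is the generic property the text refers to.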

Memory is organised in a linear fashion using so-called addresses: integer numbers that reference a specific memory location. The basic unit is 8 bits, also called a byte.
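As a rough illustration, a Python `bytearray` behaves like a small linear memory in which every index is an address and every element is one byte. The sketch below stores a 32-bit integer at address 4. The little-endian byte order is an assumption made for the example; the text does not prescribe one.

```python
import struct

memory = bytearray(16)   # 16 bytes, addresses 0..15
value = 0x12345678

# Store a 32-bit unsigned integer at address 4.
# "<I" selects little-endian byte order, an assumption for this example.
struct.pack_into("<I", memory, 4, value)

# Every address holds exactly one byte (8 bits).
bytes_at_4 = [memory[address] for address in range(4, 8)]

# Reading the four bytes back as one integer recovers the value.
loaded = struct.unpack_from("<I", memory, 4)[0]

print([hex(b) for b in bytes_at_4], hex(loaded))
```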

Illustration of Stored Program Computers:

The generic nature of stored program computers is also their biggest problem when it comes to speeding up the execution of program code. As program code can be any mix of supported instructions, the properties of software and how it interacts with the hardware can differ vastly. Computer architects have chosen a pragmatic approach to speed up execution: "Make the common case fast". They analyse the software that runs on a computer and come up with improvements for this class of software. This also means that every hardware improvement makes assumptions about the software. Since chips are designed by profit-oriented companies, other influences also have to be considered, e.g. technical opportunities as well as economic and marketing concerns.

In the following, computer architecture is explained at a level that is relevant for software developers to understand the interaction of their software with the hardware.

Core architecture

Clock frequency

Increasing the clock frequency is the basic way of speeding up a stored program computer. Everything works faster and all codes benefit. This was the main driver for performance until 2005. The ability to increase the frequency is strongly connected to instruction level parallelism techniques (see later). There are multiple reasons why vendors are no longer able to increase the frequency, but in the end it boils down to the physical limit on dissipating the heat a chip generates with reasonable technical effort.

While clock frequency increase is no longer a main driver, it is still employed in different ways: on the one hand as one of two features (core count being the second) used to rank processors according to price, and on the other hand in the form of the dynamic overclocking feature present in all modern processors.

Processors have long supported dynamically decreasing the clock to save power. Newer multicore processors are in addition capable of dynamically overclocking beyond the nominal frequency. On Intel processors this feature is called "turbo mode". Turbo mode is a flavour of so-called "dark silicon" optimisations. A chip has a thermal design power (TDP). The TDP is the maximum heat the chip can dissipate. The power a chip consumes depends on the frequency, the square of the voltage it is operated with, and of course the total number of active transistors and wires. For a given frequency there is a minimum voltage the chip can be operated with. If not all cores are used, parts of the chip can be turned off. This gives enough power headroom to increase the voltage and frequency and still stay within the TDP. The overclocking happens dynamically and is controlled by the hardware itself.
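The headroom argument can be sketched with a simple proportional model that follows the dependence stated above: power grows with the number of active cores, the frequency, and the square of the voltage. The function name `relative_power`, the 8-core chip, and all frequency and voltage values are hypothetical. Real chips also have static power and uncore components that this sketch ignores.

```python
def relative_power(active_cores, frequency_ghz, voltage):
    """Relative dynamic power: active cores * frequency * voltage squared."""
    return active_cores * frequency_ghz * voltage ** 2


# Hypothetical 8-core chip. The power budget is taken to be the power
# drawn with all cores active at nominal frequency and voltage.
budget = relative_power(active_cores=8, frequency_ghz=2.0, voltage=1.0)

# Only two cores active, the rest switched off, at a raised frequency
# and voltage (invented numbers).
turbo = relative_power(active_cores=2, frequency_ghz=3.5, voltage=1.2)

headroom = budget - turbo
print(f"budget={budget:.2f} turbo={turbo:.2f} headroom={headroom:.2f}")
```

In this model the two overclocked cores still stay below the budget, even though the higher voltage enters quadratically.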

Many technological advances in processor designs are targeted at staying within the TDP while still reaching high performance. This is reflected in different clock domains for cores and uncore and in special turbo mode clocks that depend on the SIMD width. If a code uses wider SIMD units, more transistors and, more importantly, more wires are active, and the chip can therefore only run at a lower peak frequency.

As a consequence of this clocked operation, a single clock cycle is a common unit of time within processors.
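Converting between cycles and wall-clock time only requires the clock frequency. The sketch below assumes a fixed frequency; the helper names and the values of 2.5 GHz and 200 cycles are made up for illustration.

```python
def cycle_time_ns(frequency_ghz):
    """Duration of one clock cycle in nanoseconds."""
    return 1.0 / frequency_ghz


def cycles_to_ns(cycles, frequency_ghz):
    """Wall-clock time in nanoseconds for a given number of cycles."""
    return cycles * cycle_time_ns(frequency_ghz)


one_cycle = cycle_time_ns(2.5)       # about 0.4 ns at 2.5 GHz
duration = cycles_to_ns(200, 2.5)    # about 80 ns for 200 cycles
print(one_cycle, duration)
```

With dynamic frequency changes such as turbo mode, the same cycle count corresponds to different wall-clock times, which is one reason cycles are the more convenient unit inside the processor.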

Instruction level parallelism

Stored program computers process a sequential instruction stream; the order of the instructions is fixed. Already early in computer history (1956, IBM 7030), computers exploited the opportunity to execute instructions in parallel. To this day it remains a main technology for generating higher performance. A prerequisite for instruction level parallelism is that there are enough independent instructions in the sequential instruction stream. The common metric for quantifying instruction throughput is CPI (cycles per instruction) or its inverse, IPC (instructions per cycle).
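Both metrics follow directly from two counts: the cycles spent and the instructions executed. The counter values in this sketch are invented. On real systems such counts would come from hardware performance counters, which are not covered here.

```python
def cpi(cycles, instructions):
    """Cycles per instruction."""
    return cycles / instructions


def ipc(cycles, instructions):
    """Instructions per cycle, the inverse of CPI."""
    return instructions / cycles


# Hypothetical measurement of a code region.
cycles = 1_500_000
instructions = 2_000_000

region_cpi = cpi(cycles, instructions)   # 0.75
region_ipc = ipc(cycles, instructions)   # about 1.33
print(region_cpi, region_ipc)
```

A CPI below one (an IPC above one) is only possible if more than one instruction is completed in some cycles.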

Instruction pipelining

In instruction pipelining the execution of an instruction is split into a series of sequential steps. Much like an assembly line in car manufacturing, this makes it possible to work on different instructions in the different stages at the same time. Because the execution of an instruction is decomposed into smaller steps, the clock frequency at which the pipeline operates can be increased. The execution of every single instruction still takes the same time, but as soon as the pipeline is full, one instruction is finished every cycle. The number of cycles it takes to execute one single instruction is the instruction latency, while the number of instructions finished per cycle is the instruction throughput. The optimal throughput for a single pipeline is one instruction per cycle (CPI=1).
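The difference between latency and throughput can be illustrated with an idealised cycle count. The sketch assumes that every stage takes exactly one cycle and that the pipeline never stalls. The five stages and 1000 instructions are arbitrary example values.

```python
def unpipelined_cycles(n_instructions, stages):
    """Each instruction passes through all stages before the next one starts."""
    return n_instructions * stages


def pipelined_cycles(n_instructions, stages):
    """The first instruction needs `stages` cycles to fill the pipeline;
    after that one instruction finishes every cycle (no stalls assumed)."""
    if n_instructions == 0:
        return 0
    return stages + (n_instructions - 1)


stages = 5            # instruction latency in cycles
n = 1000

sequential = unpipelined_cycles(n, stages)   # 5000 cycles
pipelined = pipelined_cycles(n, stages)      # 1004 cycles
effective_cpi = pipelined / n                # approaches 1 for large n
print(sequential, pipelined, effective_cpi)
```

The latency of a single instruction stays at five cycles in both cases. Only the throughput changes, and the effective CPI approaches the optimum of one as the instruction stream gets longer.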

Superscalar execution

Superscalar execution is the second major implementation of instruction level parallelism. Essentially, the hardware can execute more than one instruction per cycle. The first superscalar processor (CDC 6600, 1964) was introduced shortly after the first pipelined processor. A superscalar processor has multiple execution units which can be active in the same cycle. The optimal throughput is therefore larger than one instruction per cycle. Typical implementations in modern processors are 4-way superscalar (CPI=0.25).
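Whether the optimal throughput is reached depends on how many independent instructions are available. The following sketch is a strongly simplified model, not a description of real hardware: every instruction takes one cycle, up to `width` instructions whose inputs are ready are picked per cycle, and the dependency lists are hand-written examples.

```python
def issue_cycles(deps, width):
    """Cycles needed to execute all instructions with unit latency.

    deps[i] lists the indices of earlier instructions whose results
    instruction i needs. Each cycle executes up to `width` ready instructions.
    """
    done = set()                       # instructions finished in earlier cycles
    remaining = list(range(len(deps)))
    cycles = 0
    while remaining:
        cycles += 1
        issued = []
        for i in remaining:
            if len(issued) == width:
                break
            if all(d in done for d in deps[i]):   # all inputs available
                issued.append(i)
        for i in issued:               # results become visible next cycle
            done.add(i)
            remaining.remove(i)
    return cycles


independent = [[] for _ in range(8)]              # no dependencies at all
chain = [[]] + [[i - 1] for i in range(1, 8)]     # each needs its predecessor

print(issue_cycles(independent, width=4))  # 2 cycles, CPI = 0.25
print(issue_cycles(independent, width=1))  # 8 cycles, CPI = 1
print(issue_cycles(chain, width=4))        # 8 cycles, width does not help
```

Eight independent instructions reach the optimal CPI of 0.25 on the 4-wide model, while a chain of dependent instructions runs no faster than on a single pipeline.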

Supporting technologies

Instruction level parallelism can be controlled by hardware or by software. Modern processors typically rely on hardware control, because typical application codes expose very limited instruction level parallelism if executed in order and/or have only few instructions between jumps to other instruction addresses. The following techniques support this:

- Out-of-order execution

- Branch prediction

- Speculative execution

Memory hierarchy

Simultaneous multithreading

Data parallel execution units (SIMD)

Putting it all together

Node architecture

Multicore chips

Cache-coherent non-uniform memory architecture (ccNUMA)

Node topology

System architecture

Links and further information

- John Hennessy, David Patterson: Computer Architecture - A Quantitative Approach. Still the standard textbook on computer architecture.