HPC-Dictionary


Latest revision as of 15:32, 1 October 2019

Unix

Unix describes a family of operating systems. Popular Unix-like representatives include the Linux distributions Ubuntu and CentOS, as well as macOS, although the latter is not common on HPC systems. Their key features are the shell and the file system.

File System

The file system organizes all data (files) in a hierarchical structure of directories (folders). On Unix-based systems, the top directory is / (the "root" directory), from which all other directories branch off in a tree-like structure.

Most of the time you will be working in directories below /home/<username>, which is the user's home directory. The owner can freely modify all directories and files below /home/<username>.

Local filesystems

... exist only on a single node and can be neither read nor written from other nodes.

Examples are ext3/4, xfs and btrfs in Linux.

Shared filesystems

... can be read and written by all compute nodes in the cluster. A file created on one node is visible on all other nodes as well. They are sometimes also called global or cluster file systems.

Examples are the Network File System (NFS), Lustre and IBM's GPFS (a.k.a. "Spectrum Scale").
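To find out whether a directory lives on a local or a shared filesystem, you can ask for the type of the filesystem that contains it. The bash sketch below assumes GNU coreutils `stat`; the checked directories are only examples, and the reported type names (e.g. `nfs`, `xfs`, `lustre`) depend on your system.

```bash
#!/usr/bin/env bash
# Print the type of the filesystem that contains each directory.
# The directory list is an example; adjust it to your cluster.
show_fs_types() {
    local dir
    for dir in / "$HOME" /tmp; do
        # Skip entries that are empty or do not exist on this node
        [ -d "$dir" ] || continue
        # stat -f queries the filesystem; %T prints its type in readable form
        printf '%-20s %s\n' "$dir" "$(stat -f -c %T "$dir")"
    done
}

show_fs_types
```

Note that GNU `stat` reports ext3 and ext4 filesystems as `ext2/ext3`, because they share the same identifier.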

Environment Variables

An environment variable is a named, dynamic value that is part of a process's environment and is passed on to the programs that process starts. In the bash shell on Unix-based systems you can:

- set the value of a variable with: `export <variable-name>=<value>`
- read the value of a variable with: `echo $<variable-name>`

Software (or the user) can read environment variables to obtain information about the system or the environment, and can set them to influence how programs behave. The table below lists a few examples that illustrate their use.

| Environment Variable | Content |
|---|---|
| $USER | your current username |
| $PWD | the directory you are currently in |
| $HOSTNAME | hostname of the computer you are on |
| $HOME | your home directory |
| $PATH | colon-separated list of directories searched for executables when you run a command |
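The bash sketch below illustrates the commands above and one detail worth knowing: only exported variables are inherited by programs you start from the shell. `MY_SCRATCH` and `MY_LOCAL` are hypothetical names chosen for illustration.

```bash
#!/usr/bin/env bash
# MY_SCRATCH and MY_LOCAL are hypothetical names used only for illustration.

# Exported variables are passed on to child processes ...
export MY_SCRATCH="$HOME/scratch"
# ... while plain shell variables stay local to the current shell.
MY_LOCAL="only here"

echo "Parent sees:  $MY_SCRATCH / $MY_LOCAL"
bash -c 'echo "Child sees:   $MY_SCRATCH / ${MY_LOCAL:-<unset>}"'

# PATH is a colon-separated list; print one searched directory per line
IFS=':' read -r -a path_dirs <<< "$PATH"
for dir in "${path_dirs[@]}"; do
    echo "PATH entry:   $dir"
done
```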

Cluster

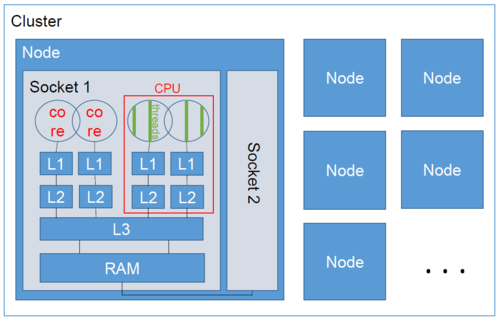

In the HPC sense, a cluster is a collection of nodes, usually connected by a network that provides high-bandwidth, low-latency communication. Users access a cluster by connecting to its dedicated login nodes.

Node

A node is an individual computer consisting of one or more sockets.

Backend Node

Backend nodes (compute nodes) are reserved for running the actual (scientific) computations, i.e. memory-demanding and long-running applications. They are the most powerful but also the most power-consuming part of a cluster, since they make up around 98% of it. Because users cannot access these nodes directly, a scheduler manages access to them. To run on these nodes, you submit a batch job to the batch system using a scheduler-specific command.

Copy Node

Copy nodes are reserved for transferring data to or from a cluster. They usually offer a better network connection than other nodes, and using them minimizes the disturbance of other users on the system. Because of their restricted use case, the software installed on these nodes may differ from that on other nodes. Not every facility provides a dedicated copy node at all; in that case, login nodes may be used to move data between systems instead.

Frontend Node

Synonym for login node.

Login Node

Login nodes are reserved for connecting to the cluster of a facility. Most of the time they can also be used for testing and for interactive tasks (e.g. analyzing previously collected application profiles). Such test runs should generally last no longer than a few minutes and should only be used to verify that your software runs correctly on the system and in its environment before you submit batch jobs to the batch system.

Central Processing Unit (CPU)

The term "CPU" is widely used in the field of HPC, yet it lacks a precise definition. It is mostly used to describe the concrete hardware of a node, but depending on the context it may refer to a socket (processor), a core or a hardware thread. To avoid misunderstandings, it is better to use the more precise terms defined below.

Socket

A socket is the physical package (processor) which contains multiple cores sharing the same memory.

Core

A core is the smallest unit of computing and is responsible for executing instructions. It has one hardware thread, or more if the hardware setting Hyperthreading is enabled. In the "pre-multicore" era, a processor had just one core.
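The socket, core and hardware-thread layout of a node can be inspected with `lscpu` from util-linux. Its `CPU(s)` line counts logical CPUs, i.e. hardware threads, which is one reason the term "CPU" is ambiguous. A minimal bash sketch; the field labels can differ slightly between util-linux versions, so the locale is fixed to `C` and indented lines are allowed.

```bash
#!/usr/bin/env bash
# Show the socket/core/hardware-thread layout of the current node.
show_topology() {
    # Newer util-linux versions indent some lines, so allow leading spaces
    LC_ALL=C lscpu | grep -E '^[[:space:]]*(CPU\(s\)|Socket\(s\)|Core\(s\) per socket|Thread\(s\) per core):'
}

if command -v lscpu >/dev/null 2>&1; then
    show_topology
fi
```

For example, a node with 2 sockets, 16 cores per socket and 2 hardware threads per core would report 2 × 16 × 2 = 64 under `CPU(s)`.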

Thread

- Hardware Threads: A processor core that can (and is configured to) execute more than one execution path in parallel provides several hardware threads (Hyperthreading, a hardware setting).

- Software Threads: A process may have several threads, all sharing the same memory address space, but each thread has its own stack (Multithreading, e.g. via OpenMP). Threads can be seen as "parallel execution paths" within the same program (e.g. to work through different and independent data arrays in parallel).

Software threads do not depend on hardware threads: you can run multithreaded applications on systems without Hyperthreading.
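The OpenMP runtime reads the standard environment variable `OMP_NUM_THREADS` to decide how many software threads to start, independent of whether the node has Hyperthreading. On Linux, the number of threads of a running process can be read from `/proc/<pid>/status`. A short bash sketch; `my_openmp_app` is a hypothetical program name.

```bash
#!/usr/bin/env bash
# Request 8 software threads for an OpenMP program (hypothetical binary name).
export OMP_NUM_THREADS=8
# ./my_openmp_app

# Print how many threads a process currently has (Linux /proc interface).
thread_count() {
    awk '/^Threads:/ { print $2 }' "/proc/$1/status"
}

# $$ is the PID of this shell; bash itself runs single-threaded.
echo "Threads of this shell: $(thread_count $$)"
```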

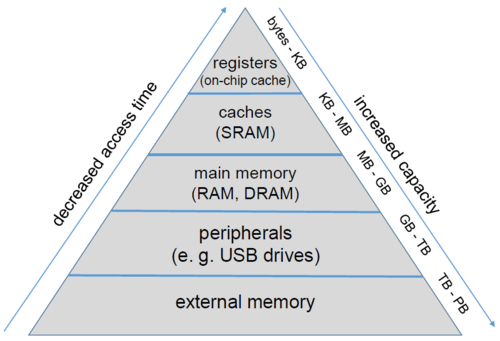

Random Access Memory (RAM)

RAM (a.k.a. main memory) serves as working memory for the cores. RAM is volatile memory: it loses all content when the power fails or is switched off.

In general, RAM is shared between all sockets of a node (all sockets can access all RAM). In NUMA, however, a socket may be able to access certain RAM areas only by going through other sockets.

The operating system keeps the address spaces of all processes separate and frees a process's memory once the process ends.
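On Linux, the kernel reports the size of a node's RAM in `/proc/meminfo`. A one-line bash sketch; note that inside containers or batch jobs, the memory you may actually use can be lower than these node-wide figures.

```bash
#!/usr/bin/env bash
# Print the node's total and currently available main memory (Linux only).
# Values in /proc/meminfo are given in kB.
grep -E '^(MemTotal|MemAvailable):' /proc/meminfo
```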

Cache

A cache is a relatively small amount of memory on the processor chip (die) that is much faster than RAM. It holds data fetched from main memory close to the cores working on them, so that they can be accessed faster than from RAM.

A modern processor typically has three cache levels: L1 and L2 are local to each core, while L3 (the Last Level Cache, LLC) is shared among all cores of a socket (on some processors, among a group of cores).
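On Linux, the cache hierarchy can be inspected through sysfs. This bash sketch lists the caches of logical CPU 0; the directory layout is the standard Linux one, but individual files may be missing on some architectures or virtual machines.

```bash
#!/usr/bin/env bash
# List the caches of logical CPU 0 via the Linux sysfs interface.
# shared_cpu_list shows which logical CPUs share each cache.
show_caches() {
    local idx
    for idx in /sys/devices/system/cpu/cpu0/cache/index*; do
        # If the glob matched nothing, idx is the literal pattern: skip it
        [ -d "$idx" ] || continue
        printf 'L%s %-12s %8s  shared by CPUs %s\n' \
            "$(cat "$idx/level")" "$(cat "$idx/type")" \
            "$(cat "$idx/size")" "$(cat "$idx/shared_cpu_list")"
    done
}

show_caches
```

The `shared_cpu_list` column makes the sharing visible: the L1 and L2 entries typically list only the hardware threads of CPU 0's own core, while the L3 entry lists many more logical CPUs.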

NUMA

Non-Uniform Memory Access (NUMA) is a hardware architecture in which the main memory chips are not connected equally to all sockets. For example, socket 1 may be directly connected to memory banks 1 to 8, whereas socket 2 is directly connected to banks 9 to 16. Accessing non-local memory (via the other socket) incurs a (small) performance penalty.

For HPC applications, it can therefore be advantageous to "pin" the application's processes or threads to certain cores. This prevents them from "hopping" between sockets, which would otherwise lead to varying access times to their data.
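On Linux, `numactl` (if installed) shows the NUMA layout and can bind a program's cores and memory to one NUMA node, while `taskset` from util-linux pins a process to specific cores. In this bash sketch, reading `Cpus_allowed_list` from `/proc/self/status` stands in for your application and shows which cores it may use; NUMA node 0 and core 0 are arbitrary choices, and inside containers memory binding may be refused.

```bash
#!/usr/bin/env bash
# Show the NUMA layout: nodes, their CPUs and memory, and access distances.
if command -v numactl >/dev/null 2>&1; then
    numactl --hardware
    # Run a command with both its CPUs and its memory bound to NUMA node 0.
    numactl --cpunodebind=0 --membind=0 grep Cpus_allowed_list /proc/self/status
fi

# Pin a process to core 0 only; the pinned process reports its allowed cores.
taskset -c 0 grep Cpus_allowed_list /proc/self/status

# For OpenMP programs, these standard variables ask the runtime to place
# threads on cores and bind them there instead of letting them migrate.
export OMP_PLACES=cores
export OMP_PROC_BIND=close
```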

Scalability

Scalability describes how well an application can make use of an increasing amount of hardware resources. In general, good scalability means that runtimes decrease as more and more cores are applied to a problem.

Typically, applications reach an upper limit of cores beyond which they do not scale further, i.e. more cores no longer reduce the runtime (or even increase it again). This is mainly due to the growing amount of arbitration and communication needed to coordinate the working cores.

However, good scalability can also mean that the execution time remains the same when the problem size grows in proportion to the hardware resources.

Strong Scalability

By involving more and more processor cores to solve the same problem (size), the application still achieves reduced runtimes.

Weak Scalability

When more and more processor cores are used, the application can tackle proportionally larger problems (size) in roughly the same runtime, even if additional cores no longer reduce the runtime for the same problem (size).
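Both kinds of scalability are commonly quantified from measured runtimes. The usual definitions are sketched below, with symbols introduced here for illustration: $T(p)$ is the runtime on $p$ cores, $S(p)$ the speedup and $E(p)$ the parallel efficiency.

$$
S(p) = \frac{T(1)}{T(p)}, \qquad E_{\mathrm{strong}}(p) = \frac{S(p)}{p}, \qquad E_{\mathrm{weak}}(p) = \frac{T(1)}{T(p)}
$$

For strong scaling the problem size is fixed; for weak scaling, $T(p)$ is measured with a problem $p$ times as large as the one used for $T(1)$. Ideal scaling corresponds to $E = 1$ in both cases. As a purely hypothetical example, a run taking 100 s on 1 core and 30 s on 4 cores gives $S(4) = 100/30 \approx 3.3$ and $E_{\mathrm{strong}}(4) \approx 0.83$.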

Scheduler

The scheduler is the administrative heart of any HPC cluster. Like a dispatcher, it receives batch jobs submitted by the users, arbitrating and coordinating their placement and execution on various compute nodes.

The batch script to be submitted can contain directives (pragmas) to control job placement (e.g. "put this job on any node with at least 16 cores and 32 GByte main memory" or "put this job on any node with a graphics card (GPU)").
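As an illustration, the placement requests above could look as follows in a batch script for Slurm, one widely used scheduler. This is a sketch that assumes Slurm; other schedulers (e.g. PBS or LSF) use different directives, and the script and program names are hypothetical. Slurm reads the `#SBATCH` lines as directives, while bash treats them as ordinary comments.

```bash
#!/usr/bin/env bash
#SBATCH --job-name=example
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=16
#SBATCH --mem=32G
#SBATCH --time=00:10:00
# To also request one GPU, remove one '#' from the next line.
##SBATCH --gres=gpu:1

# Use as many OpenMP threads as CPUs were granted (1 if run outside Slurm)
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"

echo "Running on $HOSTNAME with $OMP_NUM_THREADS threads"
# ./my_app   # hypothetical application
```

Such a script would be submitted with `sbatch job.sh`. Depending on the cluster's configuration, Slurm may count hardware threads rather than cores as "CPUs", another instance of the ambiguity around that term.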