Difference between revisions of "Binding/Pinning"

(Fix OMP_PLACES examples) |

|||

| (48 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| + | [[Category:HPC-User]] | ||

== Basics == | == Basics == | ||

| − | + | Pinning threads for shared-memory [[Parallel_Programming|parallelism]] or binding processes for distributed-memory parallelism is an advanced way to control how your system distributes the threads or processes across the available cores. It is important for improving the performance of your application by avoiding costly [[#RemoteMemoryAccess|remote memory accesses]] and keeping the threads or processes close to each other. Threads are "pinned" by setting certain [[OpenMP]]-related environment variables, which you can do with this command: | |

| − | + | $ export <env_variable_name>=<value> | |

| − | Pinning threads is an advanced way to control how your system distributes the threads across the available cores | + | The terms "thread pinning" and "thread affinity" as well as "process binding" and "process affinity" are used interchangeably. |

| − | The terms "thread pinning" and "thread affinity" are used interchangeably. | + | You can bind processes by specifying additional options when [[How_to_Use_MPI#How_to_Run_an_MPI_Executable|executing]] your [[MPI]] application. |

| − | + | __TOC__ | |

| − | [[File:Omp_places.png|thumb|350px|Schematic of how <code>OMP_PLACES={0}: | + | |

| + | == <span id="Pin_OMP"></span> How to Pin Threads in OpenMP == | ||

| + | |||

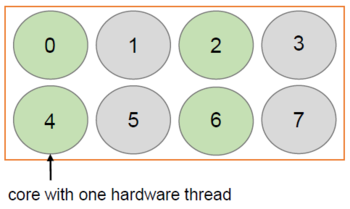

| + | [[File:Omp_places.png|thumb|350px|Schematic of how <code>OMP_PLACES={0}:4:2</code> would be interpreted]] | ||

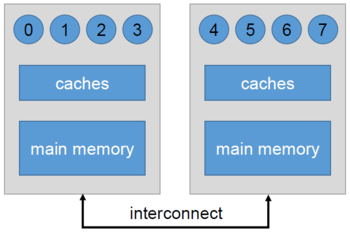

[[File:Proc_bind_close.PNG|thumb|350px|Schematic of how <code>OMP_PROC_BIND=close</code> would be interpreted on a system comprising 2 nodes with 4 hardware threads each]] | [[File:Proc_bind_close.PNG|thumb|350px|Schematic of how <code>OMP_PROC_BIND=close</code> would be interpreted on a system comprising 2 nodes with 4 hardware threads each]] | ||

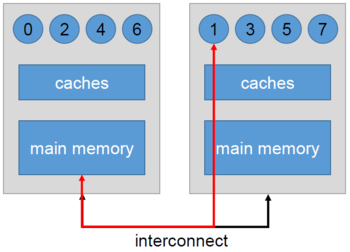

| − | [[File:Proc_bind_spread.PNG|thumb|350px|Schematic of <code>OMP_PROC_BIND=spread</code> and an remote memory access | + | [[File:Proc_bind_spread.PNG|thumb|350px|<span id="RemoteMemoryAccess"></span>Schematic of <code>OMP_PROC_BIND=spread</code> and an remote memory access of thread 1 accessing the other socket's memory (e.g. thread 0 and thread 1 work on the same data)]] |

| − | OMP_PLACES is employed to specify places on the machine where the threads are put. However, this variable on its own does not determine thread pinning completely, because your system still won't know in what pattern to assign the threads to the given places. Therefore, you also need to set OMP_PROC_BIND. | + | <code>OMP_PLACES</code> is employed to specify places on the machine where the threads are put. However, this variable on its own does not determine thread pinning completely, because your system still won't know in what pattern to assign the threads to the given places. Therefore, you also need to set <code>OMP_PROC_BIND</code>. |

| − | OMP_PROC_BIND specifies a binding policy which basically sets criteria by which the threads are distributed. | + | <code>OMP_PROC_BIND</code> specifies a binding policy which basically sets criteria by which the threads are distributed. |

If you want to get a schematic overview of your cluster's hardware, e. g. to figure out how many hardware threads there are, type: <code>$ lstopo</code>. | If you want to get a schematic overview of your cluster's hardware, e. g. to figure out how many hardware threads there are, type: <code>$ lstopo</code>. | ||

| − | === OMP_PLACES === | + | === <code>OMP_PLACES</code> === |

This variable can hold two kinds of values: a name specifying (hardware) places, or a list that marks places. | This variable can hold two kinds of values: a name specifying (hardware) places, or a list that marks places. | ||

| Line 35: | Line 39: | ||

|} | |} | ||

| − | In order to define specific places by an interval, OMP_PLACES can be set to <code><lowerbound>:<length>:<stride></code>. | + | In order to define specific places by an interval, <code>OMP_PLACES</code> can be set to <code><lowerbound>:<length>:<stride></code>. |

All of these three values are non-negative integers and must not exceed your system's bounds. The value of <code><lowerbound></code> can be defined as a list of hardware threads. As an interval, <code><lowerbound></code> has this format: <code>{<starting_point>:<length>}</code> that can be a single place, or a place that holds several hardware threads, which is indicated by <code><length></code>. | All of these three values are non-negative integers and must not exceed your system's bounds. The value of <code><lowerbound></code> can be defined as a list of hardware threads. As an interval, <code><lowerbound></code> has this format: <code>{<starting_point>:<length>}</code> that can be a single place, or a place that holds several hardware threads, which is indicated by <code><length></code>. | ||

{| class="wikitable" style="width:60%;" | {| class="wikitable" style="width:60%;" | ||

| − | | Example hardware || OMP_PLACES || Places | + | | Example hardware || Desired binding || <code>OMP_PLACES</code> || Places |

|- | |- | ||

| − | | 24 cores with one hardware thread each | + | | 24 cores with one hardware thread each || starting at core 0 use every 2nd core || <code>{0}:12:2</code> or <code>{0:1}:12:2</code> || <code>{0}, {2}, {4}, {6}, {8}, {10}, {12}, {14}, {16}, {18}, {20}, {22}</code> |

|- | |- | ||

| − | | 12 cores with two hardware threads each | + | | 12 cores with two hardware threads each || starting at the first two hardware threads on the first core ({0,1}) use every 4th core || <code>{0,1}:6:4</code> or <code>{0:2}:6:4</code> || <code>{0,1}, {4,5}, {8,9}, {12,13}, {16,17}, {20,21}</code> |

|} | |} | ||

| − | You can also determine these places with a comma-separated list. Say there are 8 cores available with one hardware thread each, and you would like to execute your application on the first four cores, you could define this: <code>$ export OMP_PLACES={0, 1, 2, 3}</code> | + | You can also determine these places with a comma-separated list. Say there are 8 cores available with one hardware thread each, and you would like to execute your application on the first four cores, you could define this: <code>$ export OMP_PLACES="{0,1,2,3}"</code> |

| − | === OMP_PROC_BIND === | + | === <code>OMP_PROC_BIND</code> === |

| − | Now that you have set OMP_PROC_BIND, you can now define the order in which the places should be assigned. This is especially useful for NUMA systems because some threads may have to access remote memory, which will slow your application down significantly. If OMP_PROC_BIND is not set, your system will distribute the threads across the nodes and cores randomly. | + | Now that you have set <code>OMP_PROC_BIND</code>, you can now define the order in which the places should be assigned. This is especially useful for NUMA systems (see [[#References|references]] below) because some threads may have to access remote memory, which will slow your application down significantly. If <code>OMP_PROC_BIND</code> is not set, your system will distribute the threads across the nodes and cores randomly. |

{| class="wikitable" style="width:60%;" | {| class="wikitable" style="width:60%;" | ||

| Line 66: | Line 70: | ||

|} | |} | ||

| + | == <span id="Pin_MPI"></span> Options for Binding in Open MPI == | ||

| + | |||

| + | Binding processes to certain processors can be done by specifying the options below when executing a program. This is a more advanced way of running an application and also requires knowledge about your system's architecture, e. g. how many cores there are (for an overview of your hardware topology, use <code>$ lstopo</code>). If none of these options are given, default values are set. | ||

| + | By overriding default values with the ones specified, you may be able to improve the performance of your application, if your system distributes them in a suboptimal way per default. | ||

| + | |||

| + | {| class="wikitable" style="width: 100%;" | ||

| + | | Option || Function || Explanation | ||

| + | |- | ||

| + | | --bind-to <arg> || bind to the processors associated with hardware component; <code><arg></code> can be one of: none, hwthread, core, l1cache, l2cache, l3cache, socket, numa, board; default value: <code>core</code> || e. g.: in case of <code>l3cache</code> the processes will be bound to those processors that share the same L3 cache | ||

| + | |- | ||

| + | | --map-by <arg> || map to the specified hardware component. <code><arg></code> can be one of: slot, hwthread, core, L1cache, L2cache, L3cache, socket, numa, board, node, sequential, distance, and ppr; default value: <code>socket</code> || if <code>--map-by socket</code> with <code>--bind-to core</code> is used and the program is launched with 4 processes on a two socket machine, process 0 is bound to the first core on socket 0, process 1 is bound to the first core on socket 1, process 2 is bound to the second core on socket 0 and process 3 is bound to the second core on socket 1. | ||

| + | |- | ||

| + | | --report-bindings || print any bindings for launched processes to the console || sample output matching the example for <code>--map-by</code>: | ||

| + | <syntaxhighlight lang="bash"> | ||

| + | [myhost] MCW rank 0 bound to socket 0[core 0[hwt 0-1]]: [BB/../../../../..][../../../../../..] | ||

| + | [myhost] MCW rank 1 bound to socket 1[core 6[hwt 0-1]]: [../../../../../..][BB/../../../../..] | ||

| + | [myhost] MCW rank 2 bound to socket 0[core 1[hwt 0-1]]: [../BB/../../../..][../../../../../..] | ||

| + | [myhost] MCW rank 3 bound to socket 1[core 7[hwt 0-1]]: [../../../../../..][../BB/../../../..] | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | == Options for Binding in Intel MPI == | ||

| + | |||

| + | In order to bind processes using Intel MPI, users can set several environment variables to determine a binding policy. The most important ones are listed and explained below. | ||

| + | You can either export them to your environment, or specify them as an option when running your application, like this: | ||

| + | $ mpiexec -genv <env_variable>=<value> [...] -n <num_procs> <application> | ||

| + | |||

| + | {| class="wikitable" style="width: 70%;" | ||

| + | | Variable || Explanation | ||

| + | |- | ||

| + | | <code>I_MPI_PIN=<arg></code> || these values for arg enable binding: enable, yes, on, 1, and these disable binding: disable, no, off, 0 | ||

| + | |- | ||

| + | | <code>I_MPI_PIN_MODE=<pinmode></code> || if set to mpd or pm, the available process manager will take care of the pinning, if pinmode=lib, the Intel MPI library pins the processes (per default, pinmode is set to "pm") | ||

| + | |- | ||

| + | | <code>I_MPI_PIN_CELL=<cell></code> || defines granularity of pinning resolution, where "unit" specifies a logical CPU and "core" a physical core; if <code>I_MPI_PIN_DOMAIN</code> is set, the cell will be "unit"; if you are using <code>I_MPI_PIN_PROCESSOR_LIST</code>, cell can be either, depending on the number of processes and cores available on your machine | ||

| + | |- | ||

| + | | <code>I_MPI_PIN_DOMAIN=<arg></code> || defines a separate set of CPUs (domain) on a single node; one process will be started per domain; targeted mainly at hybrid programs; arg can be one of: core, socket, node, cache1/2/3, cache; to define a custom pattern, put: <code><size>[:<layout>]</code> with size as the number of CPUs per domain, and layout as the distance inbetween domain members (e. g. scatter, compact) | ||

| + | |- | ||

| + | | <code>I_MPI_PIN_ORDER=<order></code> || order in which the MPI processes will be associated with the domains; <code>order</code> can be one of: range, scatter, compact, spread, bunch; <code>compact</code>, for example, will put those domains next to each other that share the same resources | ||

| + | |- | ||

| + | | <code>I_MPI_PIN_PROCESSOR_LIST=<list></code> || <code>list</code> can be set to a list of CPUs to start processes on, e. g. <code>2,4-6</code> which will select CPUs 2, 4, 5, 6. It can also be set to a procset, which can be set to all, allcores, allsocks, and the processes can be mapped in more detail by passing more parameters (see [[#References|references]] below) | ||

| + | |} | ||

== References == | == References == | ||

| Line 71: | Line 117: | ||

[http://pages.tacc.utexas.edu/~eijkhout/pcse/html/omp-affinity.html Thread affinity in OpenMP] | [http://pages.tacc.utexas.edu/~eijkhout/pcse/html/omp-affinity.html Thread affinity in OpenMP] | ||

| − | [https://docs.oracle.com/cd/E60778_01/html/E60751/goztg.html More information on OMP_PLACES and OMP_PROC_BIND] | + | [https://docs.oracle.com/cd/E60778_01/html/E60751/goztg.html More information on <code>OMP_PLACES</code> and <code>OMP_PROC_BIND</code>] |

| + | |||

| + | [https://doc.itc.rwth-aachen.de/download/attachments/35947076/03_OpenMPNumaSimd.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD] | ||

| + | |||

| + | [https://www.open-mpi.org/faq/?category=tuning#using-paffinity-v1.4 FAQ about process affinity in Open MPI] | ||

| + | |||

| + | [https://software.intel.com/sites/default/files/Reference_Manual_1.pdf Reference manual for Intel MPI Libraray (go to "Binding Options")] | ||

| + | |||

| + | [https://software.intel.com/en-us/mpi-developer-reference-windows-environment-variables-for-process-pinning Overview of environment variables for binding in Intel MPI] | ||

Latest revision as of 15:35, 23 March 2022

Basics

Pinning threads (for shared-memory parallelism) or binding processes (for distributed-memory parallelism) is an advanced way to control how your system distributes the threads or processes across the available cores. It can improve the performance of your application by avoiding costly remote memory accesses and by keeping threads or processes that work on the same data close to each other. Threads are "pinned" by setting certain OpenMP-related environment variables, which you can do with this command:

$ export <env_variable_name>=<value>

The terms "thread pinning" and "thread affinity" as well as "process binding" and "process affinity" are used interchangeably. You can bind processes by specifying additional options when executing your MPI application.

How to Pin Threads in OpenMP

OMP_PLACES is employed to specify places on the machine where the threads are put. However, this variable on its own does not determine thread pinning completely, because your system still won't know in what pattern to assign the threads to the given places. Therefore, you also need to set OMP_PROC_BIND.

OMP_PROC_BIND specifies a binding policy, i. e. the pattern by which the threads are distributed over these places.

If you want to get a schematic overview of your cluster's hardware, e. g. to figure out how many hardware threads there are, type: $ lstopo.

OMP_PLACES

This variable can hold two kinds of values: a name specifying (hardware) places, or a list that marks places.

| Abstract name | Meaning |
|---|---|
| threads | a place is a single hardware thread, i. e. with hyperthreading each hardware thread of a core is a separate place |
| cores | a place is a single core with all of its hardware threads |
| sockets | a place is a single socket |

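In order to define specific places by an interval, OMP_PLACES can be set to <lowerbound>:<length>:<stride>. Here, <lowerbound> is the first place, <length> is the number of places to create, and <stride> is the distance between the starting points of two consecutive places.

<length> and <stride> are integers, and the resulting places must not exceed your system's bounds. <lowerbound> is itself a place written in braces. It can be given as a list of hardware threads, e. g. {0,1}, or as an interval of the form {<starting_point>:<length>}, where this inner <length> is the number of hardware threads in the place. It can therefore describe a single hardware thread or a place that holds several hardware threads.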

| Example hardware | Desired binding | OMP_PLACES | Places |
|---|---|---|---|
| 24 cores with one hardware thread each | starting at core 0 use every 2nd core | {0}:12:2 or {0:1}:12:2 | {0}, {2}, {4}, {6}, {8}, {10}, {12}, {14}, {16}, {18}, {20}, {22} |
| 12 cores with two hardware threads each | starting at the first two hardware threads on the first core ({0,1}) use every 2nd core, i. e. a stride of 4 hardware threads | {0,1}:6:4 or {0:2}:6:4 | {0,1}, {4,5}, {8,9}, {12,13}, {16,17}, {20,21} |
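To make the interval arithmetic in the table easier to check, here is a small shell sketch that expands an interval into the explicit place list. The helper name `expand_places` and its argument order are made up for this illustration; it is not part of OpenMP or of any tool, and it assumes that hardware threads are numbered consecutively.

```shell
# expand_places START WIDTH COUNT STRIDE   (hypothetical helper)
# Expands the OMP_PLACES interval {START:WIDTH}:COUNT:STRIDE into an explicit list.
expand_places() (
  start=$1; width=$2; count=$3; stride=$4
  out=""
  i=0
  while [ "$i" -lt "$count" ]; do
    first=$((start + i * stride))      # first hardware thread of place i
    place=""
    j=0
    while [ "$j" -lt "$width" ]; do    # collect WIDTH consecutive hardware threads
      place="${place}${place:+,}$((first + j))"
      j=$((j + 1))
    done
    out="${out}${out:+, }{${place}}"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
)

expand_places 0 1 12 2   # first table row:  {0:1}:12:2
expand_places 0 2 6 4    # second table row: {0:2}:6:4
```

The two calls reproduce the "Places" column of the table, which is a quick way to convince yourself that a given interval does not run past the last hardware thread of your machine.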

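You can also specify places with a comma-separated list. Say there are 8 cores available with one hardware thread each, and you would like to execute your application on the first four cores; you could define this: $ export OMP_PLACES="{0,1,2,3}". Note that this defines a single place containing all four cores, so threads may still move among them. To create one place per core, write $ export OMP_PLACES="{0},{1},{2},{3}" instead.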

OMP_PROC_BIND

Now that you have set OMP_PLACES, you can define the order in which the places should be assigned by setting OMP_PROC_BIND. This is especially useful for NUMA systems (see references below) because threads that have to access remote memory will slow your application down significantly. If OMP_PROC_BIND is not set, the placement is left to the OpenMP runtime and the operating system, which may distribute the threads across the NUMA nodes and cores arbitrarily and move them at run time.

| Value | Function |
|---|---|
| true | the threads should not be moved |
| false | the threads can be moved |
| master | worker threads are in the same partition as the master |
| close | worker threads are close to the master in contiguous partitions, e. g. if the master is occupying hardware thread 0, worker 1 will be placed on hw thread 1, worker 2 on hw thread 2 and so on |
| spread | workers are spread across the available places to maximize the space between two neighbouring threads |
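As a rough illustration of how the two variables are combined, the following shell sketch sets a place list and a binding policy before launching a program. The binary name `./my_openmp_app` and the thread count are placeholders chosen for this example. `OMP_DISPLAY_AFFINITY` is an OpenMP 5.0 variable and only has an effect if your runtime supports it.

```shell
# One place per core, threads packed onto neighbouring places.
export OMP_PLACES=cores
export OMP_PROC_BIND=close
export OMP_NUM_THREADS=4          # assumed thread count for this example
export OMP_DISPLAY_AFFINITY=true  # OpenMP 5.0: ask the runtime to report each thread's binding

APP=./my_openmp_app               # hypothetical binary, replace with your own
if [ -x "$APP" ]; then
  "$APP"
else
  echo "OMP_PLACES=$OMP_PLACES OMP_PROC_BIND=$OMP_PROC_BIND (no $APP found, nothing launched)"
fi
```

Changing `OMP_PROC_BIND` to `spread` with the same places would instead distribute the four threads as far apart as possible, which may suit codes whose threads work on separate data.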

Options for Binding in Open MPI

Binding processes to certain processors can be done by specifying the options below when executing a program. This is a more advanced way of running an application and also requires knowledge about your system's architecture, e. g. how many cores there are (for an overview of your hardware topology, use $ lstopo). If none of these options are given, default values are set.

By overriding the default values with your own, you may be able to improve the performance of your application if your system distributes the processes in a suboptimal way by default.

| Option | Function | Explanation |
|---|---|---|
| --bind-to <arg> | bind to the processors associated with hardware component; <arg> can be one of: none, hwthread, core, l1cache, l2cache, l3cache, socket, numa, board; default value: core | e. g.: in case of l3cache the processes will be bound to those processors that share the same L3 cache |
| --map-by <arg> | map to the specified hardware component. <arg> can be one of: slot, hwthread, core, L1cache, L2cache, L3cache, socket, numa, board, node, sequential, distance, and ppr; default value: socket | if --map-by socket with --bind-to core is used and the program is launched with 4 processes on a two socket machine, process 0 is bound to the first core on socket 0, process 1 is bound to the first core on socket 1, process 2 is bound to the second core on socket 0 and process 3 is bound to the second core on socket 1. |
| --report-bindings | print any bindings for launched processes to the console | sample output matching the example for --map-by (listed below the table) |

[myhost] MCW rank 0 bound to socket 0[core 0[hwt 0-1]]: [BB/../../../../..][../../../../../..]

[myhost] MCW rank 1 bound to socket 1[core 6[hwt 0-1]]: [../../../../../..][BB/../../../../..]

[myhost] MCW rank 2 bound to socket 0[core 1[hwt 0-1]]: [../BB/../../../..][../../../../../..]

[myhost] MCW rank 3 bound to socket 1[core 7[hwt 0-1]]: [../../../../../..][../BB/../../../..]
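The options from the table can be combined on one command line. The sketch below assembles the call described in the --map-by example (4 processes, mapped by socket, bound to cores, with bindings reported). The application name `./my_mpi_app` is a placeholder, and the script only prints the command when `mpirun` or the application is not available.

```shell
APP=./my_mpi_app   # hypothetical MPI binary, replace with your own
NP=4               # number of processes, as in the --map-by example

CMD="mpirun -np $NP --map-by socket --bind-to core --report-bindings $APP"

# Launch only if both the launcher and the application exist; otherwise show the command.
if command -v mpirun >/dev/null 2>&1 && [ -x "$APP" ]; then
  $CMD
else
  echo "would run: $CMD"
fi
```

Keeping --report-bindings in the command while experimenting makes it easy to compare the actual placement with the one you intended.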

Options for Binding in Intel MPI

In order to bind processes using Intel MPI, users can set several environment variables to determine a binding policy. The most important ones are listed and explained below. You can either export them to your environment, or specify them as an option when running your application, like this:

$ mpiexec -genv <env_variable> <value> [...] -n <num_procs> <application>
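A concrete call might look like the following sketch, which passes two of the pinning variables described in the table below using the `-genv <name> <value>` form. The application name `./my_hybrid_app` and the process count are assumptions made for this example, and the script only prints the command when `mpiexec` or the application is not available.

```shell
APP=./my_hybrid_app   # hypothetical binary, replace with your own
NP=2                  # assumed: one process per socket on a two-socket node

CMD="mpiexec -genv I_MPI_PIN 1 -genv I_MPI_PIN_DOMAIN socket -n $NP $APP"

# Launch only if both the launcher and the application exist; otherwise show the command.
if command -v mpiexec >/dev/null 2>&1 && [ -x "$APP" ]; then
  $CMD
else
  echo "would run: $CMD"
fi
```

Exporting the same variables in your shell or job script before calling `mpiexec` should have the same effect and keeps the command line shorter.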

| Variable | Explanation |
|---|---|
| I_MPI_PIN=<arg> | these values for arg enable binding: enable, yes, on, 1, and these disable binding: disable, no, off, 0 |
| I_MPI_PIN_MODE=<pinmode> | if set to mpd or pm, the available process manager will take care of the pinning; if pinmode=lib, the Intel MPI library pins the processes (by default, pinmode is set to "pm") |
| I_MPI_PIN_CELL=<cell> | defines the granularity of the pinning resolution, where "unit" specifies a logical CPU and "core" a physical core; if I_MPI_PIN_DOMAIN is set, the cell will be "unit"; if you are using I_MPI_PIN_PROCESSOR_LIST, cell can be either, depending on the number of processes and cores available on your machine |
| I_MPI_PIN_DOMAIN=<arg> | defines a separate set of CPUs (domain) on a single node; one process will be started per domain; targeted mainly at hybrid programs; arg can be one of: core, socket, node, cache1/2/3, cache; to define a custom pattern, put: <size>[:<layout>] with size as the number of CPUs per domain, and layout as the distance between domain members (e. g. scatter, compact) |
| I_MPI_PIN_ORDER=<order> | order in which the MPI processes will be associated with the domains; order can be one of: range, scatter, compact, spread, bunch; compact, for example, will put those domains next to each other that share the same resources |
| I_MPI_PIN_PROCESSOR_LIST=<list> | list can be set to a list of CPUs to start processes on, e. g. 2,4-6 which will select CPUs 2, 4, 5, 6. It can also be set to a procset, which can be set to all, allcores, allsocks, and the processes can be mapped in more detail by passing more parameters (see references below) |
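The list syntax of I_MPI_PIN_PROCESSOR_LIST mixes single CPUs and ranges. The shell sketch below expands such a list so you can check which CPUs it selects. The helper name `expand_cpu_list` is invented for this illustration and is not part of Intel MPI; it only handles plain numbers and `a-b` ranges, not the procset keywords.

```shell
# expand_cpu_list LIST   (hypothetical helper)
# Expands a comma-separated CPU list with ranges, e.g. "2,4-6", into single CPU numbers.
expand_cpu_list() (
  list=$1
  out=""
  IFS=,                       # split the list at commas (local to this subshell)
  for item in $list; do
    case $item in
      *-*) lo=${item%-*}; hi=${item#*-} ;;   # range "a-b"
      *)   lo=$item; hi=$item ;;             # single CPU
    esac
    n=$lo
    while [ "$n" -le "$hi" ]; do
      out="${out}${out:+ }$n"
      n=$((n + 1))
    done
  done
  printf '%s\n' "$out"
)

expand_cpu_list 2,4-6   # prints: 2 4 5 6
```

The call at the end matches the example from the table, where 2,4-6 selects CPUs 2, 4, 5 and 6.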

References

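Thread affinity in OpenMP: http://pages.tacc.utexas.edu/~eijkhout/pcse/html/omp-affinity.html

More information on OMP_PLACES and OMP_PROC_BIND: https://docs.oracle.com/cd/E60778_01/html/E60751/goztg.html

Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD: https://doc.itc.rwth-aachen.de/download/attachments/35947076/03_OpenMPNumaSimd.pdf

FAQ about process affinity in Open MPI: https://www.open-mpi.org/faq/?category=tuning#using-paffinity-v1.4

Reference manual for Intel MPI Library (go to "Binding Options"): https://software.intel.com/sites/default/files/Reference_Manual_1.pdf

Overview of environment variables for binding in Intel MPI: https://software.intel.com/en-us/mpi-developer-reference-windows-environment-variables-for-process-pinning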