Performance profiling

Latest revision as of 12:31, 19 July 2024

In a performance engineering context, performance profiling means relating performance metric measurements to source code execution. Typical data sources are the operating system, the execution environment, or measurement facilities in the hardware. The following explanations focus on metrics based on hardware performance monitoring (HPM).

Introduction

Every modern processor supports so-called hardware performance monitoring (HPM) units. Using them requires dedicated profiling tools. HPM metrics give a very detailed view of software-hardware interaction and add almost no overhead, so every serious performance engineering effort should use an HPM tool for profiling. A very good overview of the HPM capabilities of many x86 processor architectures is given in the Likwid Wiki (https://github.com/RRZE-HPC/likwid/wiki).

HPM units consist of programmable counters in different parts of the chip. For example, every Intel processor has at least four general-purpose counters per core, plus many more counters in different parts of the uncore (the part of the chip that is shared by multiple cores).

There are two basic ways to use HPM units:

- End-to-end measurements: A counter is programmed and started. It measures everything executed on its part of the hardware. The counter can be read while running or after being stopped. The advantage is that no overhead is introduced during the measurement. The measurement is very accurate but only provides averages. To measure individual code regions, an instrumentation API has to be used, and the code has to be pinned to specific processors. Also, only one fixed event set can be measured at a time. The Likwid tool likwid-perfctr is based on this approach.

- Sampling-based measurements: Events are related to source code by statistical sampling. Counters are configured and started; when a counter exceeds a configured threshold, an interrupt is triggered that reads out the program counter. This information is stored and analysed later. Sampling-based tools introduce overhead through the interrupts and the additional bookkeeping during the measurement, and the results are subject to statistical errors. On the other hand, the code needs to be neither pinned nor instrumented, the complete application can be measured and analysed in one run, and measuring multiple event sets is no problem. Most advanced tools employ sampling. Sampling requires extensive kernel support, which is accessible through the Linux perf interface.
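The sampling principle described above can be illustrated with a small simulation. This is a purely illustrative sketch that uses no real HPM interface: a hypothetical stream of counted events records which function was executing, a software counter "overflows" every `period` events, and each overflow attributes one sample to the current function, mimicking the interrupt that reads the program counter. The names `Event`, `sample_profile` and `period` are assumptions made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// One counted event (e.g. a retired instruction), tagged with the function
// that was executing. Stand-in for what the program counter would reveal.
struct Event {
    std::string function;
};

// Simulates overflow-based sampling: the counter is incremented per event and,
// each time it reaches `period`, one sample is attributed to the function that
// is currently executing and the counter is re-armed. Returns samples per function.
std::map<std::string, std::size_t> sample_profile(const std::vector<Event>& events,
                                                  std::size_t period) {
    assert(period > 0);
    std::map<std::string, std::size_t> samples;
    std::size_t counter = 0;
    for (const Event& e : events) {
        if (++counter == period) {   // counter overflow -> "interrupt"
            ++samples[e.function];   // record where execution currently is
            counter = 0;             // re-arm the counter
        }
    }
    return samples;
}
```

For a stream of 7,500 events in a function `kernel` followed by 2,500 events in `setup`, a period of 100 attributes 75 samples to `kernel` and 25 to `setup`, reproducing the true 75/25 split. For short regions or long periods the estimate becomes coarser, which is where the statistical error of sampling comes from.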

It is often difficult for software developers to choose the right set of raw events to accurately measure the high-level metrics of interest. Most of those metrics cannot be measured directly; instead, they are derived from a set of raw events. In addition, processor vendors usually take no responsibility for wrong event counts. One therefore has to rely on the tool to choose the right event sets and to validate the derived results.
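As an illustration of how derived metrics are computed from raw counts, the sketch below turns a few raw event counts into CPI and memory bandwidth. It assumes, as is common for memory controller events, that each counted read or write event corresponds to one 64-byte cache line transfer. The struct `RawCounts` and its fields are hypothetical and do not correspond to the event names of a specific processor.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical raw counter readings for one measured region.
struct RawCounts {
    std::uint64_t instructions;   // retired instructions
    std::uint64_t core_cycles;    // unhalted core clock cycles
    std::uint64_t mem_reads;      // memory controller read events (cache lines)
    std::uint64_t mem_writes;     // memory controller write events (cache lines)
    double runtime_s;             // wall-clock time of the region in seconds
};

constexpr double kCacheLineBytes = 64.0;   // assumption: one event = one 64-byte line

// Cycles per instruction: lower values mean higher instruction throughput.
double cpi(const RawCounts& c) {
    assert(c.instructions > 0);
    return static_cast<double>(c.core_cycles) / static_cast<double>(c.instructions);
}

// Memory data volume in bytes (reads plus writes).
double memory_volume_bytes(const RawCounts& c) {
    return kCacheLineBytes * static_cast<double>(c.mem_reads + c.mem_writes);
}

// Memory bandwidth in MBytes/s (1 MByte = 1e6 bytes).
double memory_bandwidth_mbytes_per_s(const RawCounts& c) {
    assert(c.runtime_s > 0.0);
    return memory_volume_bytes(c) / c.runtime_s * 1.0e-6;
}
```

The point of the sketch is that none of these metrics is a single event: each combines several raw counts with an assumption (here the 64-byte line size) that the tool has to get right for the specific processor.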

Recommended usage

HPM can be used to measure resource utilization and the decomposition of executed instructions, as well as for diagnostic analysis of software-hardware interaction. We recommend first measuring resource utilization and instruction decomposition for all regions at the top of the runtime profile.

Metrics to measure (typical units in parentheses):

- Operation throughput (Flops/s)

- Overall instruction throughput (CPI)

- Instruction counts broken down by instruction type (FP instructions, loads and stores, branch instructions, other instructions)

- Instruction counts broken down by SIMD width (scalar, SSE, AVX, AVX512 for x86). On most architectures this is restricted to arithmetic instructions.

- Data volumes and bandwidth to main memory (GB and GB/s)

- Data volumes and bandwidth to different cache levels (GB and GB/s)
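The SIMD-width breakdown is also what makes an operation count possible, because each packed instruction performs several operations. The sketch below assumes double precision, where a scalar instruction performs one operation and 128-, 256- and 512-bit packed instructions perform 2, 4 and 8 operations respectively. It deliberately ignores fused multiply-add instructions (two operations each), whose counting depends on the processor's events. The struct `FpInstructionCounts` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical retired double-precision arithmetic instruction counts,
// broken down by SIMD width.
struct FpInstructionCounts {
    std::uint64_t scalar;
    std::uint64_t packed128;   // e.g. SSE: 2 doubles per instruction
    std::uint64_t packed256;   // e.g. AVX: 4 doubles per instruction
    std::uint64_t packed512;   // e.g. AVX-512: 8 doubles per instruction
};

// Number of double-precision floating-point operations (FMA not treated specially).
double dp_flops(const FpInstructionCounts& c) {
    return 1.0 * c.scalar + 2.0 * c.packed128 + 4.0 * c.packed256 + 8.0 * c.packed512;
}

// Operation throughput in MFlops/s.
double dp_mflops_per_s(const FpInstructionCounts& c, double runtime_s) {
    assert(runtime_s > 0.0);
    return dp_flops(c) / runtime_s * 1.0e-6;
}

// Fraction of arithmetic instructions that are packed (a simple vectorization ratio).
double vectorization_ratio(const FpInstructionCounts& c) {
    const double total = static_cast<double>(c.scalar + c.packed128 + c.packed256 + c.packed512);
    assert(total > 0.0);
    return static_cast<double>(c.packed128 + c.packed256 + c.packed512) / total;
}
```

For example, 100 scalar and 300 AVX instructions give 100 + 4 * 300 = 1300 operations and a vectorization ratio of 0.75.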

Useful diagnostic metrics are:

- Clock (GHz)

- Power (W)

Tools

likwid-perfctr

The Likwid tools (https://github.com/RRZE-HPC/likwid) provide the command line tool likwid-perfctr for measuring HPM events as well as other data sources such as RAPL counters. likwid-perfctr supports all modern x86 architectures and has early support for Power and ARM processors. It is only available for the Linux operating system. Because it performs end-to-end measurements, the application has to be pinned to cores; affinity control is, however, built into the tool. Some notable features are:

- Lightweight tool with a low learning curve

- Full event support for core and uncore counters, as far as possible

- Flexible thread group syntax for specifying which cores to measure

- Portable performance groups with preconfigured event sets and validated derived metrics

- Own user-space implementation based on the low-level MSR kernel interface, as well as a perf backend

- Functionality is also available as part of the Likwid library API

- The Marker API can also be used as a very accurate runtime profiler

- Multiple modes:

  - Wrapper mode (end-to-end measurement of an application run)

  - Stethoscope mode (measure events on a set of cores for a specified duration)

  - Timeline mode (time-resolved measurement that outputs performance metrics at a specified interval, in ms or s)

  - Marker API (lightweight C and Fortran 90 API with region markers; this is what is usually used in full-scale production codes)

All recommended metrics can be measured using the MEM_DP/MEM_SP, BRANCH, DATA, L2 and L3 performance groups.
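A minimal sketch of the Marker API in a standalone program is shown below. It includes the header `likwid.h`, as in the HPCG example on this page, and follows the convention that the markers are only active when the code is compiled with `-DLIKWID_PERFMON` and linked with `-llikwid`. Without that define, the fallback macros turn every marker into a no-op so the code still builds. The kernel `triad`, the region name "triad" and the function `run_instrumented` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#ifdef LIKWID_PERFMON
#include <likwid.h>            // markers become real calls into the Likwid library
#else
// Fallback: without -DLIKWID_PERFMON the markers compile to nothing.
#define LIKWID_MARKER_INIT
#define LIKWID_MARKER_THREADINIT
#define LIKWID_MARKER_START(region)
#define LIKWID_MARKER_STOP(region)
#define LIKWID_MARKER_CLOSE
#endif

// Hypothetical kernel a[i] = b[i] + s * c[i], measured as region "triad".
void triad(std::vector<double>& a, const std::vector<double>& b,
           const std::vector<double>& c, double s) {
    assert(a.size() == b.size() && b.size() == c.size());
    LIKWID_MARKER_START("triad");
    for (std::size_t i = 0; i < a.size(); ++i) {
        a[i] = b[i] + s * c[i];
    }
    LIKWID_MARKER_STOP("triad");
}

// Program skeleton: initialize once, run the instrumented kernel, then close
// so that likwid-perfctr can report the region results.
int run_instrumented(std::size_t n) {
    LIKWID_MARKER_INIT;
    LIKWID_MARKER_THREADINIT;
    std::vector<double> a(n, 0.0), b(n, 1.0), c(n, 2.0);
    triad(a, b, c, 3.0);
    LIKWID_MARKER_CLOSE;
    return a.empty() ? 0 : static_cast<int>(a[0]);   // 1 + 3 * 2 = 7
}
```

A binary built this way would be run under likwid-perfctr with the `-m` option to activate the Marker API, for example `likwid-perfctr -C 0 -g MEM_DP -m ./a.out`.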

Oracle Sampling Collector and Performance Analyzer

The Oracle Sampling Collector and the Performance Analyzer are a pair of tools that can be used to collect and analyze performance data for serial or parallel applications.

Performance data, for example the hierarchy of function calls and hardware performance counter values, can be gathered by running the program under the control of the Sampling Collector:

```sh
$ collect <opt> a.out
```

This creates an experiment directory (test.1.er by default), which can then be examined with the Performance Analyzer:

```sh
$ analyzer test.1.er
```

More information can be found in the Oracle documentation: https://docs.oracle.com/cd/E77782_01/html/E77798/index.html

Intel VTune Amplifier

Intel VTune Amplifier is a sophisticated commercial performance analysis tool suite. Its command line interface (https://software.intel.com/en-us/vtune-amplifier-help-command-line-interface) utilises the Linux perf interface. The tool can measure OpenMP and MPI parallel applications and supports multiple predefined analysis targets.

Analysis targets include:

- Hotspots

- HPC Performance

- Memory access

Usage, here with the `hpc-performance` analysis target (`<target>` is the application to be profiled):

```sh
$ amplxe-cl -collect hpc-performance [-knob <knobName=knobValue>] [--] <target>
```

Every analysis target supports so-called knobs that adjust its behavior. An overview of the knobs supported by an analysis target is available with:

```sh
$ amplxe-cl -help collect hpc-performance
```

Help on using `amplxe-cl` with MPI can be found in the Intel documentation: https://software.intel.com/en-us/vtune-amplifier-help-mpi-code-analysis

Score-P

Score-P is a measurement infrastructure that serves as a backend for multiple other tools. It is targeted at large-scale parallel measurements. More information can be found on the VI-HPS website: https://www.vi-hps.org/projects/score-p/

TAU

The TAU Performance System is a portable profiling and tracing toolkit for performance analysis of parallel programs written in Fortran, C, C++, UPC, Java, and Python. More information can be found on the TAU webpage: http://www.cs.uoregon.edu/research/tau/home.php

HPCToolkit

HPCToolkit is an integrated suite of tools for measurement and analysis of program performance on computers ranging from multicore desktop systems to the largest supercomputers. More information can be found on the HPCToolkit webpage: http://hpctoolkit.org/

Example

This section presents an analysis of the HPCG 3.0 reference code to illustrate the benefit of HPM profiling. The High Performance Conjugate Gradients (HPCG) Benchmark project (https://www.hpcg-benchmark.org/) aims to complement the Linpack benchmark, which is limited by instruction throughput, with a benchmark that is limited by memory bandwidth.

Benchmarking was performed on a standard Intel Ivy Bridge-EP cluster node running at 2.2 GHz (2 sockets, 10 cores per socket). The clock frequency was fixed at the nominal frequency. The code was compiled with the Intel compiler tool chain version 17.0up03. Performance profiling was done with Likwid version 4.1.2.

Options used for benchmarking:

```make
HPCG_OPTS = -DHPCG_NO_OPENMP
CXXFLAGS = $(HPCG_DEFS) -fast -xhost
```

As can be seen, the pure MPI build was used. Application benchmarking was performed with affinity controlled by likwid-mpirun (https://github.com/RRZE-HPC/likwid/wiki/Likwid-Mpirun). Static code analysis revealed that HPCG uses a sparse CRS (compressed row storage) implementation for the smoother. The smoother, which dominates the runtime profile, has a data access pattern dominated by reads. Therefore the results were compared to a synthetic load-only benchmark.
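To make this access pattern concrete, the sketch below implements one symmetric Gauss-Seidel sweep (a forward sweep followed by a backward sweep) on a matrix stored in CRS format. It is a generic textbook version written for illustration, not HPCG's `ComputeSYMGS_ref`; the type and function names are hypothetical. For every nonzero the sweep reads a matrix value, a column index and an entry of `x`, while only one entry of `x` per row is written, which is why the traffic is dominated by reads.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Compressed Row Storage: row_ptr has nrows + 1 entries; the nonzeros of row i
// are values[row_ptr[i] .. row_ptr[i+1]-1] with column indices in col_idx.
struct CrsMatrix {
    std::size_t nrows = 0;
    std::vector<std::size_t> row_ptr;
    std::vector<int> col_idx;
    std::vector<double> values;
};

// Relaxes row i in place: x_i = (b_i - sum_{j != i} a_ij * x_j) / a_ii.
static void relax_row(const CrsMatrix& A, const std::vector<double>& b,
                      std::vector<double>& x, std::size_t i) {
    double sum = b[i];
    double diag = 0.0;
    for (std::size_t k = A.row_ptr[i]; k < A.row_ptr[i + 1]; ++k) {
        const std::size_t j = static_cast<std::size_t>(A.col_idx[k]);   // read index
        if (j == i) {
            diag = A.values[k];
        } else {
            sum -= A.values[k] * x[j];                                   // read value and x_j
        }
    }
    assert(diag != 0.0);
    x[i] = sum / diag;                                                   // one write per row
}

// One symmetric Gauss-Seidel sweep: forward over all rows, then backward.
void symgs(const CrsMatrix& A, const std::vector<double>& b, std::vector<double>& x) {
    assert(A.row_ptr.size() == A.nrows + 1 && b.size() == A.nrows && x.size() == A.nrows);
    for (std::size_t i = 0; i < A.nrows; ++i) relax_row(A, b, x, i);
    for (std::size_t i = A.nrows; i-- > 0;) relax_row(A, b, x, i);
}
```

Repeated sweeps converge for symmetric positive definite matrices; for example, the 3x3 matrix with 2 on the diagonal and -1 on the off-diagonals and right-hand side (1, 0, 1) yields x = (1, 1, 1).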

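The maximum sustained load bandwidth was measured with the following call to `likwid-bench`, using one workgroup per memory domain (M0 and M1), each with a 1 GB working set and 10 threads:

```sh
likwid-bench -t load_avx -w M0:1GB:10:1:2 -w M1:1GB:10:1:2
```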
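The idea behind a load-only benchmark can be sketched in a few lines: stream through an array much larger than the caches, only reading it, and divide the data volume by the elapsed time. This is a simplified, single-threaded stand-in written for illustration, not the AVX kernel used by `likwid-bench`. It uses a plain scalar reduction and no pinning, so it will typically report lower numbers than a tuned, vectorized multi-core run. The names `LoadResult` and `load_bandwidth_gbytes_per_s` are hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <numeric>
#include <vector>

// Result of one load-only run; the sum keeps the compiler from removing the loads.
struct LoadResult {
    double sum;
    double gbytes_per_s;
};

// Reads every element of `data` `repetitions` times and reports the achieved
// load bandwidth in GBytes/s (1 GByte = 1e9 bytes).
LoadResult load_bandwidth_gbytes_per_s(const std::vector<double>& data, int repetitions) {
    assert(!data.empty() && repetitions > 0);
    double sum = 0.0;
    const auto start = std::chrono::steady_clock::now();
    for (int r = 0; r < repetitions; ++r) {
        sum += std::accumulate(data.begin(), data.end(), 0.0);   // loads only, no stores
    }
    const auto stop = std::chrono::steady_clock::now();
    const double seconds = std::chrono::duration<double>(stop - start).count();
    const double bytes = static_cast<double>(data.size()) * sizeof(double) * repetitions;
    return {sum, seconds > 0.0 ? bytes / seconds * 1.0e-9 : 0.0};
}
```

For a meaningful result the array must be much larger than the last-level cache, for example `std::vector<double> data(1 << 27, 1.0)`, which occupies 1 GiB.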

For application benchmarking, `likwid-mpirun` was used to pin processes to cores. For example, to run 3 processes on the first three cores of one socket, the following call can be used:

```sh
likwid-mpirun -np 3 -nperdomain M:10 -omp intel ./xhpcg
```

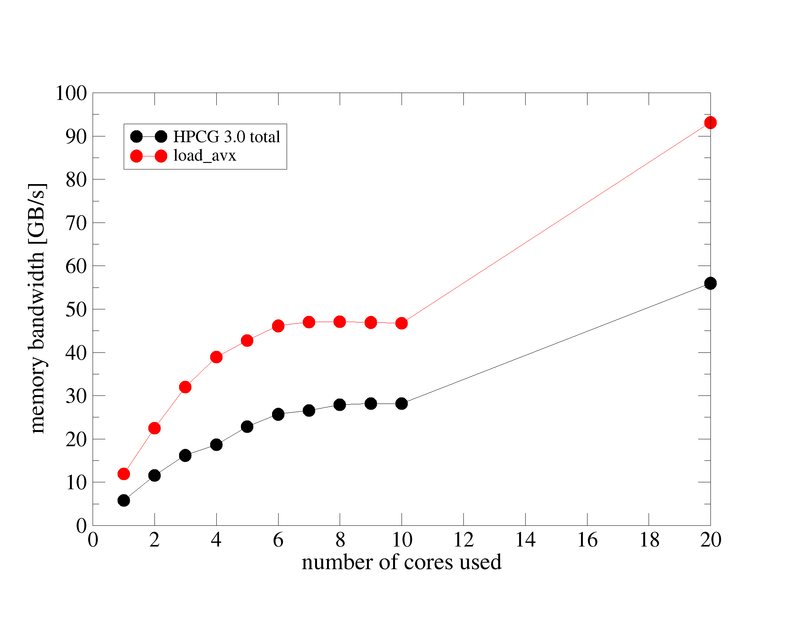

Next we plot bandwidth as reported by HPCG vs bandwidth measured with the synthetic load benchmark.

Scaling from one socket to two is linear, as expected. Still, HPCG only reaches roughly half of the bandwidth measured with the AVX load-only benchmark. Both curves show saturating behavior, which is less pronounced for HPCG. Since the smoother is dominated by loads, HPCG should reach the same saturated memory bandwidth as the synthetic load benchmark. To verify the numbers reported by the benchmark, the SYMGS loop was instrumented with the LIKWID Marker API so that its bandwidth could be measured with hardware performance monitoring.

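The following changes were made to the code (only the changes are shown).

In `main.cpp`:

```cpp
#include <likwid.h>   // marker macros are only active when compiled with -DLIKWID_PERFMON

int main(int argc, char * argv[]) {
#ifndef HPCG_NO_MPI
  MPI_Init(&argc, &argv);
#endif

  LIKWID_MARKER_INIT;        // initialize the Marker API once per process
  LIKWID_MARKER_THREADINIT;  // per-thread initialization

  ...

  LIKWID_MARKER_CLOSE;       // write the region results for likwid-perfctr

  // Finish up
#ifndef HPCG_NO_MPI
  MPI_Finalize();
#endif
  return 0;
}
```

and in `ComputeSYMGS_ref.cpp`:

```cpp
#include <likwid.h>
...
  LIKWID_MARKER_START("SYMGS");   // start of the measured region
  for (local_int_t i=0; i< nrow; i++) {
    ...
  }
  LIKWID_MARKER_STOP("SYMGS");    // end of the measured region

  return 0;
```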

The code was again executed with `likwid-mpirun`, which can also act as a wrapper around likwid-perfctr. The measurement was performed with one process; `-m` indicates that the code is instrumented with the Marker API, and `-g MEM` selects the MEM performance group.

```sh
likwid-mpirun -np 1 -nperdomain M:10 -omp intel -m -g MEM ./xhpcg
```

The result is as follows:

```text
+-----------------------------------+-----------+
| Metric                            | e0902:0:0 |
+-----------------------------------+-----------+
| Runtime (RDTSC) [s]               |   53.9665 |
| Runtime unhalted [s]              |   53.5799 |
| Clock [MHz]                       | 2200.0474 |
| CPI                               |    0.8546 |
| Memory read bandwidth [MBytes/s]  | 8762.7772 |
| Memory read data volume [GBytes]  |  472.8961 |
| Memory write bandwidth [MBytes/s] |  209.2554 |
| Memory write data volume [GBytes] |   11.2928 |
| Memory bandwidth [MBytes/s]       | 8972.0326 |
| Memory data volume [GBytes]       |  484.1888 |
+-----------------------------------+-----------+
```

The data volume is dominated by loads. The total memory bandwidth for single-core execution is about 9 GB/s, significantly higher than the 5.8 GB/s reported by HPCG itself. In other words, the benchmark does not see the full bandwidth, which indicates that not all of the data transferred from main memory is actually consumed. The suspicion is that the performance pattern "Excess data volume" applies.
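The derived metrics in the table can be checked by hand, since bandwidth is simply data volume divided by runtime. The short sketch below redoes this arithmetic with the values from the table and also computes which fraction of the measured bandwidth HPCG itself accounts for, using the 5.8 GB/s quoted above. It assumes, as the units in the output suggest, 1 GByte = 1000 MBytes; the function names are hypothetical.

```cpp
#include <cassert>

// Bandwidth in MBytes/s from a data volume in GBytes and a runtime in seconds
// (1 GByte = 1000 MBytes, matching the units of the likwid-perfctr output).
double bandwidth_mbytes_per_s(double volume_gbytes, double runtime_s) {
    assert(runtime_s > 0.0);
    return volume_gbytes * 1000.0 / runtime_s;
}

// Fraction of the measured memory bandwidth that the application accounts for.
double useful_fraction(double reported_gbytes_per_s, double measured_mbytes_per_s) {
    assert(measured_mbytes_per_s > 0.0);
    return reported_gbytes_per_s * 1000.0 / measured_mbytes_per_s;
}
```

With 484.1888 GBytes in 53.9665 s, `bandwidth_mbytes_per_s` gives about 8972 MBytes/s, matching the reported memory bandwidth. `useful_fraction(5.8, 8972.0326)` is about 0.65, so roughly a third of the transferred data is not accounted for by the benchmark.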

A paper by IBM on optimizing HPCG, which can be found online, reports that the reference code calls `new` for every single row of the matrix and that each allocation is small (the number of nonzeros per row). This may lead to memory fragmentation and could explain the lower bandwidth observed by the benchmark itself. One consequence of fragmentation is that the hardware prefetcher, which works at 4 kB page granularity, may fetch more data than is actually required. To confirm the suspicion of excess data volume, I introduced a memory pool with a single `new` per matrix array and only set the pointers into the pool for every row of the matrix.

The code in `./src/GenerateProblem_ref.cpp` changes from:

```cpp
// Now allocate the arrays pointed to
for (local_int_t i=0; i< localNumberOfRows; ++i) {
  mtxIndL[i] = new local_int_t[numberOfNonzerosPerRow];
  matrixValues[i] = new double[numberOfNonzerosPerRow];
  mtxIndG[i] = new global_int_t[numberOfNonzerosPerRow];
}
```

to

```cpp
// One contiguous pool per array instead of one small allocation per row
local_int_t* mtxIndexL_pool = new local_int_t[localNumberOfRows*numberOfNonzerosPerRow];
double* matrixValues_pool = new double[localNumberOfRows*numberOfNonzerosPerRow];
global_int_t* mtxIndexG_pool = new global_int_t[localNumberOfRows*numberOfNonzerosPerRow];

// Now set the row pointers into the pools
for (local_int_t i=0; i< localNumberOfRows; ++i) {
  local_int_t offset = numberOfNonzerosPerRow*i;  // start of row i in each pool
  mtxIndL[i] = mtxIndexL_pool+offset;
  matrixValues[i] = matrixValues_pool+offset;
  mtxIndG[i] = mtxIndexG_pool+offset;
}
```
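With the pool, the row pointers no longer own their memory, so any code that deletes the rows one by one would free invalid pointers. One way to avoid this is to tie ownership to the pool itself. The sketch below is a hypothetical helper, not part of HPCG: it uses a `std::vector` as the pool, so the memory is released exactly once and automatically, and it only hands out non-owning row pointers. `RowPool`, `to_size` and the member names are invented for this example, and `local_int_t` is assumed to be `int`, HPCG's default.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using local_int_t = int;   // assumption: HPCG's default typedef for local indices

// Converts a non-negative local_int_t to std::size_t.
inline std::size_t to_size(local_int_t v) {
    assert(v >= 0);
    return static_cast<std::size_t>(v);
}

// Owns one contiguous block for all rows of a matrix array and hands out
// non-owning row pointers. The block is freed once, when the pool is destroyed,
// so the rows must never be deleted individually.
template <typename T>
class RowPool {
public:
    RowPool(local_int_t nrows, local_int_t row_length)
        : row_length_(to_size(row_length)),
          storage_(to_size(nrows) * row_length_),
          rows_(to_size(nrows)) {
        for (std::size_t i = 0; i < rows_.size(); ++i) {
            rows_[i] = storage_.data() + i * row_length_;   // row i starts at i * row_length
        }
    }

    // Copying would leave the copied row pointers aimed at the original block.
    RowPool(const RowPool&) = delete;
    RowPool& operator=(const RowPool&) = delete;

    T* row(local_int_t i) { return rows_[to_size(i)]; }

    // Array of row pointers, usable where code expects e.g. a double** per matrix.
    T** row_pointers() { return rows_.data(); }

private:
    std::size_t row_length_;
    std::vector<T> storage_;   // the single allocation holding all rows
    std::vector<T*> rows_;     // non-owning pointers into storage_
};
```

The design choice is that the lifetime of the rows is bound to one object, so no per-row cleanup code has to be disabled; how such a pool would be stored alongside HPCG's matrix structure is left open here.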

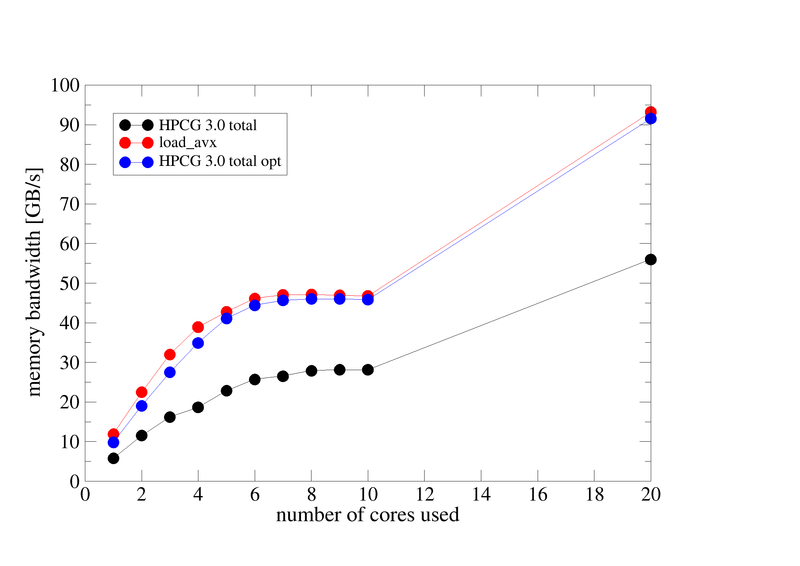

To prevent at a segvault the deallocation of memory for the array has to be commented out. The performance on one socket changes to 6 GFlops/s which is an increase of 38%.

The bandwidth scaling now looks like the following:

The bandwidth seen by the benchmark is now close enough to that of the load-only benchmark to confirm that HPCG is memory bandwidth limited. To finally confirm the suspected performance pattern, we measure the data volume again with likwid-perfctr:

```text
+-----------------------------------+-----------+
| Metric                            | e1102:0:0 |
+-----------------------------------+-----------+
| Runtime (RDTSC) [s]               |   33.6473 |
| Runtime unhalted [s]              |   33.5050 |
| Clock [MHz]                       | 2199.8956 |
| CPI                               |    0.5011 |
| Memory read bandwidth [MBytes/s]  | 8168.5019 |
| Memory read data volume [GBytes]  |  274.8479 |
| Memory write bandwidth [MBytes/s] |  301.9722 |
| Memory write data volume [GBytes] |   10.1605 |
| Memory bandwidth [MBytes/s]       | 8470.4741 |
| Memory data volume [GBytes]       |  285.0084 |
+-----------------------------------+-----------+
```

The memory data volume decreased from 484 GB to 285 GB. This confirms that the benchmark was indeed suffering from excess data volume. After the fix, the performance pattern is now "Bandwidth saturation".

This example illustrates the interplay between static code review, application benchmarking, microbenchmarking and performance profiling. The fact that the memory bandwidth experienced by the application differs from the actual memory bandwidth is very difficult to diagnose without an HPM tool. After the optimization, the performance profile is used to confirm and quantify its effect.
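The size of the effect can be quantified directly from the two likwid-perfctr tables. The small sketch below uses a hypothetical `RegionMeasurement` struct holding the runtime and memory data volume of the SYMGS region and computes the relative reduction in data volume and the runtime ratio between the original and the pooled version.

```cpp
#include <cassert>

// Runtime and memory data volume of one measured region
// (values taken from the likwid-perfctr MEM group output).
struct RegionMeasurement {
    double runtime_s;
    double volume_gbytes;
};

// Relative reduction of the memory data volume, e.g. 0.25 for 25 percent less traffic.
double volume_reduction(const RegionMeasurement& before, const RegionMeasurement& after) {
    assert(before.volume_gbytes > 0.0);
    return 1.0 - after.volume_gbytes / before.volume_gbytes;
}

// Runtime ratio (speedup) of the region: values above 1 mean the new version is faster.
double runtime_ratio(const RegionMeasurement& before, const RegionMeasurement& after) {
    assert(after.runtime_s > 0.0);
    return before.runtime_s / after.runtime_s;
}
```

With the measured values (53.9665 s and 484.1888 GB before, 33.6473 s and 285.0084 GB after), `volume_reduction` yields about 0.41 and `runtime_ratio` about 1.6: the region moves roughly 41 percent less data and runs about 1.6 times faster, while the memory bandwidth stays in the range of roughly 8.5 to 9 GB/s, as expected for a bandwidth-bound code.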

Links and further information

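- Likwid Wiki page for likwid-perfctr: https://github.com/RRZE-HPC/likwid/wiki/likwid-perfctr

- Intel VTune Amplifier command line tool: https://software.intel.com/en-us/vtune-amplifier-help-command-line-interface

- Wikipedia list of performance analysis tools: https://en.wikipedia.org/wiki/List_of_performance_analysis_tools

- VI-HPS tool list: https://www.vi-hps.org/tools/tools.html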