Difference between revisions of "Scheduling Basics"

| (25 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| + | [[Category:Basics]] | ||

| + | This is an overview over the basic concepts/goals of a scheduler. An overview which HPC centers uses which scheduler, can be found [[Schedulers|here]]. Further Information about [[Batch-Scheduler|Batch-Schedulers]] are available, as well as specific information about the schedulers [[SLURM]], [[LSF]] and [[Torque]]. | ||

| + | |||

| + | __TOC__ | ||

| + | |||

== General == | == General == | ||

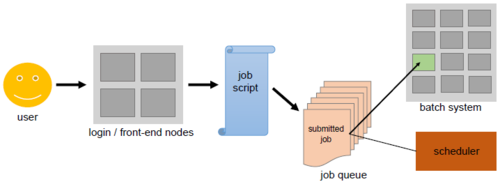

[[File:Batch_System.PNG|thumb|500px|Schematic of how users can access the batch system]] | [[File:Batch_System.PNG|thumb|500px|Schematic of how users can access the batch system]] | ||

| − | A scheduler is software that | + | A scheduler is software that implements a ''batch system'' on a HPC (cluster). Users do not run their calculations directly and interactively (as they do on their personal workstations or laptops), instead they submit non-interactive ''batch jobs'' to the scheduler.<br /> |

| + | The scheduler stores the batch jobs, evaluate their resource requirements and priorities, and distributes the jobs to suitable ''compute nodes''. These work horses make up the majority of HPC clusters (about 98%), being their most powerful, but also the most power consuming parts. | ||

| − | + | In contrast to the login nodes (for compiling and testing user software) and their interactive usage, these compute nodes are usually not directly accessible (via <code>ssh</code>). | |

| − | |||

| − | + | The scheduler is thus the interface for the users on the [[Nodes#Login|login nodes]] to send work to the compute nodes. | |

| − | + | This requires the user to ask the scheduler for time and memory resources and to specify the application inside a [[jobscript]]. | |

| − | |||

| + | This jobscript can then be submitted to the batch system via the scheduler, which will first add the job to a job queue. Based on the resources the job needs, the scheduler will decide when the job will leave the queue, and on which (part of the) back-end nodes it will run. | ||

| + | |||

| + | Be careful about the resources you request and know your system's limits. For example, if you | ||

| + | * demand less time than your job actually needs to finish, the scheduler will simply kill the job once the time allocated is up. | ||

| + | * specify more memory than there is available on the system, your job might be stuck in the queue forever. | ||

== Purpose == | == Purpose == | ||

| Line 28: | Line 37: | ||

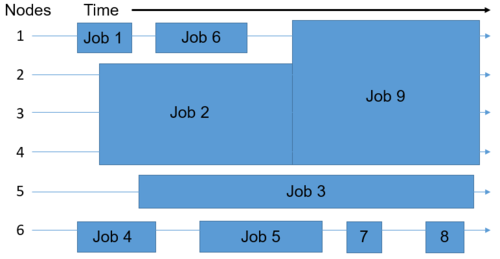

Assuming that the batch system you are using consists of 6 nodes, this is how the scheduler could place the nine jobs in the queue onto the available nodes. | Assuming that the batch system you are using consists of 6 nodes, this is how the scheduler could place the nine jobs in the queue onto the available nodes. | ||

| − | The goal is to eliminate the free areas | + | The goal is to eliminate wasted resources, which can be identified on the right by looking at the free areas depicting nodes without any job execution on them. |

Therefore, the jobs may not be distributed among the nodes in the same order in which they first entered the queue. | Therefore, the jobs may not be distributed among the nodes in the same order in which they first entered the queue. | ||

| − | The space that a job | + | The space that a job takes up is determined by the time and number of nodes required for executing it. |

| + | The schema to the right shows only ''two'' dimensions - ''nodes'' and ''time''. In reality, ''many'' parameters determine where and when the scheduler will place a job for execution (i.e. required runtime, required number of cores, required main memory, required special features like accelerators to name just a few). To put it simply: with all jobs' resource requirements being the dropping blocks and the available resources in the cluster being the confinement, the scheduler needs to play kind of "multidimensional tetris" to fill the cluster's nodes evenly and efficiently. | ||

== Scheduling Algorithms == | == Scheduling Algorithms == | ||

| − | There two very basic strategies that schedulers can use to determine which job to run next. Note that modern schedulers do not stick strictly to just one of these algorithms, but rather employ a combination of the two. | + | There are two very basic strategies that schedulers can use to determine which job to run next. Note that modern schedulers do not stick strictly to just one of these algorithms, but rather employ a combination of the two. |

Besides, there are many more aspects a scheduler has to take into consideration, e. g. the current system load. | Besides, there are many more aspects a scheduler has to take into consideration, e. g. the current system load. | ||

'''First Come, First Serve''' | '''First Come, First Serve''' | ||

| − | Jobs are run in the exact same order in which they first enter the queue. The advantage is that every job will definitely be run, however, very tiny jobs might wait for an inadequately long time compared to their actual execution time. | + | Jobs are run in the exact same order in which they first enter the queue. The advantage is that every job will definitely be run, however, very tiny jobs might wait for an inadequately long time compared to their actual execution time or all jobs would need to wait at some point if one user submits several thousand jobs at the same time. |

'''Shortest Job First''' | '''Shortest Job First''' | ||

| − | Based on the execution time | + | Based on the execution time declared in the [[jobscript]], the scheduler can estimate how long it will take to execute the job. Then, the jobs are ranked by that time from shortest to longest. While short jobs will start after a short waiting time, long running jobs (or at least jobs declared as such) might never actually start. |

| + | |||

| + | '''Backfilling''' | ||

| + | |||

| + | When backfilling the scheduler maintains the concept of "First Come, First Serve" without preventing long running jobs to execute. The scheduler checks whether the first job in the queue can be executed. If that is true, the job is executed without further delay. But if not, the scheduler goes through the rest of the queue to check whether another job can be executed without extending the waiting time of the first job in queue. If it finds such a job, the scheduler simply runs the job. Since jobs, which only need a few compute resources, are easily "backfillable", small jobs will usually encounter short queue times. | ||

Latest revision as of 13:06, 11 September 2024

This is an overview over the basic concepts/goals of a scheduler. An overview which HPC centers uses which scheduler, can be found here. Further Information about Batch-Schedulers are available, as well as specific information about the schedulers SLURM, LSF and Torque.

General

A scheduler is software that implements a batch system on a HPC (cluster). Users do not run their calculations directly and interactively (as they do on their personal workstations or laptops), instead they submit non-interactive batch jobs to the scheduler.

The scheduler stores the batch jobs, evaluate their resource requirements and priorities, and distributes the jobs to suitable compute nodes. These work horses make up the majority of HPC clusters (about 98%), being their most powerful, but also the most power consuming parts.

In contrast to the login nodes (for compiling and testing user software) and their interactive usage, these compute nodes are usually not directly accessible (via ssh).

The scheduler is thus the interface for the users on the login nodes to send work to the compute nodes.

This requires the user to ask the scheduler for time and memory resources and to specify the application inside a jobscript.

This jobscript can then be submitted to the batch system via the scheduler, which will first add the job to a job queue. Based on the resources the job needs, the scheduler will decide when the job will leave the queue, and on which (part of the) back-end nodes it will run.

Be careful about the resources you request and know your system's limits. For example, if you

- demand less time than your job actually needs to finish, the scheduler will simply kill the job once the time allocated is up.

- specify more memory than there is available on the system, your job might be stuck in the queue forever.

Purpose

Generally speaking, every scheduler has three main goals:

- minimize the time between the job submission and finishing the job: no job should stay in the queue for extensive periods of time

- optimize CPU utilization: the CPUs of the supercomputer are one of the core resources for a big application; therefore, there should only be few time slots where a CPU is not working

- maximize the job throughput: manage as many jobs per time unit as possible

Illustration

Assuming that the batch system you are using consists of 6 nodes, this is how the scheduler could place the nine jobs in the queue onto the available nodes. The goal is to eliminate wasted resources, which can be identified on the right by looking at the free areas depicting nodes without any job execution on them. Therefore, the jobs may not be distributed among the nodes in the same order in which they first entered the queue. The space that a job takes up is determined by the time and number of nodes required for executing it.

The schema to the right shows only two dimensions - nodes and time. In reality, many parameters determine where and when the scheduler will place a job for execution (i.e. required runtime, required number of cores, required main memory, required special features like accelerators to name just a few). To put it simply: with all jobs' resource requirements being the dropping blocks and the available resources in the cluster being the confinement, the scheduler needs to play kind of "multidimensional tetris" to fill the cluster's nodes evenly and efficiently.

Scheduling Algorithms

There are two very basic strategies that schedulers can use to determine which job to run next. Note that modern schedulers do not stick strictly to just one of these algorithms, but rather employ a combination of the two. Besides, there are many more aspects a scheduler has to take into consideration, e. g. the current system load.

First Come, First Serve

Jobs are run in the exact same order in which they first enter the queue. The advantage is that every job will definitely be run, however, very tiny jobs might wait for an inadequately long time compared to their actual execution time or all jobs would need to wait at some point if one user submits several thousand jobs at the same time.

Shortest Job First

Based on the execution time declared in the jobscript, the scheduler can estimate how long it will take to execute the job. Then, the jobs are ranked by that time from shortest to longest. While short jobs will start after a short waiting time, long running jobs (or at least jobs declared as such) might never actually start.

Backfilling

When backfilling the scheduler maintains the concept of "First Come, First Serve" without preventing long running jobs to execute. The scheduler checks whether the first job in the queue can be executed. If that is true, the job is executed without further delay. But if not, the scheduler goes through the rest of the queue to check whether another job can be executed without extending the waiting time of the first job in queue. If it finds such a job, the scheduler simply runs the job. Since jobs, which only need a few compute resources, are easily "backfillable", small jobs will usually encounter short queue times.