Difference between revisions of "OpenMP"

| (22 intermediate revisions by 6 users not shown) | |||

| Line 1: | Line 1: | ||

| − | OpenMP is an | + | [[Category:HPC-Developer]] |

| + | [https://www.openmp.org OpenMP] is an open standard API for Shared Memory [[Parallel_Programming|parallelization]] in C, C++ and Fortran which consist of compiler dircetives, runtime routines and environment variables. | ||

| + | The first version was published for Fortran in 1997 with the C/C++ standard following a year later. Version 2.0 was available in 2000 for Fortran and C/C++ in 2002, respectively. The version 2.5 from 2005 was the first combined specification for both Fortran and C/C++ . By then the main focus had been on parallelizing highly regular loops, which were considered the main performance hotspot for scientific programs. OpenMP 3.0 introduced the concept of tasking in 2008 while OpenMP 4.0 added support for accelerators in 2013. The current version OpenMP 5.0 was released in November 2018. As the implementation of sophisticated features can be cumbersome, not all achievements must be fully supported by all compilers and OSes. | ||

| + | |||

| + | Information on how to run an existing OpenMP program can be found in the "[[How to Use OpenMP]]"-Section. | ||

| + | |||

| + | __TOC__ | ||

== General == | == General == | ||

| − | OpenMP | + | |

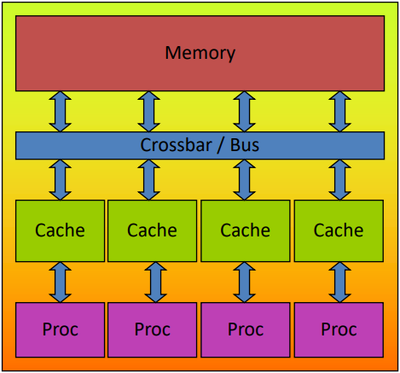

| + | In the OpenMP Shared-Memory Parallel Programming Model all processors/cores access a shared main memory and employ multiple threads. | ||

| + | |||

| + | [[File:OpenMPSharedMem.png|400px]] | ||

| + | |||

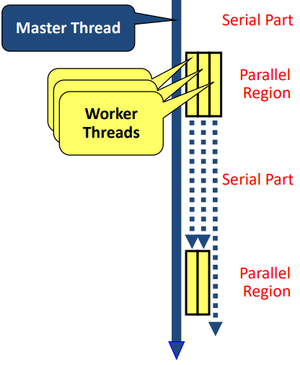

| + | A program is launched with one single thread as a master thread. When entering a parallel region, worker threads are spawned and form a team with the master. Upon leaving the parallel region these worker threads are typically put to sleep (instead of actual joining) by the OpenMP runtime until the program reaches its next parallel region. This Fork-Join concept allows incremental [[Parallel_Programming|parallelization]]: starting from serial code, the hot spots are parallelised one by one (typically still allowing serial compilation and execution). As a result all parallel regions must be defined explicitly. Furthermore there may only be exactly one entry point at the top of the region and exactly one exit point at the bottom and Branching out is not allowed. Termination, however, is allowed. The number of work threads to be spawned is controlled by an internal control variable, which value can be defined explicitely at the entry point. If this is not specified, OpenMP runtime will use the environment variable <code>OMP_NUM_THREADS</code>, and if it is not set choose a number of threads according implementation-defined rules (often: 'use all cores'). | ||

| + | |||

| + | [[File:OpenMPForkJoin.png|300px]] | ||

| + | |||

| + | == OpenMP Constructs == | ||

| + | |||

| + | === Parallel regions === | ||

| + | |||

| + | OpenMP functionalities are added to a program by use of pragmas. For example, the following pragma defines a parallel region with 4 threads. | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | #pragma omp parallel num_threads(4) | ||

| + | { | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | !$omp parallel num_threads(4) | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | !$omp end parallel | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | === For Worksharing === | ||

| + | |||

| + | In the above program all threads would execute the entire structured block in parallel. Obviously, this does not result in a speedup of the program unless worksharing is applied. The most commonly used OpenMP Worksharing construct is ''for'', which allows splitting up loops and distributing the work over all threads. | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | int i; | ||

| + | #pragma omp parallel num_threads(4) | ||

| + | { | ||

| + | #pragma omp for | ||

| + | for (i = 0; i < 100; i++) | ||

| + | { | ||

| + | a[i] = b[i] + c[i]; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | INTEGER :: i | ||

| + | !$omp parallel num_threads(4) | ||

| + | !$omp do | ||

| + | DO i = 0, 99 | ||

| + | a[i] = b[i] + c[i] | ||

| + | END DO | ||

| + | !$omp end parallel | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | In the above example with 100 iterations and 4 threads the iterations would be split into 4 equally sized chunks (0,24), (25,49), (50,74) and (75,99). Other distributions can be specified by using the ''schedule clause'': | ||

| + | |||

| + | * <code>schedule(static [, chunk])</code>: Iteration space divided in to blocks of size chunk and assigned to threads in a round-robin fashion. (default #threads blocks) | ||

| + | * <code>schedule(dynamic [, chunk])</code>: Iteration space split into blocks of size chunk (default 1) and assigned to threads in the order in which they finish previous blocks | ||

| + | * <code>schedule(guided, [, chunk])</code>: similar to dynamic, but block size decreases exponentially from an implementation-defined value to chunk | ||

| + | |||

| + | |||

| + | However, not all loops can be parallelized this way. In order to split the workload as shown there must not be any dependencies between the iterations, i.e. no thread may write to a memory location that another thread reads from. If in doubt, check if a backward exectuion of the loop would have the same result. Pleas note that this simple test is only a necessary condition that works for most cases, but not a sufficient condition! | ||

| + | |||

| + | === Data Scoping === | ||

| + | |||

| + | In OpenMP, variables are divided into shared and private. This can be set when creating a parallel region or ''for''-construct. The default for parallel regions is shared for all already-existing variables while all variables created within a parallel region are private. Static variables are also shared. The following options are available: | ||

| + | |||

| + | * <code>shared(VariableList)</code>: one shared instance that can be read from and written to by all threads | ||

| + | * <code>private(VariableList)</code>: a new uninitialized instance is created for each thread | ||

| + | * <code>firstprivate(VariableList)</code>: a new instance is created for each thread and initialized with the Master's value | ||

| + | * <code>lastprivate(VariableList)</code>: a new uninitialized instance is created for each thread and the value of the last loop iteration is written back to the Master | ||

| + | |||

| + | Global/static variables can also be privatized by using <code>#pragma omp threadprivate(VariableList)</code> or <code>!$omp threadprivate(VariableList)</code>. This creates an instance for each thread ''before'' the first parallel region is reached which will exist until the program finishes. Please note that this does not work too well with nested parallel regions, so it is recommended to avoid using threadprivate and static variables. | ||

| + | |||

| + | === Synchronization === | ||

| + | |||

| + | The utilization of multiple threads requires a certain amount of synchronization to maintain correctness. OpenMP offers the Barrier construct to ensure all threads work in the same section of the program. Implicit or explicit barriers can be set with <code>#pragma omp barrier</code>. Additionally, all Workshare constructs provided by OpenMP contain an implicit barrier at the end. | ||

| + | |||

| + | Options such as critical sections should be treated with caution when using OpenMP, as they may strongly decrease the programs speedup and scaling by forcing all threads to run almost serial. If there is a data dependency between iterations of a for-loop where for example the results of all iterations should be summed up and stored in one variable the operation of choice would be the ''reduction''-clause. This creates a private instance of the shared variable that is to receive the final result for each threads which are then combined once all threads have finished. The available reduction values and their initialization values are: | ||

| + | |||

| + | * <code>+ (0)</code>, <code>* (1)</code>, <code>- (0)</code> | ||

| + | * <code>& (~0)</code>, <code>| (0)</code>, <code>&& (0)</code>, <code>|| (0)</code>, <code>^ (0)</code> | ||

| + | * <code>min (largest number)</code>, <code>max (smallest number)</code> | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | int i, s=0; | ||

| + | #pragma omp parallel num_threads(4) | ||

| + | { | ||

| + | #pragma omp for reduction (+:sum) | ||

| + | for (i = 0; i < 100; i++) | ||

| + | { | ||

| + | sum = sum + a[i]; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | INTEGER :: i | ||

| + | INTEGER :: sum | ||

| + | !$omp parallel num_threads(4) | ||

| + | !$omp do reduction(+:sum) | ||

| + | DO i = 0, 99 | ||

| + | sum = sum + a[i] | ||

| + | END DO | ||

| + | !$omp end parallel | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | === Single and Master === | ||

| + | |||

| + | Most programs contain several parts where parallelization is impossible or unnecessary, e.g. I/O or memory allocation/deallocation. However, if these parts are rather small and right in between two larger parallel regions putting the worker threads to sleep and reinstating them may cause a significant overhead. In these cases it is advisable to use the single or master constructs. | ||

| + | |||

| + | The single construct encloses a structured block which may only be executed by exactly one thread of the team while the others wait at the implicit barrier at its end. The runtime decides which thread this will be, e.g. the first that reaches the section. | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | #pragma omp single | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | !$omp single | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | !$omp end single | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | The master construct encloses a structured block which may only be executed by the master thread. Please notice that the master construct is ''not'' a workshare construct and therefore does ''not'' contain an implicit barrier at its end. | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | #pragma omp master | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | !$omp master | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | !$omp end master | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | === Tasking === | ||

| + | |||

| + | A task is a structured block enclosed by the following pragma which may be executed by any member of the thread team. | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''C/C++''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | #pragma omp task | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | </syntaxhighlight> | ||

| + | |||

| + | | '''Fortran''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | !$omp task | ||

| + | ... | ||

| + | structured block | ||

| + | ... | ||

| + | !$omp end task | ||

| + | </syntaxhighlight> | ||

| + | |} | ||

| + | |||

| + | Clauses that can be used with the task construct are: | ||

| + | |||

| + | * Data scoping: private(list), firstprivate(list), shared(list), default(shared|none), in_reduction(r-id: list) | ||

| + | * Dependencies: depend(dep-type: list)) | ||

| + | * Cutoff Strategies: if(expression), mergeable, final(expression) | ||

| + | * Priorities: priority(priority-value) | ||

| + | * untied (more than one thread may execute this structured block) | ||

| + | |||

| + | Similarly to parallel regions static and global variables are shared for tasks while local variables are private. Variables of orphaned tasks are firstprivate by default, while those of non-orphaned tasks are shared. The cutoff strategies listed above are used to optimize task creation and execution. They may reduce parallelism but also the amount of tasks to finish as tasks with an if-clause which evaluates to false are suspended immediatly (''undeferred tasks''). Tasks are especially useful for recursive parts with bad [[Load Balancing]] but do add some overhead which will become noticable if the tasks become too fine-grained. | ||

| + | |||

| + | A popular example where tasking performs much better than threading is the recursive approach to Fibonacci: | ||

| + | |||

| + | {| class="wikitable" style="width: 60%;" | ||

| + | | '''Serial''' | ||

| + | <syntaxhighlight lang="c"> | ||

| + | int main(int argc, char* argv[]) | ||

| + | { | ||

| + | ... | ||

| + | fib(42) | ||

| + | ... | ||

| + | } | ||

| + | |||

| + | int fib(int n) | ||

| + | { | ||

| + | if (n < 2) return n; | ||

| + | int x = fib(n-1); | ||

| + | int y = fib(n-2); | ||

| + | return x+y; | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |style="width: 50%; vertical-align:top"|'''Notes''' | ||

| + | |||

| + | |||

| + | Enclosing the calling of the recursive function fib in main with a parallel region will simply result in fib being called by multiple threads who then all do duplicated work. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | Trying to parallelize fib itself only with threads proves to be rather tricky. As threads cannot pause there current work one is bound to end up in a deadlock, where all threads wait for the others to finish. | ||

| + | |- | ||

| + | | '''Parallelized with Tasking''' | ||

| + | <syntaxhighlight lang="fortran"> | ||

| + | int main(int argc, char* argv[]) | ||

| + | { | ||

| + | ... | ||

| + | #pragma omp parallel | ||

| + | { | ||

| + | #pragma omp single | ||

| + | { | ||

| + | fib(42) | ||

| + | } | ||

| + | } | ||

| + | ... | ||

| + | } | ||

| + | |||

| + | int fib(int n) | ||

| + | { | ||

| + | if (n < 2) return n; | ||

| + | if (n <= 30) return serfib(n); | ||

| + | int x,y; | ||

| + | #pragma omp task shared(x) | ||

| + | { | ||

| + | x = fib(n-1); | ||

| + | } | ||

| + | #pragma omp task shared(y) | ||

| + | { | ||

| + | y = fib(n-2); | ||

| + | } | ||

| + | #pragma omp taskwait | ||

| + | { | ||

| + | return x+y; | ||

| + | } | ||

| + | } | ||

| + | </syntaxhighlight> | ||

| + | |style="width: 50%; vertical-align:top"|'''Notes''' | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | <code>#pragma omp single</code>: As mentioned before, calling fib with multiple threads results in duplicated, not parallel work. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | <code>if (n <= 30) return serfib(n)</code>: serfib refers to the serial version of fib. This threshold greatly increases the performance, as tasks with smaller input become too fine-grained and therefore the overhead overshadows the speedup by parallelization. | ||

| + | |||

| + | |||

| + | <code>#pragma omp task shared(x)</code>: 2 tasks are created in every recursive call which will be picked up and executed by whichever thread finishes at the time. Tasks can be put on halt in favor of others so the deadlock scenario is avoided. | ||

| + | |||

| + | |||

| + | <code>#pragma omp taskwait</code>: The taskwait before summing up the result is essential to ensure that all tasks descending the one in question have finished before summing up and passing on the result. | ||

| + | |||

| + | |} | ||

| + | |||

| + | |||

| + | |||

| + | Tasks are tied to the thread which executes them first and not their creator. Once tied they may only be executed by the very same thread and can only be suspended at task scheduling points (creation, finish, <code>taskwait</code>, <code>barrier</code>, <code>taskyield</code>). <code>taskyield</code> may be used for optimization and deadlock prevention, enabling the suspension of a task in favor of other tasks. Until suspension or completion of a task the executing thread may only switch to a direct descendant of all the task tied to it. When using the ''untied''-clause, a task may be halted at scheduling points and later resumed by the same or another thread. While this grants a lot of opportunities it also enables a lot of interesting and unexpected results. Once again, one should especially avoid the use of <code>threadprivate</code> and also the use of thread-ids. Critical regions and locks can also cause plenty of problems. If in doubt, a safer alternative would be using <code>#pragma omp task if(0)</code>. | ||

| + | |||

| + | |||

| + | |||

| + | == Examples for OpenMP programs == | ||

| + | |||

| + | Hello World: | ||

<syntaxhighlight lang="c"> | <syntaxhighlight lang="c"> | ||

| + | #include <stdio.h> | ||

| + | |||

int main(int argc, char* argv[]) | int main(int argc, char* argv[]) | ||

{ | { | ||

#pragma omp parallel | #pragma omp parallel | ||

| − | printf(" | + | { |

| + | printf("Hello World!\n"); | ||

| + | } | ||

return 0; | return 0; | ||

| Line 13: | Line 328: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | interpreted by a normal compiler as comments, these will only come into effect when a specific [[compiler]] (options) is utilized like detailed [[How_to_Use_OpenMP|here]] | + | interpreted by a normal compiler as comments, these will only come into effect when a specific [[compiler]] (options) is utilized like detailed [[How_to_Use_OpenMP|here]]. |

| − | + | Please check the more detailed tutorials in the References. | |

| − | |||

| − | [ | + | == Teaching material == |

| + | === Online Tutorials === | ||

| + | * [[OpenMP_in_Small_Bites|OpenMP in Small Bites]] | ||

| − | [https://doc.itc.rwth-aachen.de/download/attachments/35947076/03_OpenMPNumaSimd.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD] | + | === Workshop slides === |

| + | * [https://doc.itc.rwth-aachen.de/download/attachments/35947076/01_IntroductionToOpenMP.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 1: Introduction] | ||

| + | * [https://doc.itc.rwth-aachen.de/download/attachments/35947076/02_OpenMPTaskingInDepth.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 2: Tasking in Depth] | ||

| + | * [https://doc.itc.rwth-aachen.de/download/attachments/35947076/03_OpenMPNumaSimd.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD] | ||

| + | * [https://doc.itc.rwth-aachen.de/download/attachments/35947076/04_OpenMPSummary.pdf Introduction to OpenMP from PPCES (@RWTH Aachen) Part 4: Summary] | ||

| − | [https:// | + | == Books == |

| + | * [https://mitpress.mit.edu/books/using-openmp R. van der Pas, B. Chapman, G. Jost: “Using OpenMP”, MIT Press. ISBN-10: 0262533022 / ISBN-13: 978-0262533027] | ||

| + | * [https://mitpress.mit.edu/books/using-openmp-next-step R. van der Pas, E. Stotzer and C. Terboven: “Using OpenMP - The Next Step”, MIT Press. ISBN: 9780-262534789] | ||

| + | * [https://www.springer.com/gp/book/9783540731221 S. Hoffmann, R.Lienhart: “OpenMP - Eine Einführung in die parallele Programmierung mit C/C++”, Springer, 2008. ISBN-13 978-3540731238] | ||

| + | * [https://www.openmp.org/specifications/ OpenMP Specifications] | ||

Latest revision as of 13:27, 6 November 2020

OpenMP is an open standard API for Shared Memory parallelization in C, C++ and Fortran which consist of compiler dircetives, runtime routines and environment variables. The first version was published for Fortran in 1997 with the C/C++ standard following a year later. Version 2.0 was available in 2000 for Fortran and C/C++ in 2002, respectively. The version 2.5 from 2005 was the first combined specification for both Fortran and C/C++ . By then the main focus had been on parallelizing highly regular loops, which were considered the main performance hotspot for scientific programs. OpenMP 3.0 introduced the concept of tasking in 2008 while OpenMP 4.0 added support for accelerators in 2013. The current version OpenMP 5.0 was released in November 2018. As the implementation of sophisticated features can be cumbersome, not all achievements must be fully supported by all compilers and OSes.

Information on how to run an existing OpenMP program can be found in the "How to Use OpenMP"-Section.

General

In the OpenMP Shared-Memory Parallel Programming Model all processors/cores access a shared main memory and employ multiple threads.

A program is launched with one single thread as a master thread. When entering a parallel region, worker threads are spawned and form a team with the master. Upon leaving the parallel region these worker threads are typically put to sleep (instead of actual joining) by the OpenMP runtime until the program reaches its next parallel region. This Fork-Join concept allows incremental parallelization: starting from serial code, the hot spots are parallelised one by one (typically still allowing serial compilation and execution). As a result all parallel regions must be defined explicitly. Furthermore there may only be exactly one entry point at the top of the region and exactly one exit point at the bottom and Branching out is not allowed. Termination, however, is allowed. The number of work threads to be spawned is controlled by an internal control variable, which value can be defined explicitely at the entry point. If this is not specified, OpenMP runtime will use the environment variable OMP_NUM_THREADS, and if it is not set choose a number of threads according implementation-defined rules (often: 'use all cores').

OpenMP Constructs

Parallel regions

OpenMP functionalities are added to a program by use of pragmas. For example, the following pragma defines a parallel region with 4 threads.

C/C++

#pragma omp parallel num_threads(4)

{

...

structured block

...

}

|

Fortran

!$omp parallel num_threads(4)

...

structured block

...

!$omp end parallel

|

For Worksharing

In the above program all threads would execute the entire structured block in parallel. Obviously, this does not result in a speedup of the program unless worksharing is applied. The most commonly used OpenMP Worksharing construct is for, which allows splitting up loops and distributing the work over all threads.

C/C++

int i;

#pragma omp parallel num_threads(4)

{

#pragma omp for

for (i = 0; i < 100; i++)

{

a[i] = b[i] + c[i];

}

}

|

Fortran

INTEGER :: i

!$omp parallel num_threads(4)

!$omp do

DO i = 0, 99

a[i] = b[i] + c[i]

END DO

!$omp end parallel

|

In the above example with 100 iterations and 4 threads the iterations would be split into 4 equally sized chunks (0,24), (25,49), (50,74) and (75,99). Other distributions can be specified by using the schedule clause:

schedule(static [, chunk]): Iteration space divided in to blocks of size chunk and assigned to threads in a round-robin fashion. (default #threads blocks)schedule(dynamic [, chunk]): Iteration space split into blocks of size chunk (default 1) and assigned to threads in the order in which they finish previous blocksschedule(guided, [, chunk]): similar to dynamic, but block size decreases exponentially from an implementation-defined value to chunk

However, not all loops can be parallelized this way. In order to split the workload as shown there must not be any dependencies between the iterations, i.e. no thread may write to a memory location that another thread reads from. If in doubt, check if a backward exectuion of the loop would have the same result. Pleas note that this simple test is only a necessary condition that works for most cases, but not a sufficient condition!

Data Scoping

In OpenMP, variables are divided into shared and private. This can be set when creating a parallel region or for-construct. The default for parallel regions is shared for all already-existing variables while all variables created within a parallel region are private. Static variables are also shared. The following options are available:

shared(VariableList): one shared instance that can be read from and written to by all threadsprivate(VariableList): a new uninitialized instance is created for each threadfirstprivate(VariableList): a new instance is created for each thread and initialized with the Master's valuelastprivate(VariableList): a new uninitialized instance is created for each thread and the value of the last loop iteration is written back to the Master

Global/static variables can also be privatized by using #pragma omp threadprivate(VariableList) or !$omp threadprivate(VariableList). This creates an instance for each thread before the first parallel region is reached which will exist until the program finishes. Please note that this does not work too well with nested parallel regions, so it is recommended to avoid using threadprivate and static variables.

Synchronization

The utilization of multiple threads requires a certain amount of synchronization to maintain correctness. OpenMP offers the Barrier construct to ensure all threads work in the same section of the program. Implicit or explicit barriers can be set with #pragma omp barrier. Additionally, all Workshare constructs provided by OpenMP contain an implicit barrier at the end.

Options such as critical sections should be treated with caution when using OpenMP, as they may strongly decrease the programs speedup and scaling by forcing all threads to run almost serial. If there is a data dependency between iterations of a for-loop where for example the results of all iterations should be summed up and stored in one variable the operation of choice would be the reduction-clause. This creates a private instance of the shared variable that is to receive the final result for each threads which are then combined once all threads have finished. The available reduction values and their initialization values are:

+ (0),* (1),- (0)& (~0),| (0),&& (0),|| (0),^ (0)min (largest number),max (smallest number)

C/C++

int i, s=0;

#pragma omp parallel num_threads(4)

{

#pragma omp for reduction (+:sum)

for (i = 0; i < 100; i++)

{

sum = sum + a[i];

}

}

|

Fortran

INTEGER :: i

INTEGER :: sum

!$omp parallel num_threads(4)

!$omp do reduction(+:sum)

DO i = 0, 99

sum = sum + a[i]

END DO

!$omp end parallel

|

Single and Master

Most programs contain several parts where parallelization is impossible or unnecessary, e.g. I/O or memory allocation/deallocation. However, if these parts are rather small and right in between two larger parallel regions putting the worker threads to sleep and reinstating them may cause a significant overhead. In these cases it is advisable to use the single or master constructs.

The single construct encloses a structured block which may only be executed by exactly one thread of the team while the others wait at the implicit barrier at its end. The runtime decides which thread this will be, e.g. the first that reaches the section.

C/C++

#pragma omp single

...

structured block

...

|

Fortran

!$omp single

...

structured block

...

!$omp end single

|

The master construct encloses a structured block which may only be executed by the master thread. Please notice that the master construct is not a workshare construct and therefore does not contain an implicit barrier at its end.

C/C++

#pragma omp master

...

structured block

...

|

Fortran

!$omp master

...

structured block

...

!$omp end master

|

Tasking

A task is a structured block enclosed by the following pragma which may be executed by any member of the thread team.

C/C++

#pragma omp task

...

structured block

...

|

Fortran

!$omp task

...

structured block

...

!$omp end task

|

Clauses that can be used with the task construct are:

- Data scoping: private(list), firstprivate(list), shared(list), default(shared|none), in_reduction(r-id: list)

- Dependencies: depend(dep-type: list))

- Cutoff Strategies: if(expression), mergeable, final(expression)

- Priorities: priority(priority-value)

- untied (more than one thread may execute this structured block)

Similarly to parallel regions static and global variables are shared for tasks while local variables are private. Variables of orphaned tasks are firstprivate by default, while those of non-orphaned tasks are shared. The cutoff strategies listed above are used to optimize task creation and execution. They may reduce parallelism but also the amount of tasks to finish as tasks with an if-clause which evaluates to false are suspended immediatly (undeferred tasks). Tasks are especially useful for recursive parts with bad Load Balancing but do add some overhead which will become noticable if the tasks become too fine-grained.

A popular example where tasking performs much better than threading is the recursive approach to Fibonacci:

Serial

int main(int argc, char* argv[])

{

...

fib(42)

...

}

int fib(int n)

{

if (n < 2) return n;

int x = fib(n-1);

int y = fib(n-2);

return x+y;

}

|

Notes

|

Parallelized with Tasking

int main(int argc, char* argv[])

{

...

#pragma omp parallel

{

#pragma omp single

{

fib(42)

}

}

...

}

int fib(int n)

{

if (n < 2) return n;

if (n <= 30) return serfib(n);

int x,y;

#pragma omp task shared(x)

{

x = fib(n-1);

}

#pragma omp task shared(y)

{

y = fib(n-2);

}

#pragma omp taskwait

{

return x+y;

}

}

|

Notes

|

Tasks are tied to the thread which executes them first and not their creator. Once tied they may only be executed by the very same thread and can only be suspended at task scheduling points (creation, finish, taskwait, barrier, taskyield). taskyield may be used for optimization and deadlock prevention, enabling the suspension of a task in favor of other tasks. Until suspension or completion of a task the executing thread may only switch to a direct descendant of all the task tied to it. When using the untied-clause, a task may be halted at scheduling points and later resumed by the same or another thread. While this grants a lot of opportunities it also enables a lot of interesting and unexpected results. Once again, one should especially avoid the use of threadprivate and also the use of thread-ids. Critical regions and locks can also cause plenty of problems. If in doubt, a safer alternative would be using #pragma omp task if(0).

Examples for OpenMP programs

Hello World:

#include <stdio.h>

int main(int argc, char* argv[])

{

#pragma omp parallel

{

printf("Hello World!\n");

}

return 0;

}

interpreted by a normal compiler as comments, these will only come into effect when a specific compiler (options) is utilized like detailed here.

Please check the more detailed tutorials in the References.

Teaching material

Online Tutorials

Workshop slides

- Introduction to OpenMP from PPCES (@RWTH Aachen) Part 1: Introduction

- Introduction to OpenMP from PPCES (@RWTH Aachen) Part 2: Tasking in Depth

- Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD

- Introduction to OpenMP from PPCES (@RWTH Aachen) Part 4: Summary

Books

- R. van der Pas, B. Chapman, G. Jost: “Using OpenMP”, MIT Press. ISBN-10: 0262533022 / ISBN-13: 978-0262533027

- R. van der Pas, E. Stotzer and C. Terboven: “Using OpenMP - The Next Step”, MIT Press. ISBN: 9780-262534789

- S. Hoffmann, R.Lienhart: “OpenMP - Eine Einführung in die parallele Programmierung mit C/C++”, Springer, 2008. ISBN-13 978-3540731238

- OpenMP Specifications