Difference between revisions of "Scheduling Basics"


Revision as of 15:26, 29 March 2018

General

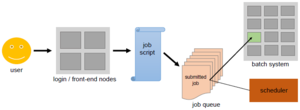

A scheduler is software that controls a batch system. The batch system makes up the majority of a cluster (about 98%) and is its most powerful and most power-consuming part. This is where the big applications are intended to run, that is, computations that require a lot of memory and time.

The batch system can be viewed as the counterpart of the login nodes. A user can simply log onto these so-called "front-end" nodes and type in commands that are executed immediately on the same machine. A batch system, however, consists of "back-end" nodes that are not directly accessible to users. To run an application there, the user has to request time and memory resources and specify which application to run in a jobscript.

This jobscript is then submitted to the batch system, that is, sent from the front-end nodes to the back-end nodes. Every job is first added to a queue. Based on the resources the job needs, the scheduler decides when the job leaves the queue and on which of the back-end nodes it runs.

Be careful about the resources you request and know your system's limits: if you request less time than your job actually needs to finish, the scheduler will simply kill the job once the requested time is up. If you request more memory than is actually available, your job might be stuck in the queue forever.
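The two failure modes above can be sketched in a few lines of Python. This is only an illustration, not how any particular scheduler is implemented: the `Request` class, the `predict_outcome` function, and the idea of a single `max_node_memory_gb` limit are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Resources asked for in a (hypothetical) jobscript."""
    walltime_min: int  # requested time limit in minutes
    memory_gb: int     # requested memory in gigabytes


def predict_outcome(request, actual_runtime_min, max_node_memory_gb):
    """Roughly predict what happens to a job with the given request."""
    # More memory than any node offers: the job can never be placed.
    if request.memory_gb > max_node_memory_gb:
        return "never starts"
    # The job needs longer than requested: it is killed at the limit.
    if actual_runtime_min > request.walltime_min:
        return "killed at time limit"
    return "completes"


if __name__ == "__main__":
    # A job that needs 90 minutes but only asked for 60.
    print(predict_outcome(Request(60, 16), 90, max_node_memory_gb=64))
    # A job that asks for more memory than the system can provide.
    print(predict_outcome(Request(60, 128), 30, max_node_memory_gb=64))
```

In practice, the real limits depend on the cluster, so check your site's documentation before choosing the values in your jobscript.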

Purpose

Generally speaking, every scheduler has three main goals:

- minimize the time between job submission and job completion: no job should stay in the queue for an extended period of time

- optimize CPU utilization: the CPUs of the supercomputer are one of the core resources for a big application; therefore, there should be only a few time slots in which a CPU is idle

- maximize the job throughput: manage as many jobs per time unit as possible
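To make the three goals concrete, the following Python sketch computes one possible metric for each of them from a list of finished jobs. The `FinishedJob` record, the `schedule_metrics` function, and the exact definitions (mean turnaround time, fraction of core-time in use, jobs per time unit) are assumptions chosen for illustration; real schedulers may measure these quantities differently.

```python
from dataclasses import dataclass


@dataclass
class FinishedJob:
    submit: float  # time the job entered the queue
    start: float   # time the job started running
    end: float     # time the job finished
    cores: int     # number of CPU cores the job occupied


def schedule_metrics(jobs, total_cores):
    """Return (mean turnaround, CPU utilization, throughput)."""
    # Observation window: from the first submission to the last finish.
    span = max(j.end for j in jobs) - min(j.submit for j in jobs)
    # Goal 1: time between submission and completion, averaged.
    mean_turnaround = sum(j.end - j.submit for j in jobs) / len(jobs)
    # Goal 2: share of the available core-time that was actually used.
    busy_core_time = sum(j.cores * (j.end - j.start) for j in jobs)
    utilization = busy_core_time / (total_cores * span)
    # Goal 3: finished jobs per time unit.
    throughput = len(jobs) / span
    return mean_turnaround, utilization, throughput


if __name__ == "__main__":
    jobs = [FinishedJob(0, 0, 4, 2), FinishedJob(0, 4, 8, 4)]
    print(schedule_metrics(jobs, total_cores=4))
```

In this small example the first job leaves two of the four cores idle for four time units, which is why the utilization stays below 1.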

Illustration

Scheduling Algorithms

There are two very basic strategies that schedulers can use to determine which job to run next. Note that modern schedulers do not stick strictly to just one of these algorithms, but rather employ a combination of the two. In addition, a scheduler has to take many more aspects into consideration, e.g. the current system load.

First Come, First Serve

Jobs are run in exactly the order in which they entered the queue. The advantage is that every job will definitely be run. However, very small jobs might wait for a disproportionately long time compared to their actual execution time.
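The drawback for small jobs can be seen in a minimal simulation. The sketch below assumes a strongly simplified system in which all jobs are already in the queue and only one job runs at a time; the function name `fcfs_wait_times` and the job names are made up for this example.

```python
def fcfs_wait_times(jobs):
    """Compute how long each job waits under first come, first serve.

    jobs: list of (name, runtime) tuples in the order they entered
    the queue. Returns a dict mapping each name to its waiting time.
    """
    waits = {}
    clock = 0
    for name, runtime in jobs:
        waits[name] = clock  # the job starts once all earlier jobs are done
        clock += runtime
    return waits


if __name__ == "__main__":
    # A 1-minute job that entered the queue right after a 600-minute job.
    queue = [("big", 600), ("tiny", 1)]
    print(fcfs_wait_times(queue))
```

Here the job `tiny` waits 600 minutes for 1 minute of execution, while `big` starts immediately.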

Shortest Job First

Based on the execution time and memory declared in the jobscript, the scheduler can roughly estimate how long it will take to execute the job. Then, the jobs are ranked by that estimated time.
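The ranking step can be sketched in Python as follows. The example assumes that the estimate is already available as a single number per job and that only one job runs at a time; `sjf_order` and `mean_wait` are hypothetical helper names, not part of any scheduler.

```python
def sjf_order(jobs):
    """Rank jobs by estimated runtime, shortest first.

    jobs: list of (name, estimated_runtime) tuples. Jobs with equal
    estimates keep their queue order because sorted() is stable.
    """
    return sorted(jobs, key=lambda job: job[1])


def mean_wait(jobs):
    """Average waiting time if the jobs run one after another."""
    clock = 0
    total_wait = 0
    for _name, runtime in jobs:
        total_wait += clock  # this job waited for all jobs before it
        clock += runtime
    return total_wait / len(jobs)


if __name__ == "__main__":
    queue = [("big", 600), ("medium", 60), ("tiny", 1)]
    print("queue order:   ", mean_wait(queue))
    print("shortest first:", mean_wait(sjf_order(queue)))
```

For this queue, running the jobs in their original order gives a mean wait of 420 minutes, whereas running the shortest job first brings it down to about 21 minutes.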