Difference between revisions of "MPI"

Revision as of 12:38, 27 February 2019

The Message Passing Interface (MPI) is an open standard for distributed memory parallelization. It consists of a library API (Application Programming Interface) specification for C and Fortran. Unofficial language bindings exist for many other programming languages. The first standard document was released in 1994. MPI has become the de facto standard for programming HPC cluster systems and is often the only option available. There are many optimized implementations, both open source and proprietary. The latest version of the standard is MPI 3.1 (released in 2015).

MPI makes it possible to write portable parallel programs for all kinds of parallel systems, from small shared memory nodes to petascale cluster systems. While many criticize its bloated API and complicated function interfaces, no alternative proposal has won a significant share in the HPC application domain so far. Optimized implementations exist for virtually every platform and architecture, along with a wealth of tools and libraries. Common implementations are Open MPI, MPICH and Intel MPI. Because MPI has been available for such a long time and almost every HPC application is implemented using MPI, it is the safest bet for a solution that will be supported and stable on mid- to long-term future systems.

Information on how to run an existing MPI program can be found in the How to Use MPI Section.

API overview

The standard specifies interfaces to the following functionality (list is not complete):

- Point-to-point communication,

- Datatypes,

- Collective operations,

- Process groups,

- Process topologies,

- One-sided communication,

- Parallel file I/O.

While the standard document has 836 pages describing 500+ MPI functions, a working and useful MPI program can be implemented using just a handful of functions. As with other standards, new and uncommon features are often not implemented efficiently in available MPI libraries.

Basic conventions

A process is the smallest worker granularity in MPI. MPI offers a very generic and flexible way to manage subgroups of parallel workers using so-called communicators. A communicator is part of the signature of every MPI communication routine. A predefined communicator called MPI_COMM_WORLD includes all processes within a job. It is possible to create new communicators that contain a subset of the processes. Still, many applications can be implemented using only MPI_COMM_WORLD. Processes are assigned consecutive ranks (integer numbers), and within the program a process can query its own rank and the total number of ranks in a communicator. This information is already sufficient to create work sharing strategies and communication structures. Messages can be sent to another process using its rank, while collective communication (e.g. broadcast) involves all processes in a communicator. MPI follows the multiple program multiple data (MPMD) programming model, which allows separate source codes to be used for the different processes. However, it is common practice to use a single source for an MPI application.

Point-to-point communication

Sending and receiving of messages by processes is the basic MPI communication mechanism. The basic point-to-point communication operations are send and receive.

MPI_Send for sending a message

int MPI_Send (const void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

MPI_Recv for receiving a message

int MPI_Recv (void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status* status)

An MPI message consists of the message data and a message envelope. The message data is specified by a pointer to a memory buffer, the MPI datatype and a count. The count may be zero to indicate that the message buffer is empty. The message envelope consists of the source (implicitly specified by the sender), destination, tag and communicator. The destination is the rank of the receiving process, and the tag is an integer that makes it possible to distinguish different message types.

The functions above (blocking, standard mode in MPI terms) are the simplest example of point-to-point communication. The standard defines two basic flavors of point-to-point communication: blocking and non-blocking. Blocking means that the communication buffer passed as an argument to the communication routine may be reused after the call returns, whereas in the non-blocking case the buffer must not be reused until a completion routine (for example MPI_Wait, or MPI_Test reporting success) has confirmed that the operation is complete. One could say that the non-blocking flavor exposes the potential for asynchronous communication in the API. Blocking and non-blocking sends and receives can be mixed with each other. To complicate things further, the standard introduces different communication modes: standard, buffered, synchronous and ready. Please refer to the references for more details on those topics. While making general recommendations is dangerous, one can say that in many cases using the standard-mode non-blocking variant for point-to-point communication is no mistake.

Collective operations

MPI supports collective communication primitives for cases where all ranks are involved in communication. Collective communication does not interfere in any way with point-to-point communication. All ranks within a communicator have to call the routine. While collective communication routines are often necessary and helpful, they may also introduce scalability problems and should therefore be used with great care.

There exist three types of collective communication:

- Synchronization (barrier)

- Data movement (e.g. gather, scatter, broadcast)

- Collective computation (reduction)

Example for collective communication:

Examples for MPI programs

Hello World with MPI

#include <stdio.h>
#include <mpi.h>

int main(int argc, char **argv)
{
    int num_procs, my_id;

    /* Start the MPI runtime; must come before any other MPI call */
    MPI_Init(&argc, &argv);

    /* Rank of this process and total number of processes in the job */
    MPI_Comm_rank(MPI_COMM_WORLD, &my_id);
    MPI_Comm_size(MPI_COMM_WORLD, &num_procs);

    printf("Hello world! I'm process %i out of %i processes\n",
           my_id, num_procs);

    /* Shut down the MPI runtime */
    MPI_Finalize();
    return 0;
}

Integration example: Blocking P2P communication

Integration example: Non-Blocking P2P communication

Integration example: Collective communication

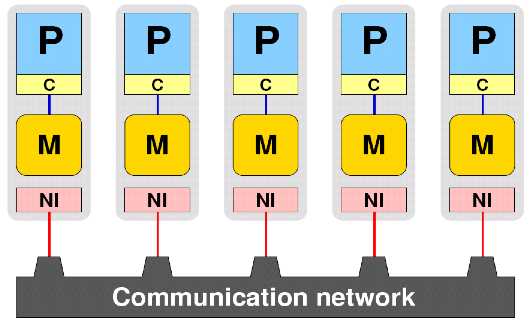

MPI machine model

MPI assumes the following machine model:

The processors P do not share any topological entity with each other and are equal in every respect. They are connected by one uniform network. This basic machine model captures all possible parallel machines. Still, many performance-relevant features of real parallel systems are not represented: cluster-of-SMP structure, shared memory hierarchy, shared network interfaces, network topology. While optimized MPI implementations try to provide the best possible performance for each communication case, the fact that communication between two partners is not equally fast across an MPI communicator cannot be hidden and has to be addressed explicitly by the programmer.

MPI+X

Because a large amount of parallelism usually already exists at the node level, and MPI with a process as its finest granularity adds significant overhead, a common strategy is to mix MPI with some other, more lightweight programming model. For example, for the common case of a cluster with large shared memory nodes it is natural to combine MPI with OpenMP.

Mixing MPI with another programming model always adds significant complexity, both at the implementation level and later when running the application. There must therefore be a good reason for going hybrid. If an MPI code already scales very well, one should not expect to do better with a hybrid code.

Common reasons for going hybrid could be:

- Explicitly overlapping communication with computation

- Improve MPI performance through larger messages

- Reuse of data in shared caches

- Easier load balancing with OpenMP

- Exploit additional levels of parallelism (vector processor codes, parallel structures where MPI overhead is too large for parallelization)

- Improve convergence if you parallelize loop-carried dependencies, e.g. domain decomposition with an implicit solver

Alternatives to MPI

References

Introduction to MPI from PPCES (@RWTH Aachen) Part 1

Introduction to MPI from PPCES (@RWTH Aachen) Part 2

Introduction to MPI from PPCES (@RWTH Aachen) Part 3 (https://doc.itc.rwth-aachen.de/download/attachments/35947076/03_PPCES2018_MPI_Tutorial.pdf)

MPI language standard (https://www.mpi-forum.org/docs/). The standard is not suited for learning MPI but is useful as a reference document containing many source code examples.