Difference between revisions of "OpenMP"

Revision as of 14:39, 5 March 2019

OpenMP is an open standard API for shared-memory parallelization in C, C++ and Fortran. It consists of compiler directives, runtime routines and environment variables. The first version was published for Fortran in 1997, with the C/C++ standard following a year later. In 2000, OpenMP 2.0 was introduced. The first two versions focused on parallelizing highly regular loops, which were considered the main performance limitation for scientific programs. OpenMP 3.0 introduced the concept of tasking in 2008, and OpenMP 4.0 added support for accelerators in 2013. The current version, OpenMP 5.0, was released in November 2018 and is therefore not yet fully supported by all compilers and operating systems.

Information on how to run an existing OpenMP program can be found in the "How to Use OpenMP"-Section.

General

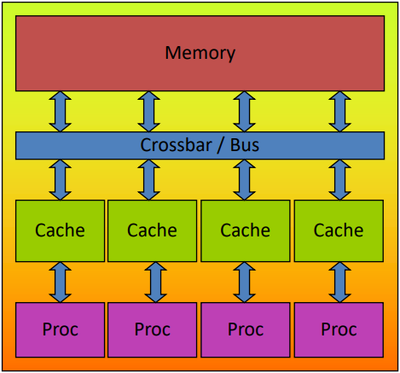

In the OpenMP Shared-Memory Parallel Programming Model all processors/cores access a shared main memory and employ multiple threads.

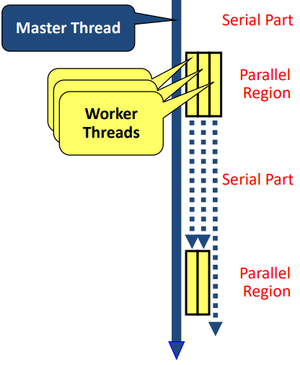

A program is launched with a single thread, the master thread. When it enters a parallel region, worker threads are spawned and form a team with the master. Upon leaving the parallel region, these worker threads are put to sleep by the OpenMP runtime until the program reaches its next parallel region. This concept is known as Fork-Join, and it allows incremental parallelization: because all parallel regions must be defined explicitly, a serial program can be parallelized one region at a time. A parallel region must have exactly one entry point at the top and exactly one exit point at the bottom. Branching out of the region is not allowed; terminating the program from within the region, however, is allowed. The number of threads can be specified at the entry point. If it is not specified, OpenMP uses the value of the environment variable OMP_NUM_THREADS; if that is not set either, the default is implementation-defined.

OpenMP Constructs

Parallel regions

OpenMP functionality is added to a program by means of pragmas (directive comments in Fortran). For example, the following pragma defines a parallel region with 4 threads.

C/C++

#pragma omp parallel num_threads(4)
{
    ...
    structured block
    ...
}

Fortran

!$omp parallel num_threads(4)
...
structured block
...
!$omp end parallel
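
The size of the team that a parallel region actually gets can be checked at run time. The following is a minimal sketch, not a definitive implementation: `team_size` is a hypothetical helper name, while `omp_get_num_threads()` and the `_OPENMP` macro are part of the OpenMP standard. The runtime may grant fewer threads than requested, so the result is only an upper-bounded value.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: returns the number of threads in the team of a
   parallel region. If requested > 0, the num_threads clause is used;
   otherwise the runtime default applies (e.g. from OMP_NUM_THREADS). */
int team_size(int requested)
{
    int size = 1; /* serial fallback when compiled without OpenMP */
#ifdef _OPENMP
    if (requested > 0) {
        #pragma omp parallel num_threads(requested)
        {
            /* only one thread of the team writes the shared variable */
            #pragma omp single
            size = omp_get_num_threads();
        }
    } else {
        #pragma omp parallel
        {
            #pragma omp single
            size = omp_get_num_threads();
        }
    }
#else
    (void)requested;
#endif
    return size;
}
```

Calling `team_size(4)` mirrors the `num_threads(4)` example above, while `team_size(0)` shows the default team size. With GCC, OpenMP is enabled by the `-fopenmp` option; without it the function simply returns 1.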

For Worksharing

In the above program all threads would execute the entire structured block in parallel. This does not speed up the program unless worksharing is applied. The most commonly used OpenMP worksharing construct is for (do in Fortran), which splits up a loop and distributes its iterations over all threads of the team.

C/C++

int i;
#pragma omp parallel num_threads(4)
{
    #pragma omp for
    for (i = 0; i < 100; i++)
    {
        a[i] = b[i] + c[i];
    }
}

Fortran

! assumes a, b and c are declared with bounds 0:99
INTEGER :: i
!$omp parallel num_threads(4)
!$omp do
DO i = 0, 99
    a(i) = b(i) + c(i)   ! Fortran indexes arrays with parentheses
END DO
!$omp end do
!$omp end parallel

In the above example with 100 iterations and 4 threads, the iterations are typically split into 4 equally sized chunks (0,24), (25,49), (50,74) and (75,99). The default schedule is implementation-defined, but most implementations use static. Other distributions can be specified with the schedule clause:

* schedule(static [, chunk]): The iteration space is divided into blocks of size chunk, which are assigned to threads in a round-robin fashion. If chunk is omitted, the iteration space is divided into one block of approximately equal size per thread.
* schedule(dynamic [, chunk]): The iteration space is split into blocks of size chunk (default 1), which are assigned to threads in the order in which they finish previous blocks.
* schedule(guided [, chunk]): Similar to dynamic, but the block size decreases exponentially from an implementation-defined value to chunk.
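
The round-robin assignment of a static schedule with an explicit chunk size can be made visible by recording which thread ran each iteration. This is a sketch under stated assumptions: `record_owners` is a hypothetical helper, `chunk` is assumed to be positive, and the team size of 4 is taken from the example above.

```c
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: stores in owner[i] the id of the thread that
   executed iteration i, and returns the size of the team. */
int record_owners(int *owner, int n, int chunk)
{
    int team = 1; /* shared; stays 1 when compiled without OpenMP */
    int i;

    #pragma omp parallel num_threads(4)
    {
        int id = 0; /* declared inside the region, hence private */
#ifdef _OPENMP
        id = omp_get_thread_num();
        #pragma omp single
        team = omp_get_num_threads();
#endif

        /* blocks of `chunk` iterations are dealt out round-robin */
        #pragma omp for schedule(static, chunk)
        for (i = 0; i < n; i++) {
            owner[i] = id;
        }
    }
    return team;
}
```

With a static schedule and chunk size `chunk`, iteration `i` is executed by thread `(i / chunk) % team`. Replacing `static` with `dynamic` or `guided` makes the assignment depend on run-time timing, so the recorded owners may change from run to run.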

However, not all loops can be parallelized this way. In order to split the workload as shown, there must not be any dependencies between the iterations, i.e. no thread may write to a memory location that another thread reads from or writes to. If in doubt, check whether a backward execution of the loop would yield the same result. Please note that this is only a simple test that works for most cases, but not all!
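
The backward-execution test can be illustrated with a loop that shifts an array by one element. This is an illustrative sketch only; the function names are hypothetical and not taken from the original article.

```c
/* Loop with a dependency between iterations: iteration i reads a[i + 1],
   which iteration i + 1 overwrites. */
void shift_forward(int *a, int n)
{
    for (int i = 0; i < n - 1; i++) {
        a[i] = a[i + 1];
    }
}

/* The same loop body executed backward gives a different result,
   so the loop above must not be split across threads as it is. */
void shift_backward(int *a, int n)
{
    for (int i = n - 2; i >= 0; i--) {
        a[i] = a[i + 1];
    }
}

/* Reading from a separate input array removes the dependency, so the
   iterations can be distributed with the for construct. Assumes n > 0
   and that in and out do not overlap. */
void shift_parallel(const int *in, int *out, int n)
{
    int i;

    #pragma omp parallel
    {
        #pragma omp for
        for (i = 0; i < n - 1; i++) {
            out[i] = in[i + 1];
        }
    }
    out[n - 1] = in[n - 1];
}
```

For the input 0, 1, ..., 7 the forward loop produces 1, 2, ..., 7, 7, whereas the backward loop fills the whole array with 7. The version with separate input and output arrays reproduces the forward result regardless of the order in which the iterations run.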

Another important and frequently used clause is the reduction clause (e.g. reduction(+:sum)). It is used, for example, if the results of all iterations should be summed up and stored in a single shared variable. The reduction clause creates a private copy of the variable for each thread; these copies are combined and stored into the shared variable once all threads have finished.
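
A summation with the reduction clause might look as follows. This is a minimal sketch; `sum_array` is a hypothetical function name chosen for illustration.

```c
/* Hypothetical helper: sums the first n elements of x. Each thread
   accumulates into its own private copy of sum; the copies are added
   to the shared variable at the end of the loop. */
long sum_array(const int *x, int n)
{
    long sum = 0;
    int i;

    #pragma omp parallel
    {
        #pragma omp for reduction(+:sum)
        for (i = 0; i < n; i++) {
            sum += x[i];
        }
    }
    return sum;
}
```

Without the reduction clause, all threads would update the shared variable `sum` concurrently, which is exactly the kind of dependency between iterations described above.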

Data Scoping

In OpenMP, variables are either shared or private. This can be set when creating a parallel region or a for-construct. By default, variables declared before a parallel region are shared, while variables declared inside the region or the for-construct, as well as the loop iteration variable, are private. Static variables are also shared. The following clauses are available:

* shared(VariableList): one shared instance that can be read from and written to by all threads
* private(VariableList): a new uninitialized instance is created for each thread
* firstprivate(VariableList): a new instance is created for each thread and initialized with the Master's value
* lastprivate(VariableList): a new uninitialized instance is created for each thread and the value of the last loop iteration is written back to the Master
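
The clauses listed above can be combined in one small function. The sketch below is illustrative only: `last_square_plus` is a hypothetical name, and `n > 0` is assumed, because a lastprivate variable has an unspecified value if the loop runs no iterations.

```c
/* Hypothetical helper: returns (n - 1) * (n - 1) + offset for n > 0. */
int last_square_plus(int offset, int n)
{
    int last = 0; /* receives the value of the last iteration */
    int tmp = 0;  /* scratch variable, one instance per thread */
    int i;

    /* offset: each thread gets a copy initialized with the caller's value
       tmp:    each thread gets its own uninitialized instance */
    #pragma omp parallel firstprivate(offset) private(tmp)
    {
        /* last: the value from iteration n - 1 is written back */
        #pragma omp for lastprivate(last)
        for (i = 0; i < n; i++) {
            tmp = i * i;
            last = tmp + offset;
        }
    }
    return last;
}
```

If `tmp` were left shared, several threads would write to it at the same time and `last` could be computed from another thread's value.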

Global/static variables can also be privatized by using #pragma omp threadprivate(VariableList) or !$omp threadprivate(VariableList). This creates an instance for each thread before the first parallel region is reached which will exist until the program finishes. Please note that this does not work too well with nested parallel regions, so it is recommended to avoid using threadprivate and static variables.
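
For completeness, a threadprivate variable might be used as in the following sketch. The names `counter` and `count_iterations` are hypothetical, and the example uses only a single, non-nested parallel region.

```c
/* One instance of counter exists per thread and lives until the
   program finishes. */
static int counter = 0;
#pragma omp threadprivate(counter)

/* Hypothetical helper: counts n loop iterations with per-thread counters
   and combines them with a reduction on the parallel region. */
int count_iterations(int n)
{
    int total = 0;
    int i;

    #pragma omp parallel reduction(+:total)
    {
        counter = 0; /* each thread resets its own instance */

        #pragma omp for
        for (i = 0; i < n; i++) {
            counter++;
        }

        total += counter; /* per-thread results are summed at the end */
    }
    return total;
}
```

A variable declared inside the parallel region would achieve the same result here without threadprivate, in line with the recommendation above.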

Synchronization

Examples for OpenMP programs

OpenMP programming is mainly done with pragmas:

#include <stdio.h>

int main(int argc, char* argv[])
{
    #pragma omp parallel
    {
        printf("Hello World!\n");
    }
    return 0;
}

Compilers without OpenMP support ignore these pragmas (in Fortran, the directives are treated as comments). They only take effect when an OpenMP-capable compiler is used with the appropriate options, as detailed here.

Please check the more detailed tutorials in the References.

Materials

Introduction to OpenMP from PPCES (@RWTH Aachen) Part 1: Introduction (https://doc.itc.rwth-aachen.de/download/attachments/35947076/01_IntroductionToOpenMP.pdf)

Introduction to OpenMP from PPCES (@RWTH Aachen) Part 2: Tasking in Depth

Introduction to OpenMP from PPCES (@RWTH Aachen) Part 3: NUMA & SIMD

Introduction to OpenMP from PPCES (@RWTH Aachen) Part 4: Summary