Difference between revisions of "Computer architecture for software developers"

Revision as of 14:41, 2 July 2019

Introduction

Modern computers are still built following the "Stored Program Computing" design. The idea of the stored program computer originated during the Second World War, and the first working systems were built in the late forties. Several earlier innovations enabled this machine: the idea of a universal Turing machine (1936), Claude E. Shannon's master's thesis on using arrangements of relays to solve Boolean algebra problems (1937), and two patent applications by Konrad Zuse, who anticipated that machine instructions could be stored in the same storage used for data (1936).

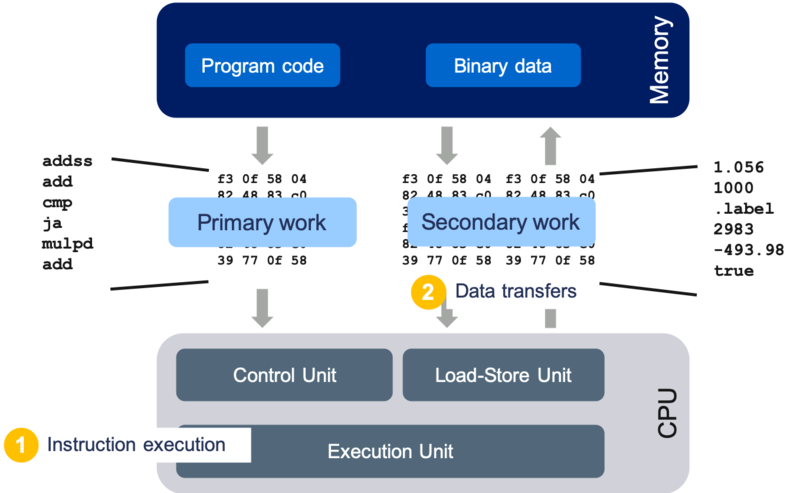

A stored program computer is controlled by instructions. The set of instructions a computer supports is called its instruction set architecture (ISA). Instructions are elementary operations such as adding two numbers, loading data from memory, or jumping to another location in the program code. Instructions are just integer numbers and are stored in a unified memory that also holds the actual data used by a program. A program consists of a sequential stream of instructions that are loaded from memory and control the hardware. The primary resource (or work) of a computer is therefore instruction execution. Some of those instructions load data from memory or store data to it. Data transfer to and from memory is therefore a secondary resource of a computer, triggered by instruction execution.

This design was so successful because it can be applied generically to any problem and because it allows switching very quickly between different applications. Everything in a computer is built from switches, and since a switch knows only two states, all numbers are represented in binary format. The operation of a computer is strictly clocked and runs at a certain frequency. The generic nature of a programmable machine, together with extreme miniaturisation of the hardware, enabled the application of computers in all aspects of our lives.
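The stored program idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a real ISA: the instruction encoding (`opcode * 100 + address`), the opcode numbers, the single accumulator register, and the names `run`, `LOAD`, `ADD`, `STORE`, `JUMP`, and `HALT` are all invented for illustration. What it shows is that instructions are plain integers living in the same memory as the data, and that loads and stores happen only as a side effect of executing instructions.

```python
# Toy stored program machine (hypothetical encoding, for illustration only).
# Every instruction is one integer: opcode * 100 + memory address.
HALT, LOAD, ADD, STORE, JUMP = 0, 1, 2, 3, 4


def run(memory):
    """Execute the program in `memory` from address 0; return instructions executed."""
    acc = 0        # accumulator: the only register of this toy machine
    pc = 0         # program counter: address of the next instruction
    executed = 0   # primary work of the machine: instructions executed
    while True:
        instruction = memory[pc]                    # fetch (just an integer)
        opcode, address = divmod(instruction, 100)  # decode
        pc += 1
        executed += 1
        if opcode == HALT:
            return executed
        elif opcode == LOAD:      # data transfer: memory -> register
            acc = memory[address]
        elif opcode == ADD:       # arithmetic with a memory operand
            acc += memory[address]
        elif opcode == STORE:     # data transfer: register -> memory
            memory[address] = acc
        elif opcode == JUMP:      # continue at another program location
            pc = address
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")


# Program (addresses 0-3) and data (addresses 10-12) share one memory.
memory = [
    110,  # 0: LOAD  10
    211,  # 1: ADD   11
    312,  # 2: STORE 12
    0,    # 3: HALT
    0, 0, 0, 0, 0, 0,  # 4-9: unused
    20,   # 10: first operand
    22,   # 11: second operand
    0,    # 12: result is written here
]
steps = run(memory)
print(memory[12], steps)  # prints "42 4"
```

Because program and data are indistinguishable in memory, loading a different list of integers turns the same machine into a different application, which is the flexibility described above.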

Illustration of Stored Program Computers:

The generic nature of stored program computers is also their biggest problem when it comes to speeding up the execution of program code. Because program code can be any mix of the available instructions, the properties of software and the way it interacts with the hardware can differ vastly. Computer architects have chosen a pragmatic approach to speed up execution: "Make the common case fast". They analyse the software that runs on a computer and come up with improvements for this class of software. This also means that every hardware improvement makes assumptions about the software.
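Why "Make the common case fast" pays off can be illustrated with a small calculation. The sketch below uses Amdahl's law, which the text above does not name; it is brought in here only as a standard way to quantify the principle. The function name `overall_speedup` and the example numbers are made up for illustration.

```python
def overall_speedup(fraction, factor):
    """Overall speedup by Amdahl's law.

    fraction: share of the original run time that benefits (0.0 to 1.0)
    factor:   how much faster that share becomes (> 0)
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if factor <= 0:
        raise ValueError("factor must be positive")
    # The untouched part keeps its run time; the improved part shrinks by `factor`.
    return 1.0 / ((1.0 - fraction) + fraction / factor)


# A modest 2x improvement of the common case (90 % of the run time) ...
common = overall_speedup(0.9, 2.0)
# ... beats a 10x improvement of a rare case (10 % of the run time).
rare = overall_speedup(0.1, 10.0)
print(f"common case: {common:.2f}x, rare case: {rare:.2f}x")
```

With these assumed numbers the common-case improvement gives roughly 1.8x overall, while the much larger rare-case improvement gives only about 1.1x. The flip side is the one noted above: software that does not match the assumed common case sees little benefit.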

In the following, computer architecture is explained at a level that is relevant for software developers who want to understand how their software interacts with the hardware.

Core architecture

Instruction level parallelism

Memory hierarchy

Simultaneous multithreading

Data parallel execution units (SIMD)

Putting it all together

Node architecture

Multicore chips

Cache-coherent non-uniform memory architecture (ccNUMA)

Node topology

System architecture

Links and further information

- John Hennessy, David Patterson: [Computer Architecture: A Quantitative Approach](https://www.elsevier.com/books/computer-architecture/hennessy/978-0-12-811905-1). Still the standard textbook on computer architecture.