Background Performance Monitoring Considerations

Components

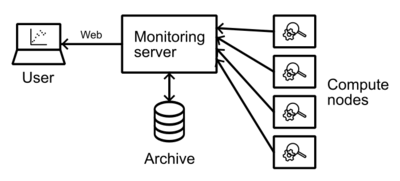

In general, background job Performance Monitoring requires a central data-collection server that receives the measured data from collector services running on each compute node. The central server processes the data, aggregates values, serves plots to users and archives the data in the monitoring data storage.

Depending on the monitoring software, either the central server runs multiple components itself or the components are distributed across multiple servers. Usually, a database component manages the job metadata. The measurement data itself may be stored as files or managed by a time-series database component.
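To make the division of labour concrete, here is a minimal in-memory sketch of the central server's role, assuming a hypothetical `Sample` message format and a hypothetical `CentralServer` class (neither belongs to any real monitoring product). Collectors push samples, job metadata is kept separately from the time series, and the server aggregates values on request.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Sample:
    """One measured value pushed by a collector (hypothetical message format)."""
    job_id: str
    node: str
    metric: str
    timestamp: float
    value: float


@dataclass
class CentralServer:
    """Toy central server keeping job metadata and time series in memory.

    A real deployment would back `jobs` with a database component and
    `series` with files or a time-series database.
    """
    jobs: dict = field(default_factory=dict)
    series: dict = field(default_factory=lambda: defaultdict(list))

    def register_job(self, job_id, **metadata):
        # Job metadata (user, node list, job script, ...) -> database component.
        self.jobs[job_id] = metadata

    def receive(self, sample):
        # Called for every sample a collector sends from a compute node.
        key = (sample.job_id, sample.node, sample.metric)
        self.series[key].append((sample.timestamp, sample.value))

    def job_average(self, job_id, metric):
        # Aggregate one metric over all nodes and timestamps of a job.
        values = [
            value
            for (job, _node, name), points in self.series.items()
            if job == job_id and name == metric
            for _ts, value in points
        ]
        return mean(values) if values else None


server = CentralServer()
server.register_job("42", user="alice", nodes=["n1", "n2"])
server.receive(Sample("42", "n1", "cpu_load", 0.0, 1.0))
server.receive(Sample("42", "n2", "cpu_load", 0.0, 3.0))
print(server.job_average("42", "cpu_load"))  # 2.0
```

Keeping metadata and measurement series in separate stores mirrors the split between the database component and the time-series or file storage described above.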

Sampling interval

Selecting the sampling interval is a trade-off between data resolution and measurement overhead. Since the monitoring runs as software on the compute nodes, it affects performance during job execution and energy consumption during idle phases (executing the collector prevents CPUs from entering idle states). Furthermore, the metrics cannot be measured at exactly the same time; they are measured one after another. Some metrics may also have an internal minimum update interval and return the same value when sampled more frequently than that. For a detailed performance analysis, a job can be rerun with explicit Performance profiling, whereas background job monitoring is useful for rough insights and a coarse overview.
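The two measurement effects mentioned above, sequential reads and metrics that do not update at the sampling rate, can be illustrated with a small sketch. The function names and the metric readers are hypothetical stand-ins, not part of any monitoring tool; a fake clock keeps the example deterministic.

```python
import time


def sample_once(readers, clock=time.monotonic):
    """Read every metric once, one after another, as a collector loop would.

    `readers` maps metric names to zero-argument functions; they are
    hypothetical stand-ins for reading /proc files, hardware counters, etc.
    Each value gets its own timestamp because the reads are not simultaneous.
    """
    results = {}
    for name, read in readers.items():
        results[name] = (clock(), read())
    return results


def read_skew(results):
    """Time between the first and the last read within one sampling round."""
    stamps = [ts for ts, _value in results.values()]
    return max(stamps) - min(stamps)


def stale_fraction(values):
    """Fraction of consecutive samples that repeat the previous value.

    A high fraction suggests that the metric's internal update interval is
    longer than the chosen sampling interval.
    """
    if len(values) < 2:
        return 0.0
    repeats = sum(1 for a, b in zip(values, values[1:]) if a == b)
    return repeats / (len(values) - 1)


# Fake clock so the example is deterministic.
ticks = iter([0.0, 0.002, 0.005])
res = sample_once(
    {"load": lambda: 1.5, "mem_used": lambda: 42, "power": lambda: 180},
    clock=lambda: next(ticks),
)
print(read_skew(res))                             # 0.005
print(stale_fraction([180, 180, 180, 195, 195]))  # 0.75
```

A consistently high stale fraction for a metric is a hint that a shorter sampling interval only adds overhead and storage without adding resolution.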

The sampling interval is also an important factor for the storage considerations discussed below.

Performance metrics and storage considerations

For the monitoring, a set of Performance metrics has to be selected. Per node, these metrics yield a number of sampled values per interval. This number depends on the node's hardware and on the level of each metric (core, socket or node level). For example, a metric such as CPU time can be recorded per core or aggregated into one value for the complete node. As a result, the number of sampled values depends on the job length, sampling interval, metric selection and hardware specifics (number of cores, sockets, accelerators).
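The dependency just described can be written as a small formula: number of intervals × number of nodes × values per node, where the values per node sum, over all levels, the metrics at that level times the units of that level per node. The sketch below uses a hypothetical helper `job_samples` and the hardware from the example calculation further down (2 sockets, 128 cores per node).

```python
def job_samples(duration_s, interval_s, nodes, metrics_per_level, units_per_node):
    """Number of sampled values a job produces over its whole runtime.

    metrics_per_level: how many metrics are recorded at each level,
                       e.g. {"node": 11, "socket": 6, "core": 5}
    units_per_node:    how many values one metric yields per node at that
                       level, e.g. {"node": 1, "socket": 2, "core": 128}
    """
    intervals = duration_s // interval_s
    values_per_node = sum(
        count * units_per_node[level] for level, count in metrics_per_level.items()
    )
    return intervals * nodes * values_per_node


hardware = {"node": 1, "socket": 2, "core": 128}

# 2-hour job on 4 nodes, 30 s interval, 5 metrics recorded per core ...
per_core = job_samples(7200, 30, 4, {"node": 11, "socket": 6, "core": 5}, hardware)
# ... versus the same 5 metrics aggregated to one value per node.
per_node = job_samples(7200, 30, 4, {"node": 16, "socket": 6}, hardware)
print(per_core, per_node)  # 636480 26880
```

Aggregating the core-level metrics to node level reduces this hypothetical job's sample count by a factor of roughly 24, which is why the metric level is such an effective tuning parameter.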

The hardware specifics, such as the number of nodes and the number of cores and accelerators per node, are fixed for a given cluster. Therefore, the sampling interval, the selection of metrics and the metric levels are the parameters available to tune the storage requirements of the monitoring system. If the monitoring software supports it, cut-offs in the monitoring configuration can also be considered, for example archiving data only for jobs that run longer than a minimum time, or storing only aggregated values for jobs that use more than a certain number of nodes. Compressing archival data can also save a lot of storage space.
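The cut-off policy and compression mentioned above could look roughly like the following sketch. The thresholds `MIN_ARCHIVE_RUNTIME_S` and `MAX_FULL_DETAIL_NODES` and the function names are hypothetical; real monitoring software exposes its own configuration options, if any. Compression here uses Python's standard `zlib` module on a JSON-serialized series.

```python
import json
import zlib

# Hypothetical thresholds; tune to the cluster and the monitoring software.
MIN_ARCHIVE_RUNTIME_S = 120
MAX_FULL_DETAIL_NODES = 64


def archive_mode(runtime_s, num_nodes):
    """Decide how much of a finished job's monitoring data to keep."""
    if runtime_s < MIN_ARCHIVE_RUNTIME_S:
        return "skip"        # too short to be worth archiving
    if num_nodes > MAX_FULL_DETAIL_NODES:
        return "aggregated"  # keep only aggregated values
    return "full"


def compress_series(values):
    """Serialize a metric series as JSON and compress it with zlib."""
    return zlib.compress(json.dumps(values).encode("utf-8"), level=9)


print(archive_mode(60, 4))      # skip
print(archive_mode(3600, 128))  # aggregated
print(archive_mode(3600, 4))    # full

series = [0.0] * 1000 + [1.0] * 1000  # a slowly changing metric
raw = json.dumps(series).encode("utf-8")
packed = compress_series(series)
print(len(raw), len(packed))
```

Monitoring series often contain long runs of identical or similar values, which is why general-purpose compression tends to work well on archived data; the actual ratio depends on the data.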

As the number of cores per node increases, core-level metrics have become the dominant factor in the number of samples per node. Example calculation:

- 1000 nodes, each with 2 sockets and 128 cores

- 30 seconds sampling interval

- 5 core-level metrics (128 values per node and interval)

- 11 node-level metrics (11 values per node and interval)

- 6 socket-level metrics (2 values per node and interval)

The number of samples for one hour of measurement across all nodes is dominated by the core-level metrics:

((60 * 60)/30) * (1'000*11 + 2'000*6 + 128'000*5) = 1'320'000 + 1'440'000 + 76'800'000 = 79'560'000 samples
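The example calculation can be reproduced, with a per-level breakdown, by the following sketch; `hourly_samples` is a hypothetical helper and its arguments are taken directly from the list above.

```python
def hourly_samples(nodes, sockets_per_node, cores_per_node, interval_s,
                   node_metrics, socket_metrics, core_metrics):
    """Sampled values per hour for the whole cluster, split by metric level."""
    intervals = 3600 // interval_s
    per_level = {
        "node": intervals * nodes * node_metrics,
        "socket": intervals * nodes * sockets_per_node * socket_metrics,
        "core": intervals * nodes * cores_per_node * core_metrics,
    }
    return per_level, sum(per_level.values())


per_level, total = hourly_samples(1000, 2, 128, 30, 11, 6, 5)
for level, count in per_level.items():
    print(f"{level:>6}: {count:>11,} ({count / total:.1%})")
print(f" total: {total:>11,}")
```

With the values from the list, the core level accounts for roughly 96.5 % of the 79'560'000 samples per hour, so reducing or aggregating core-level metrics has by far the largest effect on storage.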

Metadata may contain longer text, such as job scripts and environment variable values, but its volume is usually far smaller than that of the measurement data and does not scale with the job duration.