Difference between revisions of "Benchmarking & Scaling Tutorial/Automated Benchmarking"

m (Setting 'next' link to JUBE page) |

|||

| (3 intermediate revisions by one other user not shown) | |||

| Line 3: | Line 3: | ||

__TOC__ | __TOC__ | ||

| − | == | + | == Writing a Bash script == |

While still being logged in at our interactive session on the compute node, we can start writing a Bash script to automate running simulations with different amounts of cores. After all we want to know how our simulation scales and determine the speedup. | While still being logged in at our interactive session on the compute node, we can start writing a Bash script to automate running simulations with different amounts of cores. After all we want to know how our simulation scales and determine the speedup. | ||

| Line 41: | Line 41: | ||

| − | We also want to write out the used number of cores and the measured time for each run to the console (stdout) using a <code>printf</code> statement. In addition we add a little header to know want the numbers actually mean | + | We also want to write out the used number of cores and the measured time for each run to the console (stdout) using a <code>printf</code> statement. In addition we add a little header before the loop to know want the numbers actually mean |

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

| Line 67: | Line 67: | ||

echo "# Cores Time/s" | echo "# Cores Time/s" | ||

for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do | for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do | ||

| − | for i in $(seq 5); | + | for i in $(seq 5); do |

START=$(date +%s.%N) | START=$(date +%s.%N) | ||

srun --cpu-bind=threads -n $P $GMX > gromacs.log 2>&1 | srun --cpu-bind=threads -n $P $GMX > gromacs.log 2>&1 | ||

| Line 79: | Line 79: | ||

| + | We can save this script in a file called e.g. <code>job.sh</code> and run it via the bash command. To see the output in the terminal and save it to file at the same time we pipe it to the <code>tee</code> command | ||

| + | |||

| + | bash job.sh | tee timings.dat | ||

| + | |||

| + | The output will look similar to this | ||

| + | |||

| + | [[File:Benchmark_tutorial_gromacs_5.png|600px]] | ||

| + | |||

| + | |||

| + | == Transform to a job script == | ||

| + | |||

| + | We can easily transform this into a SLURM job script by adding the necessary <code>SBATCH</code> pragmas. Here we also show you the job script to only use ''physical'' cores instead of all threads per core by using <code>--cpu-bind=cores</code> and reducing the total amount of cores to 36. Note that we still request ''all logical cores'' to receive a full node. Adjust the <code>SBATCH</code> pragmas for your site! | ||

| + | |||

| + | <syntaxhighlight lang="bash"> | ||

| + | #!/bin/bash | ||

| + | |||

| + | #SBATCH --nodes=1 | ||

| + | #SBATCH --ntasks-per-node=72 | ||

| + | #SBATCH --partition=express | ||

| + | #SBATCH --time=01:00:00 | ||

| + | #SBATCH --job-name=benchmark | ||

| + | #SBATCH --output=benchmark_results_noHT.out | ||

| + | |||

| + | # Load all needed modules (adjust to your specific site!) | ||

| + | module load GROMACS | ||

| + | |||

| + | GMX="gmx_mpi -quiet mdrun -deffnm MD_5NM_WATER -nsteps 10000 -ntomp 1 -pin on " | ||

| + | |||

| + | echo "# Cores Time/s" | ||

| + | for P in 36 30 24 18 12 8 4 2 1; do | ||

| + | for i in $(seq 5); do | ||

| + | START=$(date +%s.%N) | ||

| + | srun --cpu-bind=core -n $P $GMX > gromacs.log 2>&1 | ||

| + | END=$(date +%s.%N) | ||

| + | RUNTIME=$(echo "$END - $START" | bc -l) | ||

| + | printf "%3d %5.2f\n" $P $RUNTIME | ||

| + | done | ||

| + | done | ||

| + | |||

| + | </syntaxhighlight> | ||

---- | ---- | ||

| − | '''Next''': [[Benchmarking_%26_Scaling_Tutorial/ | + | '''Next''': [[Benchmarking_%26_Scaling_Tutorial/Automated_Benchmarking_JUBE | Automated Benchmarking using JUBE ]] |

'''Previous''': [[Benchmarking_%26_Scaling_Tutorial/Manual_Benchmarking | Interactive Manual Benchmarking ]] | '''Previous''': [[Benchmarking_%26_Scaling_Tutorial/Manual_Benchmarking | Interactive Manual Benchmarking ]] | ||

Latest revision as of 15:39, 17 November 2022

| Tutorial | |

|---|---|

| Title: | Benchmarking & Scaling |

| Provider: | HPC.NRW

|

| Contact: | tutorials@hpc.nrw |

| Type: | Online |

| Topic Area: | Performance Analysis |

| License: | CC-BY-SA |

| Syllabus

| |

| 1. Introduction & Theory | |

| 2. Interactive Manual Benchmarking | |

| 3. Automated Benchmarking using a Job Script | |

| 4. Automated Benchmarking using JUBE | |

| 5. Plotting & Interpreting Results | |

Writing a Bash script

While still being logged in at our interactive session on the compute node, we can start writing a Bash script to automate running simulations with different amounts of cores. After all we want to know how our simulation scales and determine the speedup.

We will start by loading all needed modules and defining a variable GMX to hold our complete GROMACS command

#!/bin/bash

# Load all needed modules (adjust to your specific site!)

module load GROMACS

GMX="gmx_mpi -quiet mdrun -deffnm MD_5NM_WATER -nsteps 10000 -ntomp 1 -pin on "

Next we will create a loop over different amounts of cores our simulation should run with. It is advisable to start with the highest core count first, as these take the least amount of time. Which core counts you want to test depends on the system you are running on. Make sure to include the serial case (1 core) and the full node. In the first example we will use all logical cores (i.e. use hyperthreading). It is also a good idea to test a full socket, in this case 36 cores. We will also tell srun to bind processes to threads to make sure we are actually using all threads of a physical core even with lower core counts.

for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do

srun --cpu-bind=threads -n $P $GMX

done

We could now run this and collect the performance metrics GROMACS itself provides, however this will neglect the time to spawn all processes. Therefore we will time the runtime ourselves, which should also be useful for codes that do not provide any metrics directly. To do this we will use the builtin date function before and after the simulation and calculate the time in between. In addition we will redirect the output of GROMACS to a file. Note that GROMACS writes to stderr so we have to redirect it using 2>&1

for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do

START=$(date +%s.%N)

srun --cpu-bind=threads -n $P $GMX > gromacs.log 2>&1

END=$(date +%s.%N)

RUNTIME=$(echo "$END - $START" | bc -l)

done

We also want to write out the used number of cores and the measured time for each run to the console (stdout) using a printf statement. In addition we add a little header before the loop to know want the numbers actually mean

echo "# Cores Time/s"

for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do

START=$(date +%s.%N)

srun --cpu-bind=threads -n $P $GMX > gromacs.log 2>&1

END=$(date +%s.%N)

RUNTIME=$(echo "$END - $START" | bc -l)

printf "%3d %5.2f\n" $P $RUNTIME

done

As a last step, we want to repeat the simulation for a specific core count five times to account for any outliers. The final script now looks like this

#!/bin/bash

# Load all needed modules (adjust to your specific site!)

module load GROMACS

GMX="gmx_mpi -quiet mdrun -deffnm MD_5NM_WATER -nsteps 10000 -ntomp 1 -pin on "

echo "# Cores Time/s"

for P in 72 66 60 54 48 42 36 30 24 18 12 8 4 2 1; do

for i in $(seq 5); do

START=$(date +%s.%N)

srun --cpu-bind=threads -n $P $GMX > gromacs.log 2>&1

END=$(date +%s.%N)

RUNTIME=$(echo "$END - $START" | bc -l)

printf "%3d %5.2f\n" $P $RUNTIME

done

done

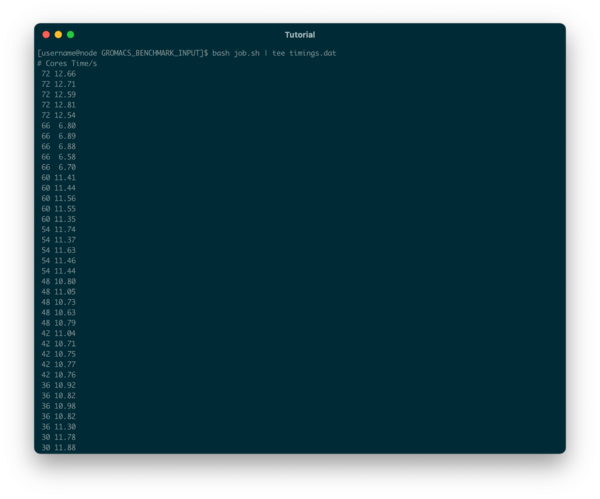

We can save this script in a file called e.g. job.sh and run it via the bash command. To see the output in the terminal and save it to file at the same time we pipe it to the tee command

bash job.sh | tee timings.dat

The output will look similar to this

Transform to a job script

We can easily transform this into a SLURM job script by adding the necessary SBATCH pragmas. Here we also show you the job script to only use physical cores instead of all threads per core by using --cpu-bind=cores and reducing the total amount of cores to 36. Note that we still request all logical cores to receive a full node. Adjust the SBATCH pragmas for your site!

#!/bin/bash

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=72

#SBATCH --partition=express

#SBATCH --time=01:00:00

#SBATCH --job-name=benchmark

#SBATCH --output=benchmark_results_noHT.out

# Load all needed modules (adjust to your specific site!)

module load GROMACS

GMX="gmx_mpi -quiet mdrun -deffnm MD_5NM_WATER -nsteps 10000 -ntomp 1 -pin on "

echo "# Cores Time/s"

for P in 36 30 24 18 12 8 4 2 1; do

for i in $(seq 5); do

START=$(date +%s.%N)

srun --cpu-bind=core -n $P $GMX > gromacs.log 2>&1

END=$(date +%s.%N)

RUNTIME=$(echo "$END - $START" | bc -l)

printf "%3d %5.2f\n" $P $RUNTIME

done

done

Next: Automated Benchmarking using JUBE

Previous: Interactive Manual Benchmarking